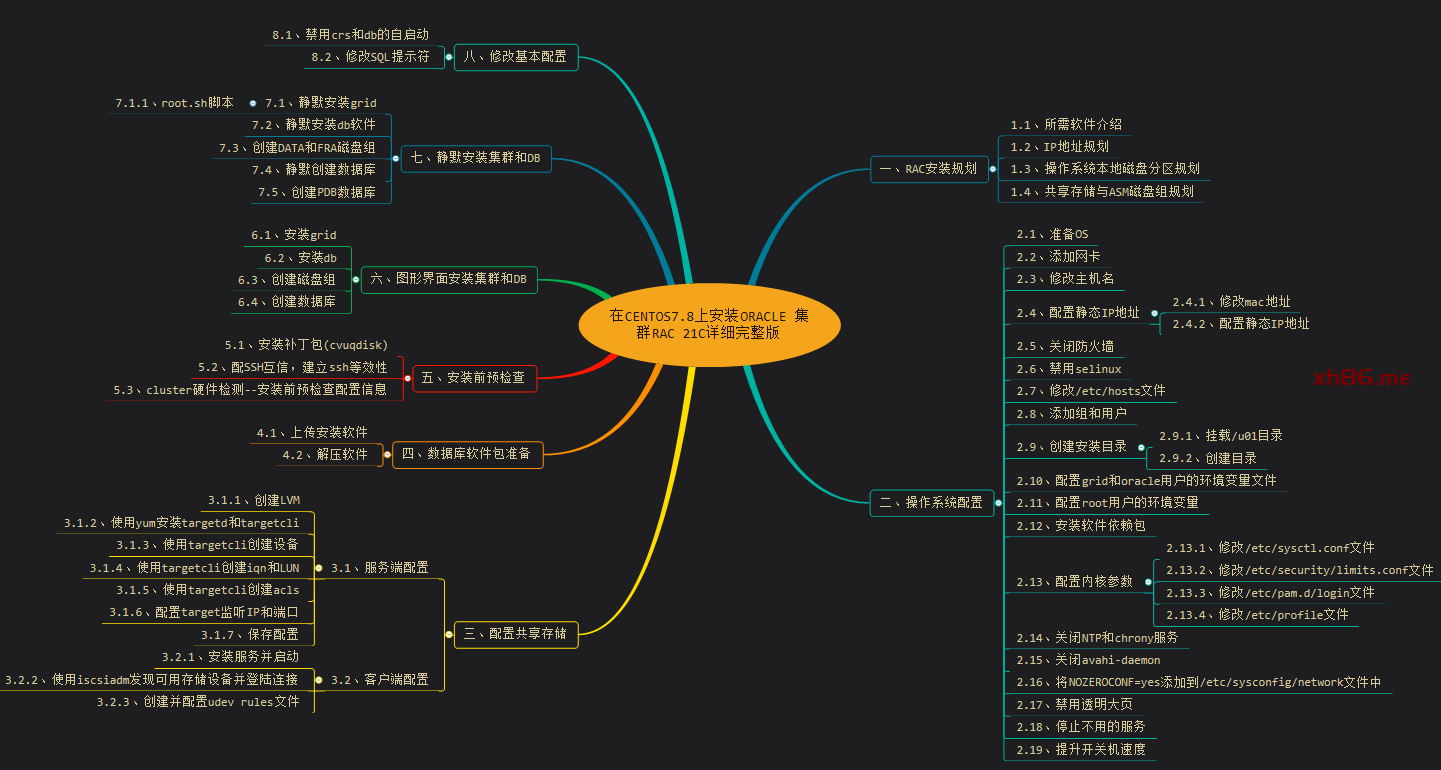

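I. RAC Installation Planning

1.1 Required Software

1.2 IP Address Planning

1.3 Local Disk Partition Planning for the OS

1.4 Shared Storage and ASM Disk Group Planning

II. Operating System Configuration

2.1 Prepare the OS

2.2 Add Network Adapters

2.3 Change the Hostnames

2.4 Configure Static IP Addresses

2.4.1 Modify MAC Addresses

2.4.2 Configure Static IP Addresses

2.5 Disable the Firewall

2.6 Disable SELinux

2.7 Edit /etc/hosts

2.8 Add Groups and Users

2.9 Create the Installation Directories

2.9.1 Mount /u01

2.9.2 Create the Directories

2.10 Configure Environment Variables for the grid and oracle Users

2.11 Configure Environment Variables for the root User

2.12 Install Dependency Packages

2.13 Configure Kernel Parameters

2.13.1 Edit /etc/sysctl.conf

2.13.2 Edit /etc/security/limits.conf

2.13.3 Edit /etc/pam.d/login

2.13.4 Edit /etc/profile

2.14 Disable the NTP and chrony Services

2.15 Disable avahi-daemon

2.16 Add NOZEROCONF=yes to /etc/sysconfig/network

2.17 Disable Transparent HugePages

2.18 Stop Unused Services

2.19 Speed Up Boot and Shutdown

III. Configure Shared Storage

3.1 Server Configuration

3.1.1 Create LVM Volumes

3.1.2 Install targetd and targetcli with yum

3.1.3 Create Block Devices with targetcli

3.1.4 Create the IQN and LUNs with targetcli

3.1.5 Create ACLs with targetcli

3.1.6 Configure the Target Listening IP and Port

3.1.7 Save the Configuration

3.2 Client Configuration

3.2.1 Install and Start the Services

3.2.2 Discover and Log In to the Storage with iscsiadm

3.2.3 Create and Configure the udev Rules File

IV. Prepare the Database Software

4.1 Upload the Installation Files

4.2 Unzip the Software

V. Pre-Installation Checks

5.1 Install the cvuqdisk Package

5.2 Configure SSH Trust (SSH User Equivalence)

5.3 Cluster Hardware Check: Pre-Installation Configuration Check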

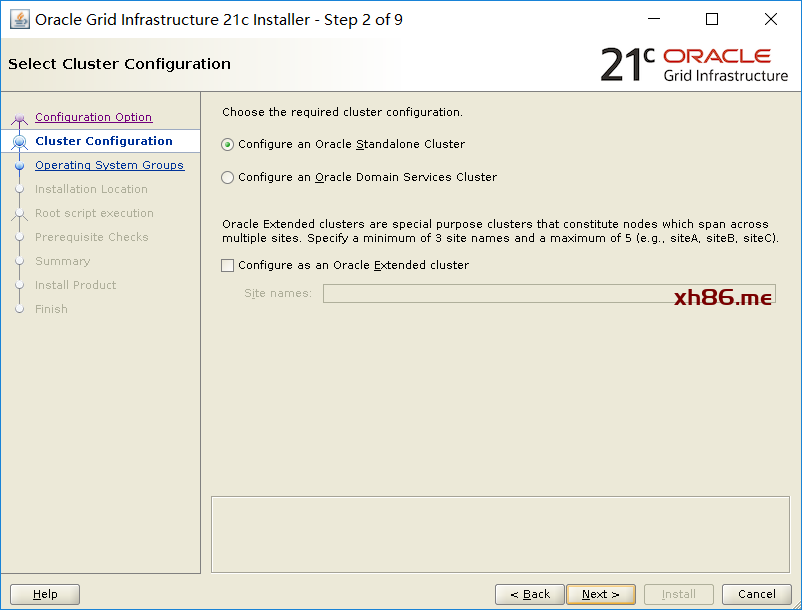

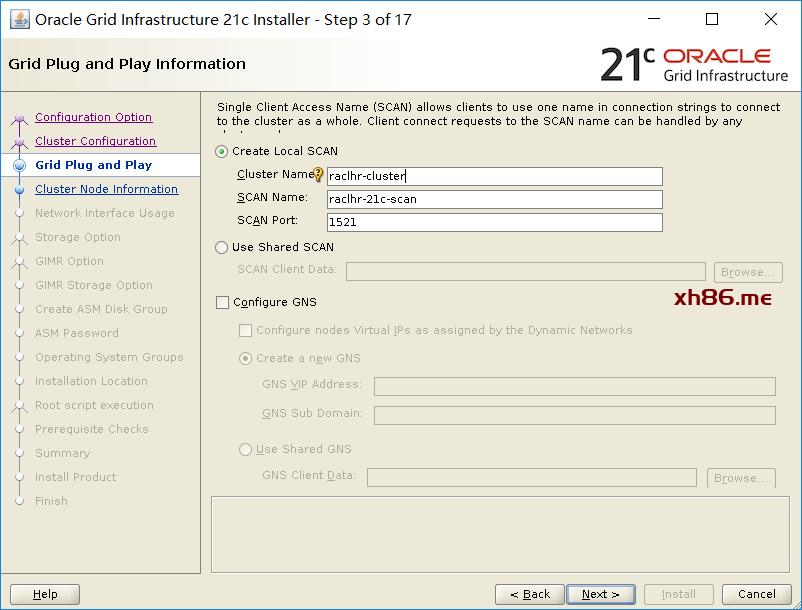

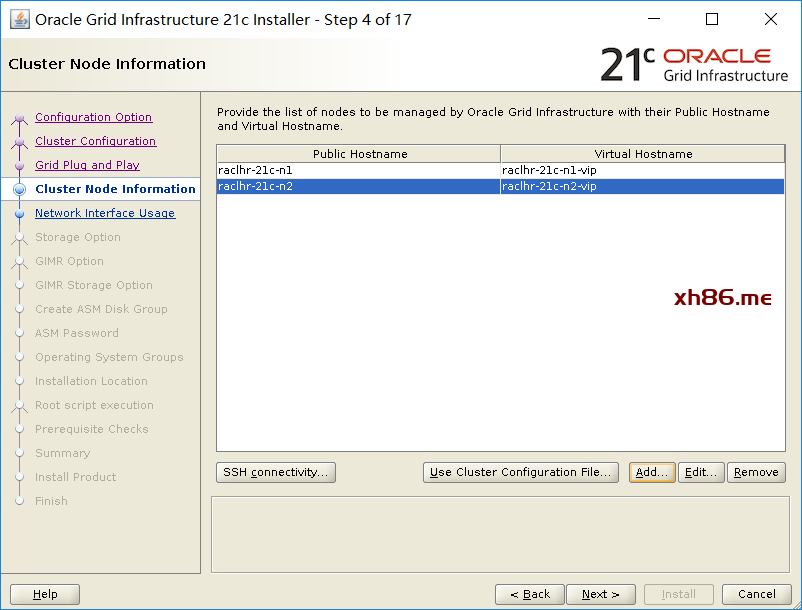

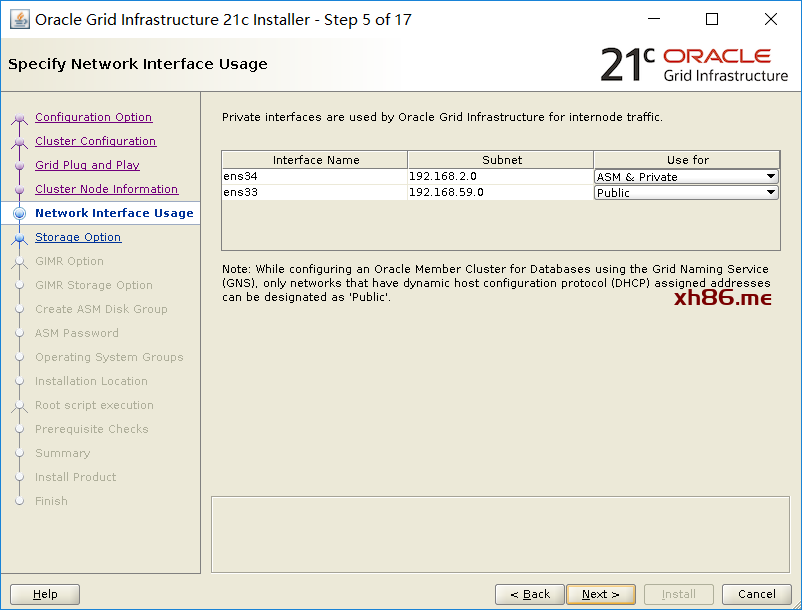

VI. GUI Installation of the Cluster and Database

6.1 Install Grid Infrastructure

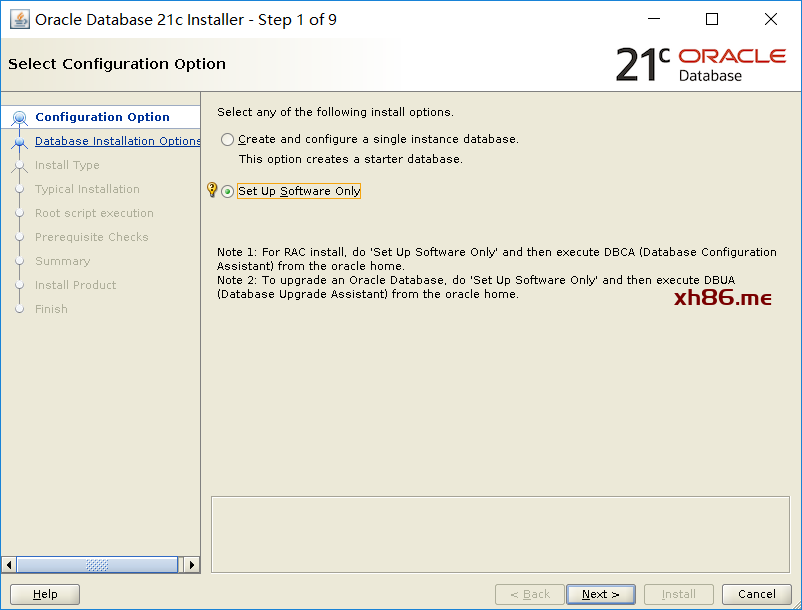

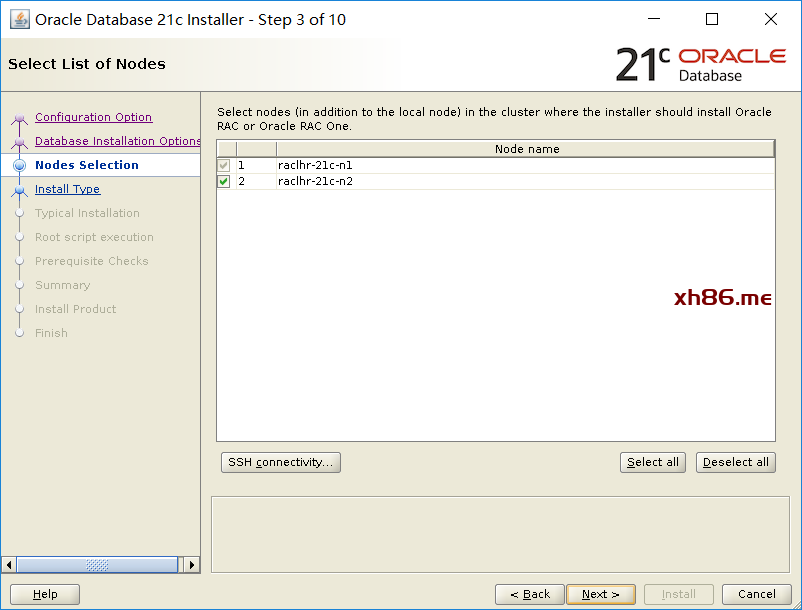

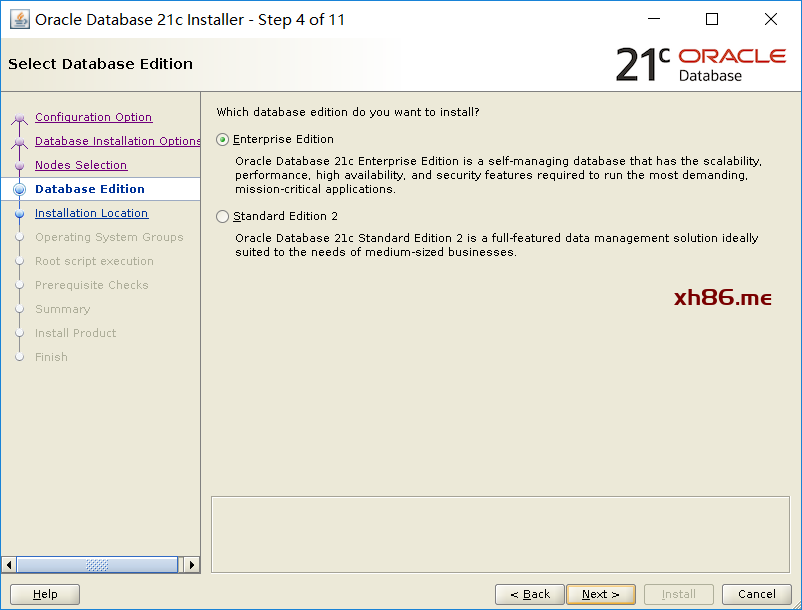

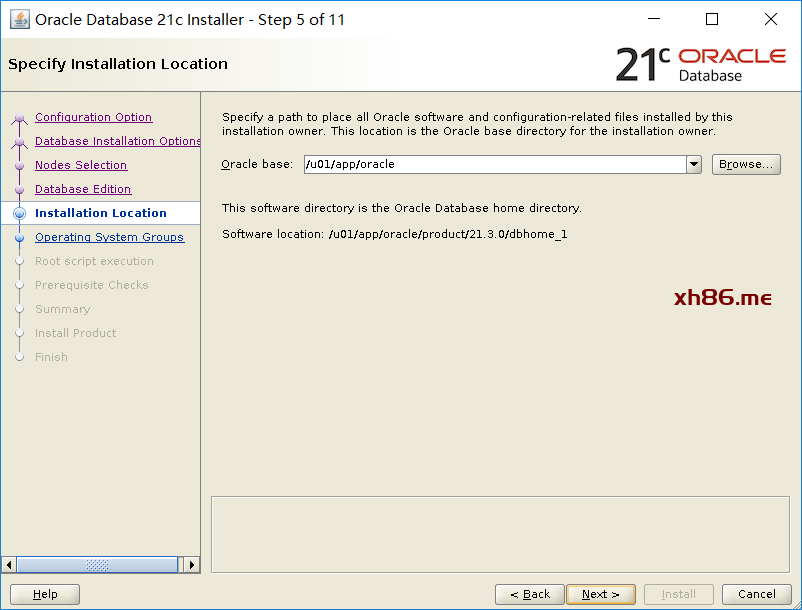

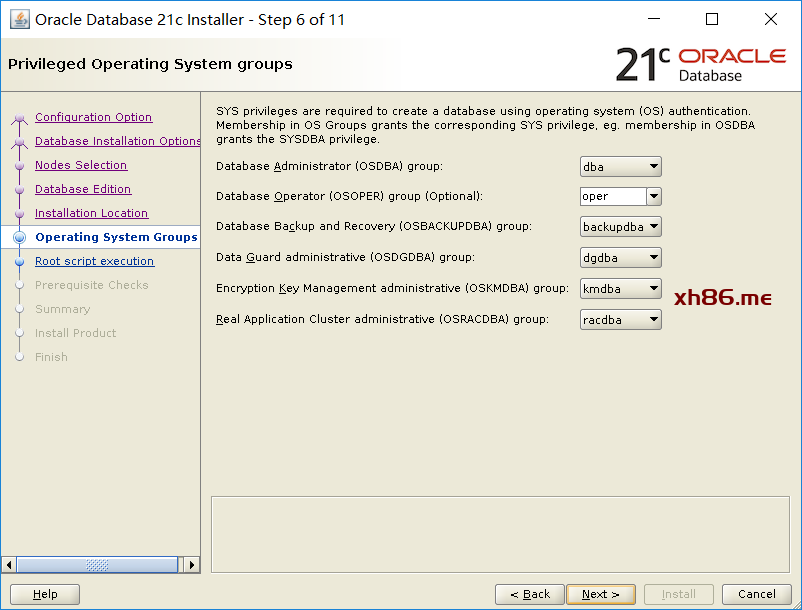

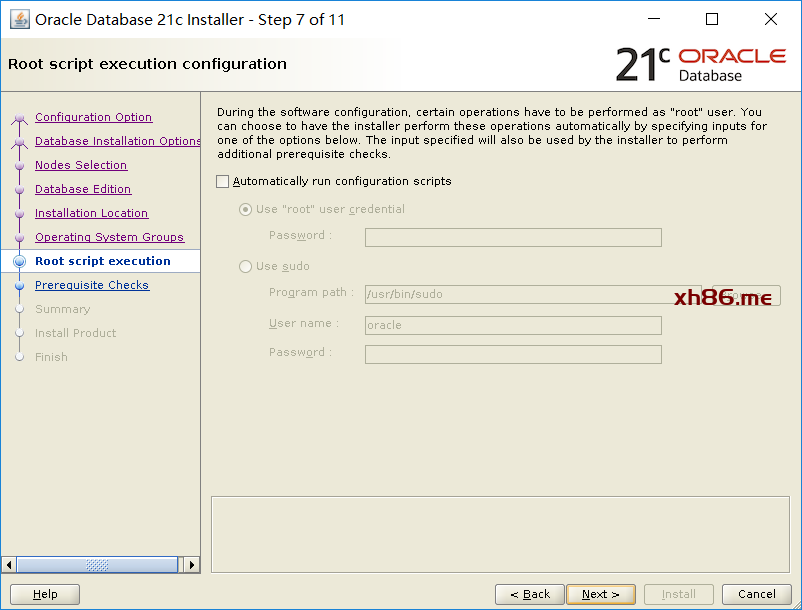

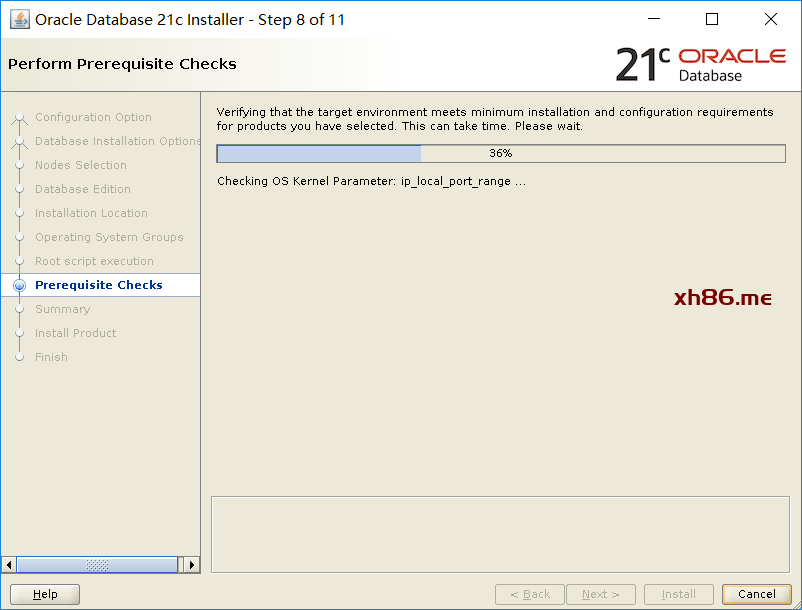

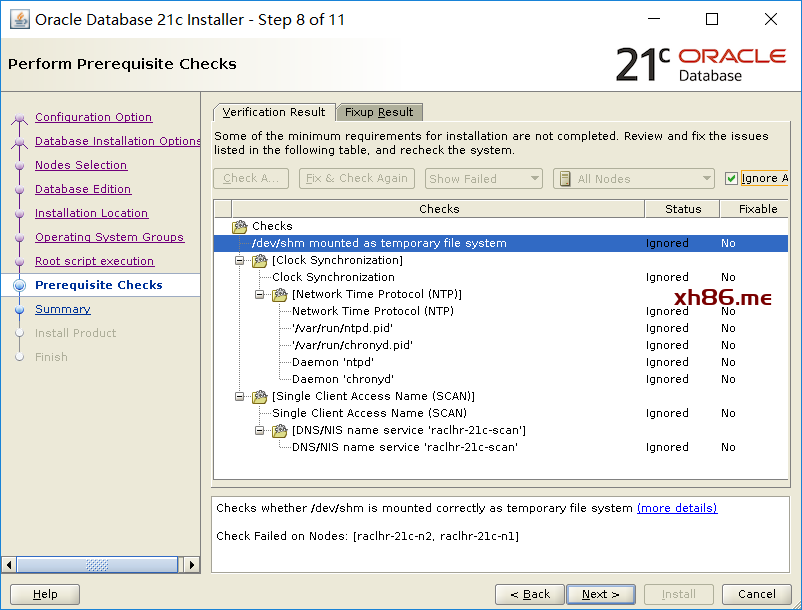

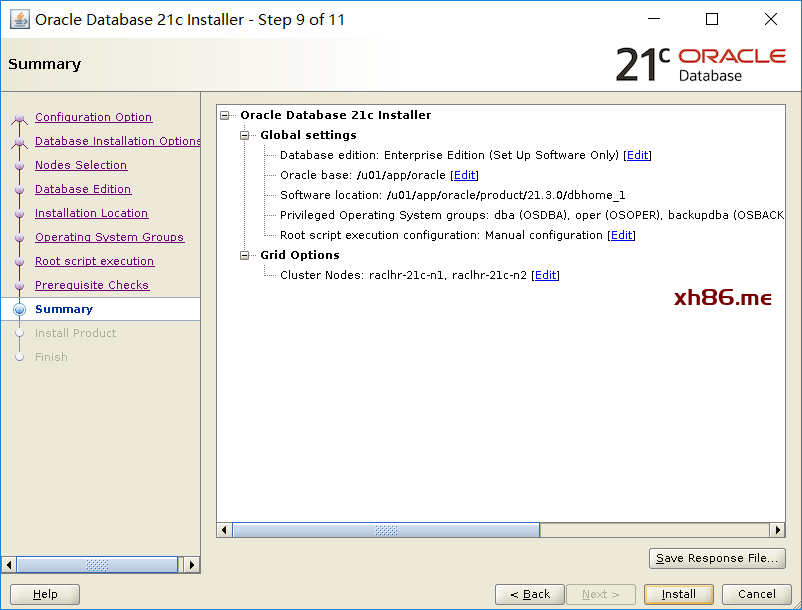

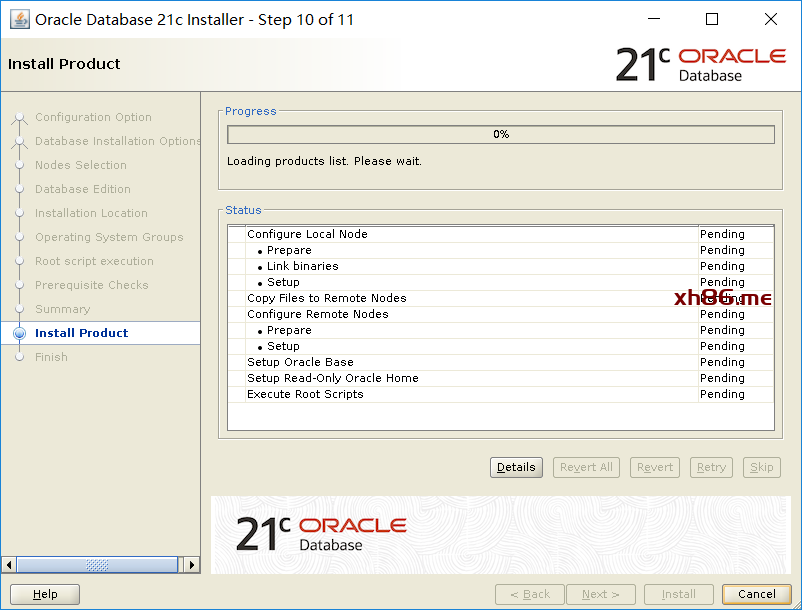

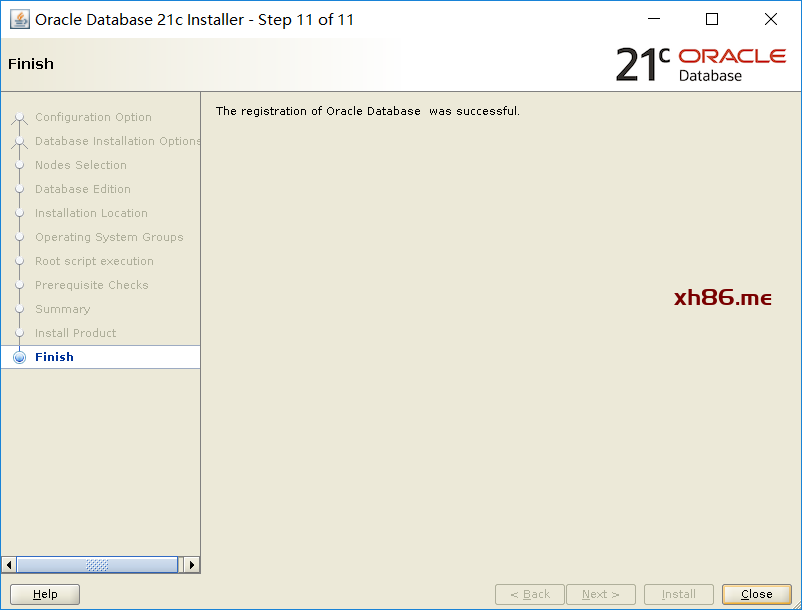

6.2 Install the Database Software

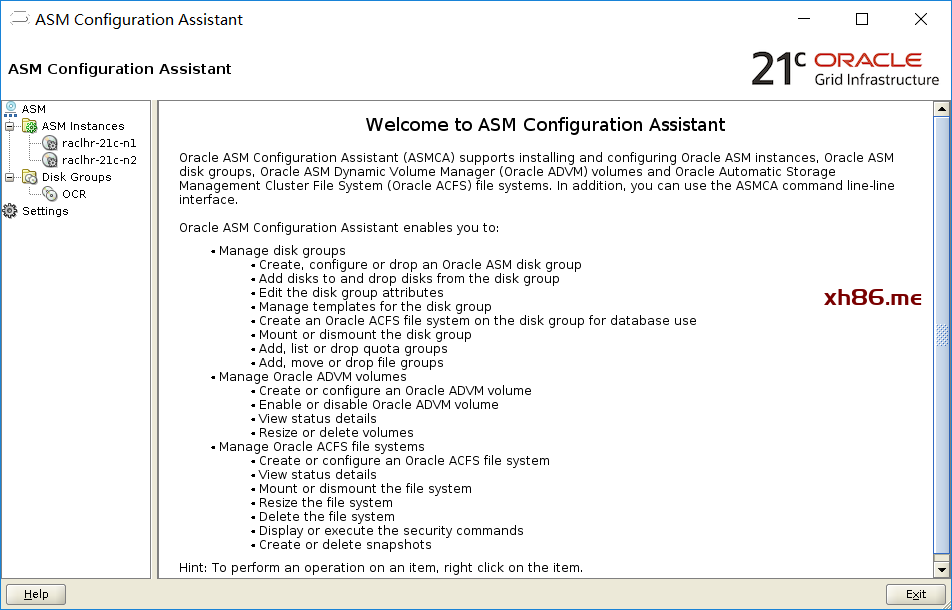

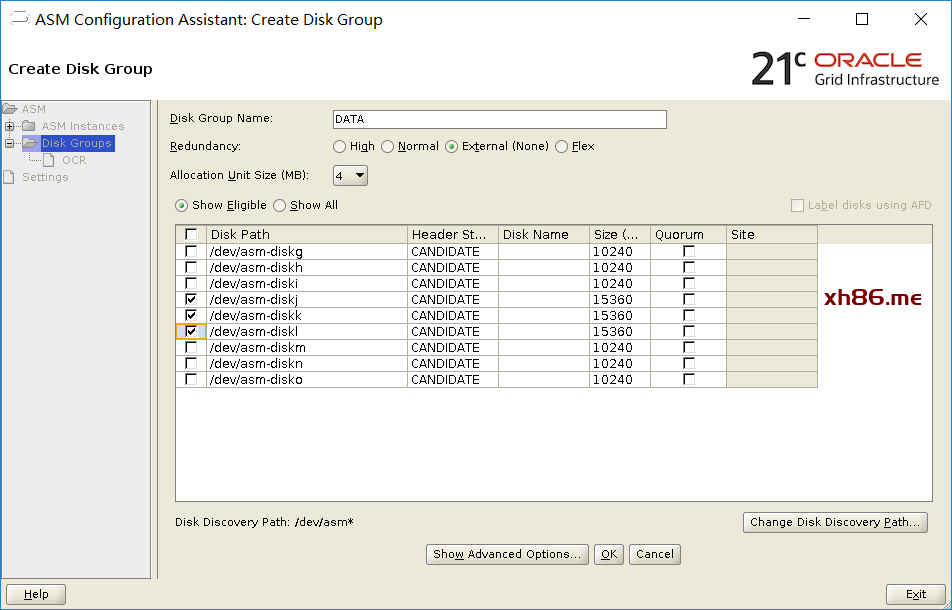

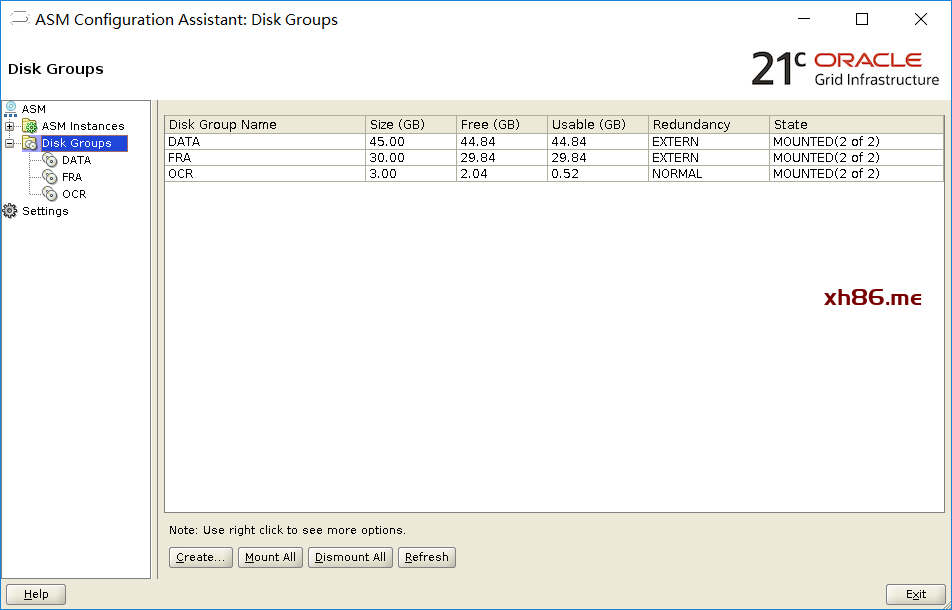

6.3 Create Disk Groups

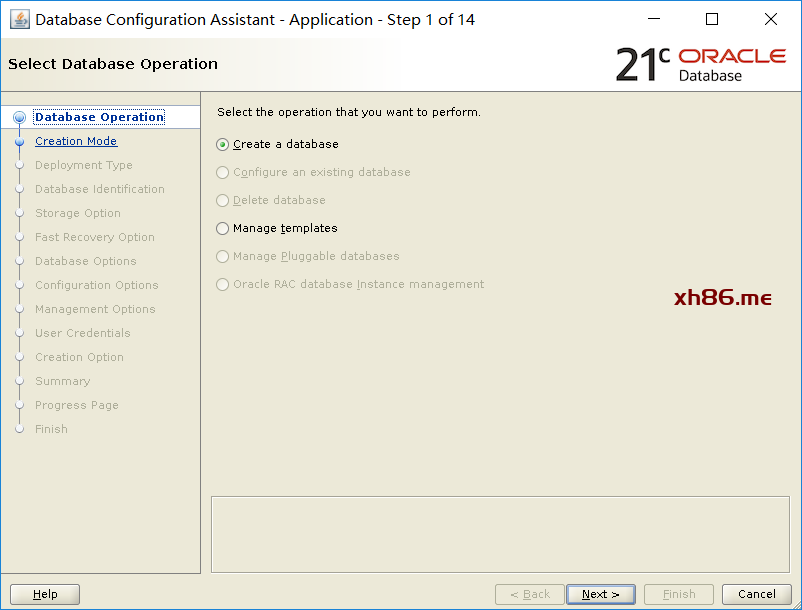

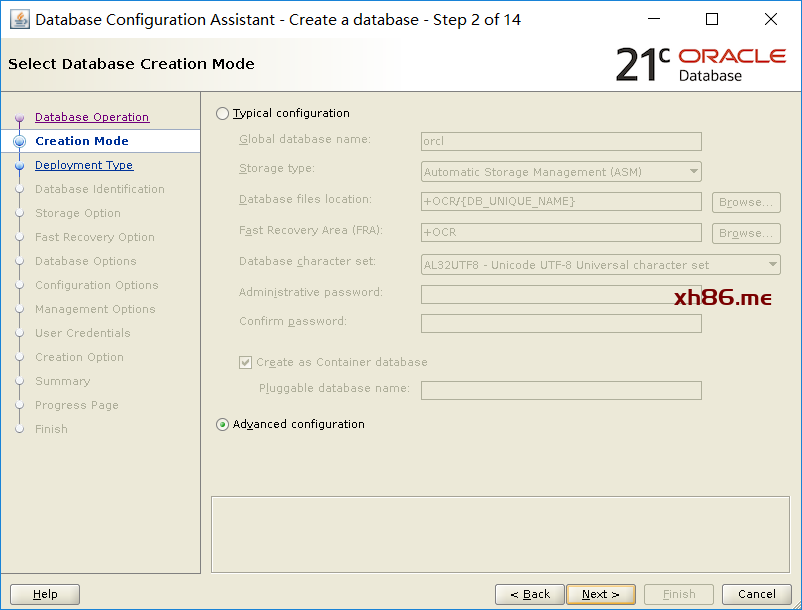

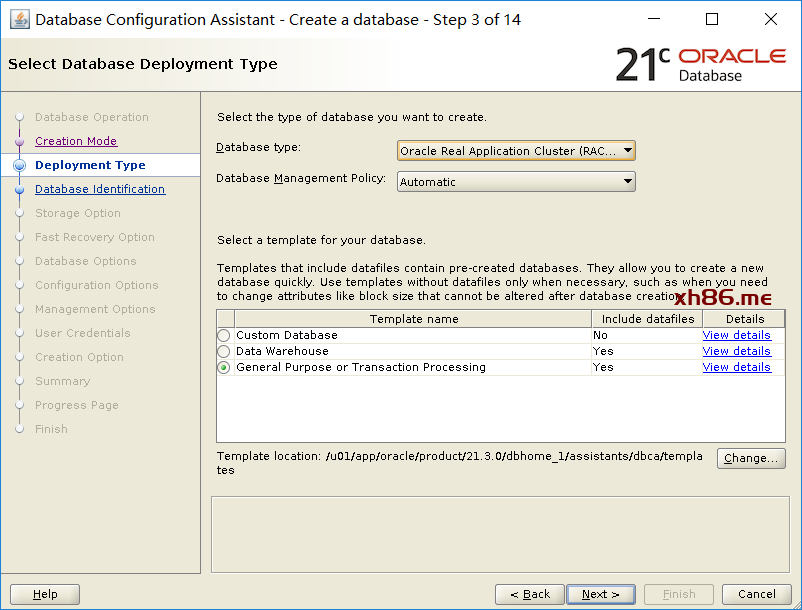

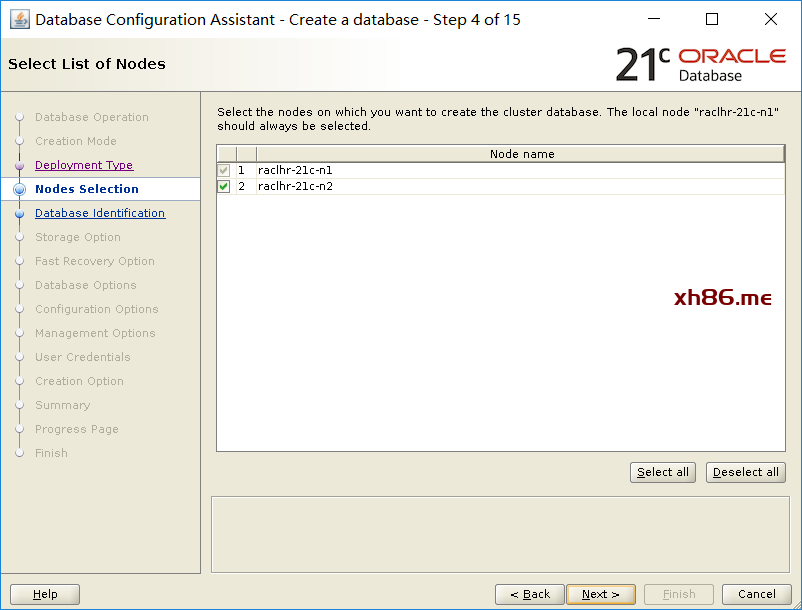

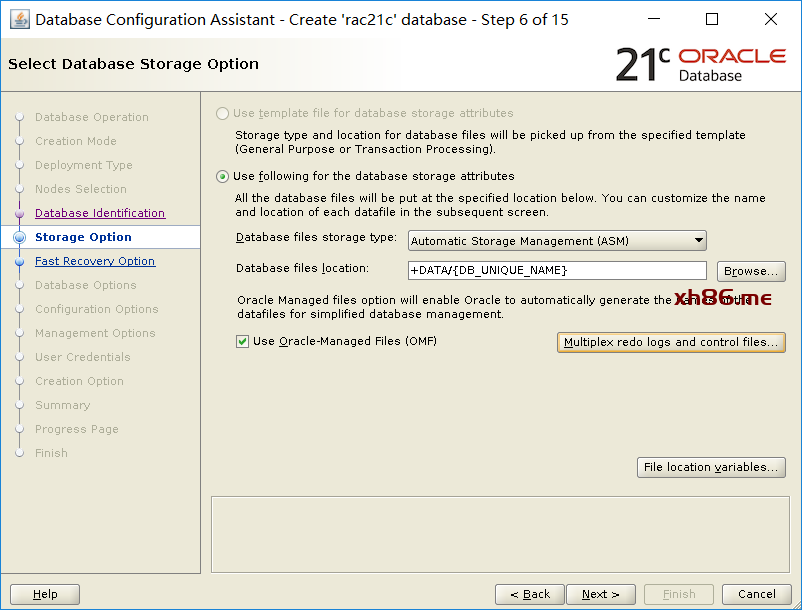

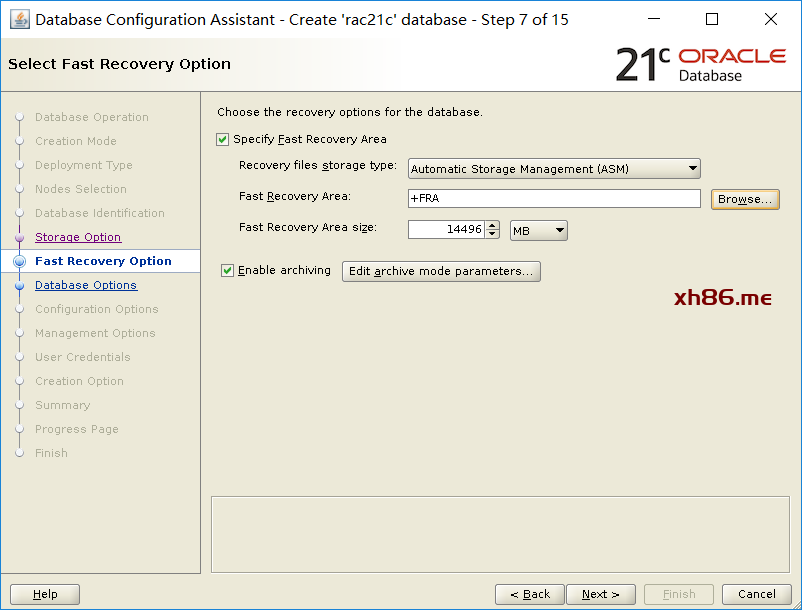

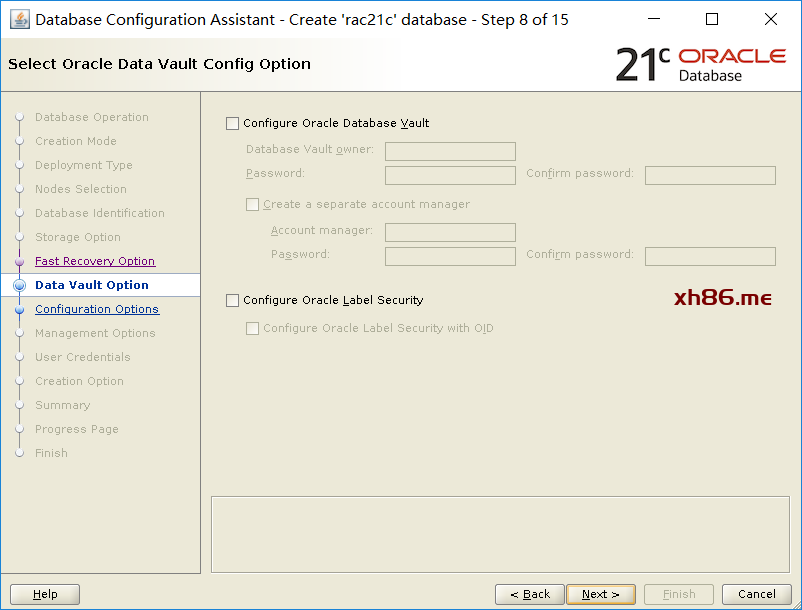

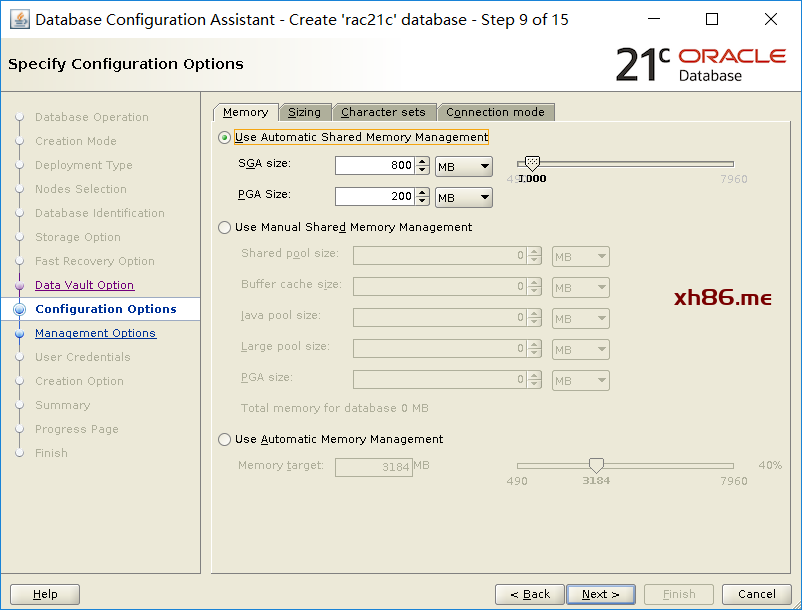

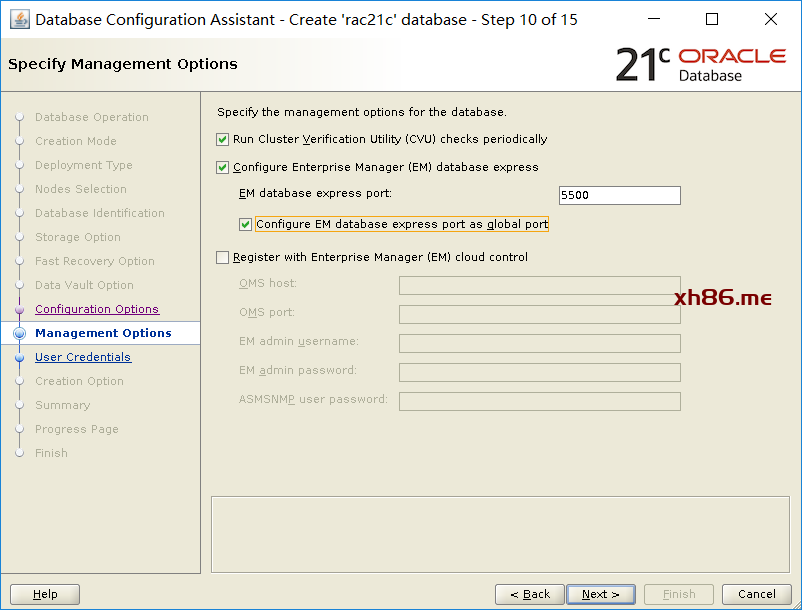

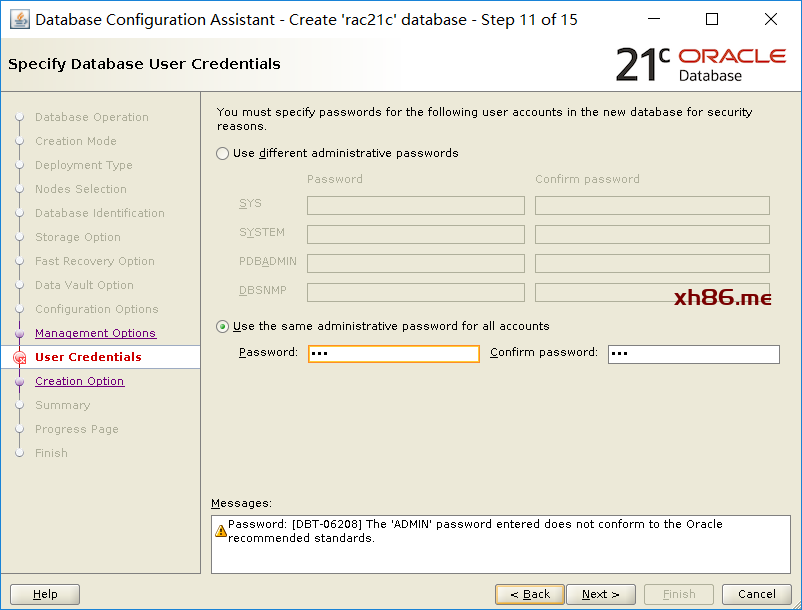

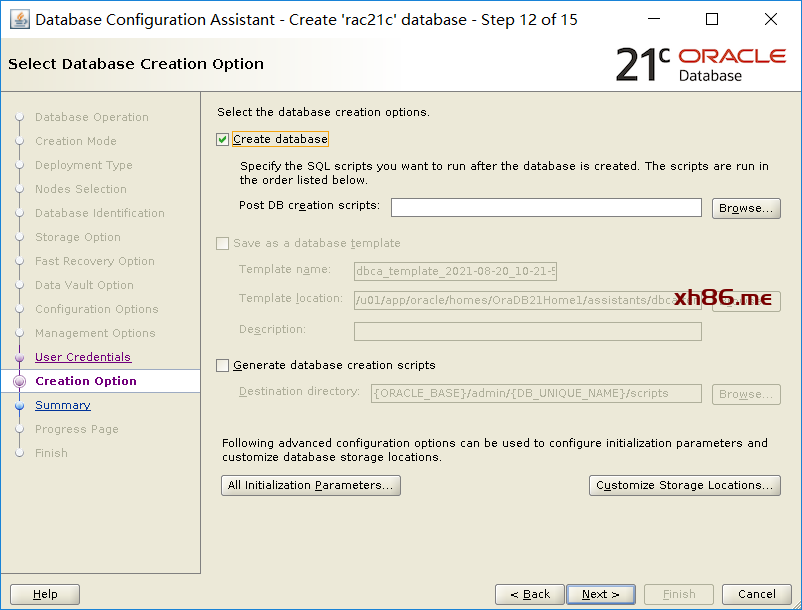

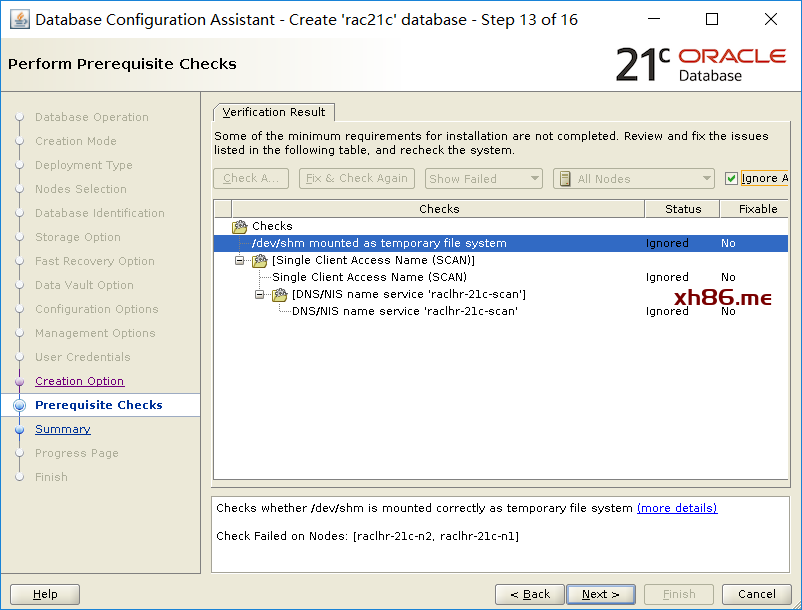

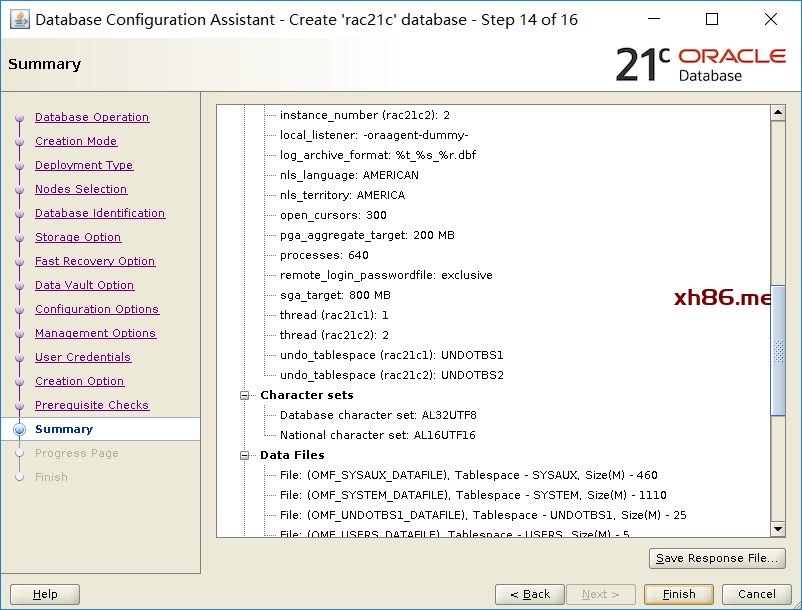

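6.4 Create the Database

VII. Silent Installation of the Cluster and Database

7.1 Silent Installation of Grid Infrastructure

7.1.1 The root.sh Script

7.2 Silent Installation of the Database Software

7.3 Create the DATA and FRA Disk Groups

7.4 Silent Database Creation

7.5 Create a PDB

VIII. Basic Configuration Changes

8.1 Disable Autostart of CRS and the Database

8.2 Change the SQL Prompt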

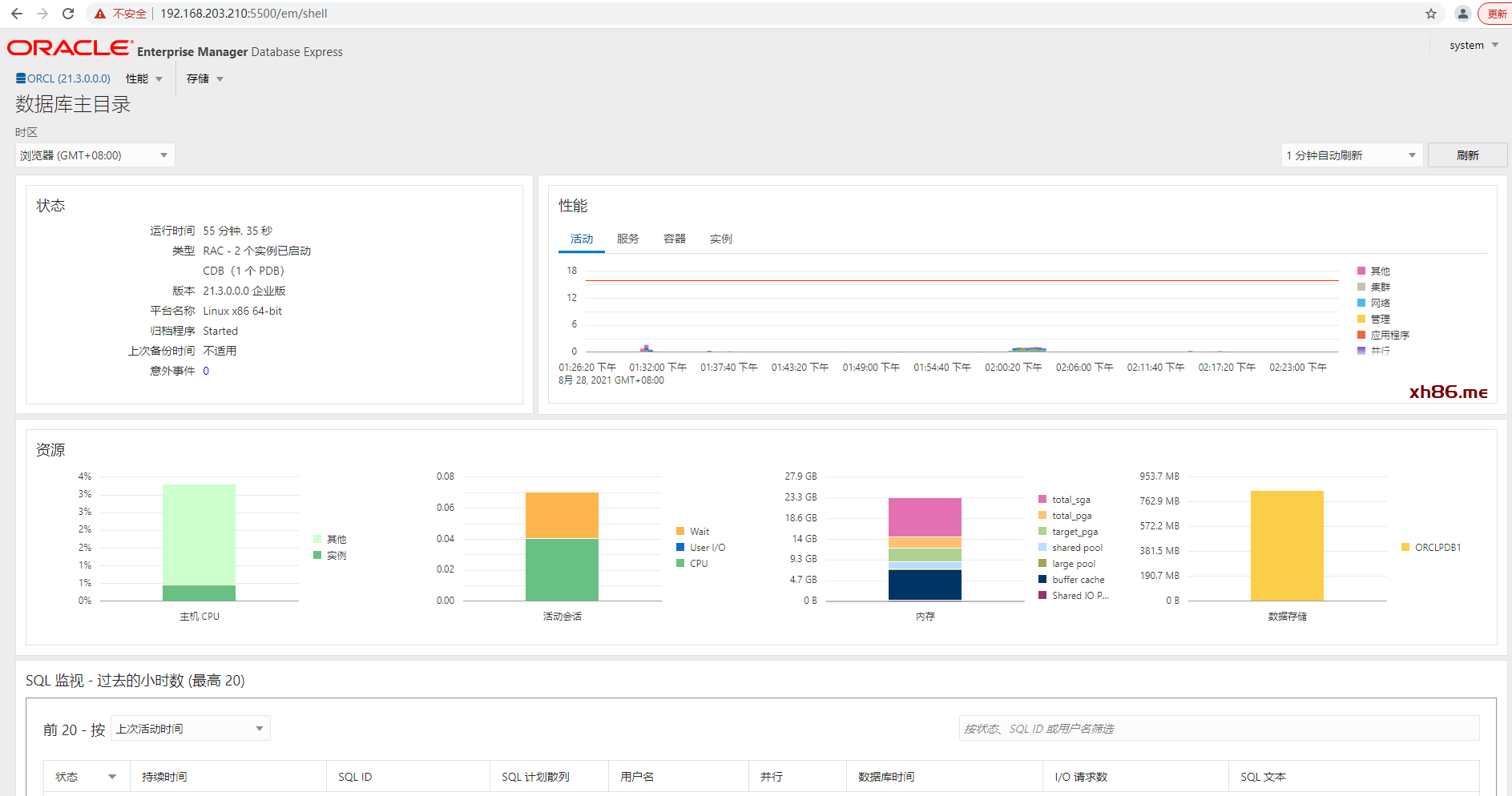

IX. Configure EM

I. RAC Installation Planning

Official installation guide: https://docs.oracle.com/en/database/oracle/oracle-database/21/rilin/index.html

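1.1 Required Software

Oracle RAC does not support heterogeneous platforms. A single cluster can contain machines of different speeds and sizes, but all nodes must run the same operating system. Oracle RAC does not support mixing machines with different chip architectures.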

| No. | Type | Item | MD5 |
|---|---|---|---|
| 1 | Database | LINUX.X64_213000_db_home.zip | 8ac915a800800ddf16a382506d3953db |
| 2 | Clusterware (Grid Infrastructure) | LINUX.X64_213000_grid_home.zip | b3fbdb7621ad82cbd4f40943effdd1be |
| 3 | Operating system | CentOS-7.8-x86_64-DVD-2003.iso | 16673979023254EA09CC0B57853A7BBD |
| 4 | Virtualization software | VMware Workstation Pro 16.0.0 build-16894299 | |
| 5 | Xmanager Enterprise 4 | Xmanager Enterprise 4, used to display the graphical installer | |
| 6 | SecureCRTPortable.exe | Version 6.6.1 (build 289), bundles SecureCRT and SecureFX for SSH connections and FTP uploads | |

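Notes:

1. The OS needs at least 8 GB of memory.

2. Before installing the OS and the database, verify the MD5 checksums of the downloaded packages to make sure they are intact.

- For downloading the 21c database software, see: https://www.xmmup.com/dbbao76zaidockerzhongzhixu2bujikeyongyouoracle-21chuanjing.html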
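The checksum comparison in note 2 can be scripted. Below is a minimal bash sketch. It assumes GNU coreutils `md5sum` is available on the machine that holds the downloads. The function name `verify_md5` is illustrative and is not part of any Oracle tooling. The comparison is case-insensitive because the table lists the ISO digest in upper case.

```bash
#!/usr/bin/env bash
# verify_md5 FILE EXPECTED_MD5
# Compares the MD5 digest of FILE with EXPECTED_MD5 (case-insensitive).
# Returns 0 on match, 1 on mismatch, 2 if the file does not exist.
verify_md5() {
  local file=$1 expected=$2 actual
  if [ ! -f "$file" ]; then
    echo "MISSING: $file" >&2
    return 2
  fi
  actual=$(md5sum "$file" | awk '{print $1}')
  # md5sum prints lower case; the table mixes upper and lower case
  if [ "${actual,,}" = "${expected,,}" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file (expected $expected, got $actual)" >&2
    return 1
  fi
}

# Example (run in the directory holding the downloads):
# verify_md5 LINUX.X64_213000_db_home.zip   8ac915a800800ddf16a382506d3953db
# verify_md5 LINUX.X64_213000_grid_home.zip b3fbdb7621ad82cbd4f40943effdd1be
# verify_md5 CentOS-7.8-x86_64-DVD-2003.iso 16673979023254EA09CC0B57853A7BBD
```

A non-zero return code means the file should be downloaded again before you continue.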

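1.2 IP Address Planning

Since Oracle 11g, at least 7 IP addresses and 2 NICs are required. The public, VIP and SCAN addresses are on the same subnet, and the private addresses are on a different subnet. Hostnames must not contain underscores; for example, RAC_01 is not allowed. Run ifconfig -a on both nodes to confirm that the NIC names are identical. Before installation, the 4 public and private IPs should respond to ping. The VIP and SCAN addresses should not respond: that is 3 addresses in the minimal 7-IP layout and 5 in the plan below.

Starting with 18c, at least 3 SCAN IPs are recommended.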

| Node/Hostname | IP Address | Interface Name | Address Type | Registered In | VMware Virtual Network | OS NIC |
|---|---|---|---|---|---|---|
| raclhr-21c-n1 | 192.168.59.62 | raclhr-21c-n1 | Public | /etc/hosts | VMnet8 (NAT) | ens33 |
| raclhr-21c-n1 | 192.168.59.64 | raclhr-21c-n1-vip | Virtual | /etc/hosts | VMnet8 (NAT) | ens33 |
| raclhr-21c-n1 | 192.168.2.62 | raclhr-21c-n1-priv | Private | /etc/hosts | VMnet2 (host-only) | ens34 |
| raclhr-21c-n2 | 192.168.59.63 | raclhr-21c-n2 | Public | /etc/hosts | VMnet8 (NAT) | ens33 |
| raclhr-21c-n2 | 192.168.59.65 | raclhr-21c-n2-vip | Virtual | /etc/hosts | VMnet8 (NAT) | ens33 |
| raclhr-21c-n2 | 192.168.2.63 | raclhr-21c-n2-priv | Private | /etc/hosts | VMnet2 (host-only) | ens34 |
| | 192.168.59.66, 192.168.59.67, 192.168.59.68 | raclhr-21c-scan | SCAN | /etc/hosts | VMnet8 (NAT) | ens33 |
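The naming and addressing rules above can be checked before anything is installed. This is only a sketch. `valid_rac_hostname`, `ip_to_int` and `same_subnet` are hypothetical helper names. The allowed hostname characters (letters, digits and hyphens, with no leading or trailing hyphen) are an assumption based on standard RFC 1123 hostname rules, which also exclude the underscore mentioned above. The script prints nothing when the plan in the table is consistent.

```bash
#!/usr/bin/env bash
# Sketch: sanity checks for the hostname/IP plan in 1.2.

# valid_rac_hostname NAME: letters, digits and hyphens only, no leading or
# trailing hyphen (so names with underscores, such as RAC_01, fail).
valid_rac_hostname() {
  local re='^[A-Za-z0-9]([A-Za-z0-9-]*[A-Za-z0-9])?$'
  [[ $1 =~ $re ]]
}

# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# same_subnet IP1 IP2 NETMASK: succeed if both IPs are in the same network.
same_subnet() {
  local ip1 ip2 mask
  ip1=$(ip_to_int "$1")
  ip2=$(ip_to_int "$2")
  mask=$(ip_to_int "$3")
  (( (ip1 & mask) == (ip2 & mask) ))
}

# Check the plan: valid hostnames, VIP/SCAN on the public subnet,
# private network on a different subnet. Prints only problems.
for name in raclhr-21c-n1 raclhr-21c-n2 raclhr-21c-scan; do
  valid_rac_hostname "$name" || echo "invalid hostname: $name"
done
for ip in 192.168.59.64 192.168.59.65 192.168.59.66 192.168.59.67 192.168.59.68; do
  same_subnet 192.168.59.62 "$ip" 255.255.255.0 || echo "not on the public subnet: $ip"
done
if same_subnet 192.168.59.62 192.168.2.62 255.255.255.0; then
  echo "private network must be a different subnet"
fi
```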

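1.3 Local Disk Partition Planning for the OS

Every partition except /boot is an LVM logical volume, which makes it easier to extend the file systems later.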

| No. | Mount Point | Size | Logical Volume | Purpose |
|---|---|---|---|---|
| 1 | /u01 | 50G | /dev/mapper/vg_orasoft-lv_orasoft_u01 | Installation directory for oracle and grid |
| 2 | /soft | 20G | /dev/mapper/VG_OS-lv_VG_OS_soft | Installation media, at least 10G |

1.4 Shared Storage and ASM Disk Group Planning

| No. | ASM Disks | Disk Group | Redundancy | Size | Purpose | Notes |
|---|---|---|---|---|---|---|
| 1 | /dev/asm-diskd /dev/asm-diske /dev/asm-diskf | OCR | Normal | 3G | OCR + voting disk | At least 1G |
| 2 | /dev/asm-diskg /dev/asm-diskh /dev/asm-diski | MGMT | External | 30G | MGMT | At least 30G; installing the GIMR component is optional |
| 3 | /dev/asm-diskj /dev/asm-diskk /dev/asm-diskl | DATA | External | 45G | Database data files | |
| 4 | /dev/asm-diskm /dev/asm-diskn /dev/asm-disko | FRA | External | 30G | Fast recovery area | |

II. Operating System Configuration

Unless stated otherwise, run the following steps on both nodes.

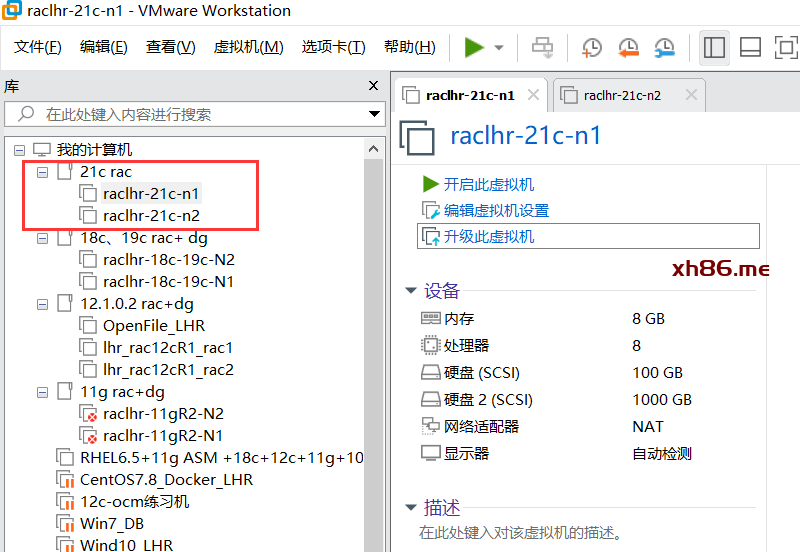

2.1 Prepare the OS

Install one virtual machine, then copy it and rename the copy:

Open the copy in VMware:

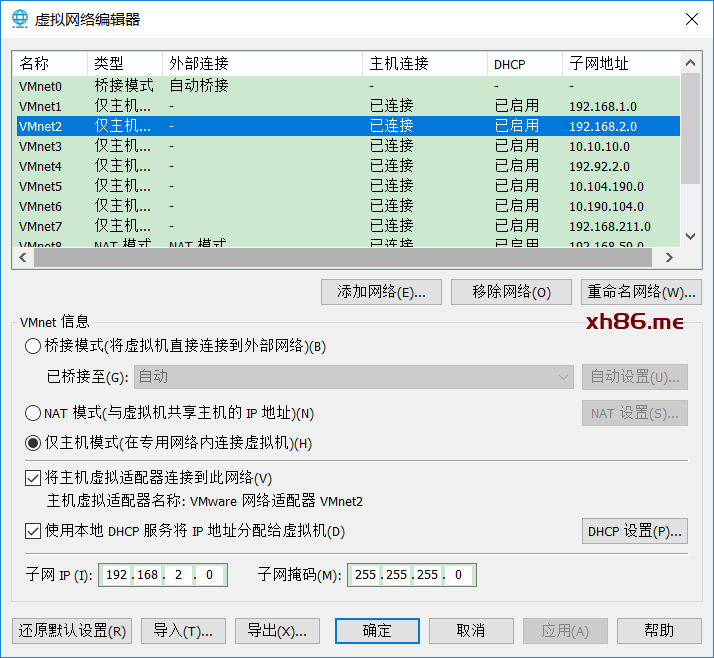

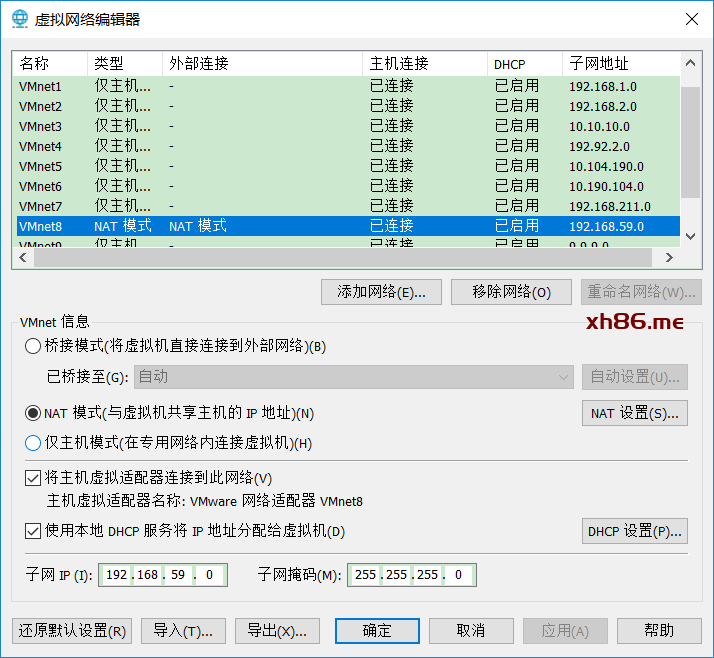

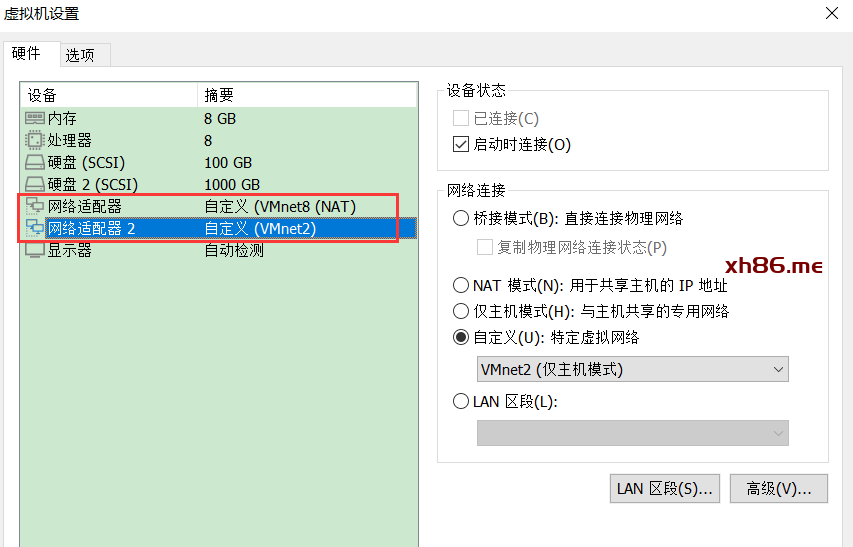

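2.2 Add Network Adapters

First, add the virtual network adapters in VMware:

VMnet2 settings:

VMnet8 settings:

The gateway is 192.168.59.2:

Remove the existing NICs from the VM, then add 2 new NICs: VMnet8 as the public NIC and VMnet2 as the private NIC: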

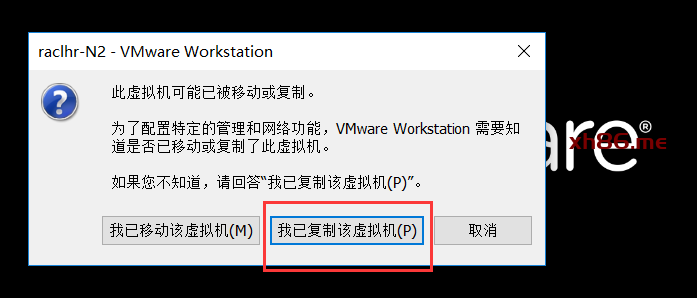

When the copied VM is powered on, choose "I Copied It".

2.3 Change the Hostnames

Set the hostnames of the 2 nodes to raclhr-21c-n1 and raclhr-21c-n2:

hostnamectl set-hostname raclhr-21c-n1    # on node 1

hostnamectl set-hostname raclhr-21c-n2    # on node 2

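2.4 Configure Static IP Addresses

Configure a static IP address on each of the 2 nodes.

When you configure node 2, change the IP address (IPADDR). The NIC MAC addresses on the 2 nodes must be different; otherwise the nodes cannot communicate with each other.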

2.4.1 Modify MAC Addresses

Use ifconfig or ip link show to look up the MAC addresses, then write them into /etc/udev/rules.d/70-persistent-ipoib.rules. Use each node's own MAC addresses:

cat > /etc/udev/rules.d/70-persistent-ipoib.rules <<"EOF"

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:0c:29:25:83:db", ATTR{type}=="1", KERNEL=="eth*", NAME="ens33"

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:0c:29:25:83:e5", ATTR{type}=="1", KERNEL=="eth*", NAME="ens34"

EOF

2.4.2 Configure Static IP Addresses

Adjust IPADDR and HWADDR for each node.

1. Public network: /etc/sysconfig/network-scripts/ifcfg-ens33

cat > /etc/sysconfig/network-scripts/ifcfg-ens33 <<"EOF"

DEVICE=ens33

NAME=ens33

IPADDR=192.168.59.62

NETMASK=255.255.255.0

GATEWAY=192.168.59.2

ONBOOT=yes

USERCTL=no

BOOTPROTO=static

HWADDR=00:0c:29:25:83:db

TYPE=Ethernet

IPV6INIT=no

DNS1=114.114.114.114

DNS2=8.8.8.8

NM_CONTROLLED=no

EOF

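2. Private network: /etc/sysconfig/network-scripts/ifcfg-ens34. Do not configure a gateway on the second NIC. If you do, the system's default gateway becomes that NIC's gateway and the host loses Internet access.

cat > /etc/sysconfig/network-scripts/ifcfg-ens34 <<"EOF"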

DEVICE=ens34

NAME=ens34

IPADDR=192.168.2.62

NETMASK=255.255.255.0

ONBOOT=yes

USERCTL=no

BOOTPROTO=static

HWADDR=00:0c:29:25:83:e5

TYPE=Ethernet

IPV6INIT=no

DNS1=114.114.114.114

DNS2=8.8.8.8

NM_CONTROLLED=no

EOF

After you complete this part, you can reboot the OS once.
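Node 2 differs only in IPADDR and HWADDR, so both ifcfg files can be generated from one function. This is a minimal sketch. `gen_ifcfg` is a hypothetical helper that reproduces the keys used above. It writes GATEWAY only when a fourth argument is given, so the private NIC stays gateway-free as the note above requires.

```bash
#!/usr/bin/env bash
# gen_ifcfg DEVICE IPADDR HWADDR [GATEWAY]
# Prints an ifcfg file with the same keys as the files above.
gen_ifcfg() {
  local dev=$1 ip=$2 mac=$3 gw=${4:-}
  printf '%s\n' "DEVICE=$dev" "NAME=$dev" "IPADDR=$ip" "NETMASK=255.255.255.0"
  # Only the public NIC gets a gateway (see the note on the private NIC)
  if [ -n "$gw" ]; then
    printf 'GATEWAY=%s\n' "$gw"
  fi
  printf '%s\n' "ONBOOT=yes" "USERCTL=no" "BOOTPROTO=static" "HWADDR=$mac" \
    "TYPE=Ethernet" "IPV6INIT=no" "DNS1=114.114.114.114" "DNS2=8.8.8.8" "NM_CONTROLLED=no"
}
```

On node 2, for example, you can read the MAC addresses from sysfs instead of typing them:

- `gen_ifcfg ens33 192.168.59.63 "$(cat /sys/class/net/ens33/address)" 192.168.59.2 > /etc/sysconfig/network-scripts/ifcfg-ens33`
- `gen_ifcfg ens34 192.168.2.63 "$(cat /sys/class/net/ens34/address)" > /etc/sysconfig/network-scripts/ifcfg-ens34`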

2.5 Disable the Firewall

systemctl disable firewalld

systemctl stop firewalld

systemctl status firewalld

systemctl list-unit-files | grep fire

2.6 Disable SELinux

Set SELINUX=disabled in /etc/selinux/config manually, or run:

sed -i '/^SELINUX=.*/ s//SELINUX=disabled/' /etc/selinux/config

The change takes effect only after a reboot. Verify:

[root@raclhr-21c-n1 ~]# getenforce

Disabled

2.7 Edit /etc/hosts

Add the following entries:

#Public IP

192.168.59.62 raclhr-21c-n1

192.168.59.63 raclhr-21c-n2

#Private IP

192.168.2.62 raclhr-21c-n1-priv

192.168.2.63 raclhr-21c-n2-priv

#Virtual IP

192.168.59.64 raclhr-21c-n1-vip

192.168.59.65 raclhr-21c-n2-vip

#Scan IP

192.168.59.66 raclhr-21c-scan

192.168.59.67 raclhr-21c-scan

192.168.59.68 raclhr-21c-scan

Note: keep the 127.0.0.1 localhost line.
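If you append the entries above a second time, every entry is duplicated. Below is a sketch of an idempotent alternative, assuming the same addresses as the table in 1.2. `add_host_entry` and `add_rac_hosts` are hypothetical helpers. They append an "IP hostname" pair only when that exact pair is not already present. Existing lines such as 127.0.0.1 localhost are left untouched, and the `#` comment headers are skipped.

```bash
#!/usr/bin/env bash
# add_host_entry FILE IP NAME: append "IP NAME" unless that pair already exists.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  if awk -v ip="$ip" -v n="$name" '$1 == ip && $2 == n { found = 1 } END { exit (found ? 0 : 1) }' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# add_rac_hosts FILE: add the public, private, virtual and SCAN entries.
add_rac_hosts() {
  local file=$1 ip name
  while read -r ip name; do
    add_host_entry "$file" "$ip" "$name"
  done <<'EOF'
192.168.59.62 raclhr-21c-n1
192.168.59.63 raclhr-21c-n2
192.168.2.62 raclhr-21c-n1-priv
192.168.2.63 raclhr-21c-n2-priv
192.168.59.64 raclhr-21c-n1-vip
192.168.59.65 raclhr-21c-n2-vip
192.168.59.66 raclhr-21c-scan
192.168.59.67 raclhr-21c-scan
192.168.59.68 raclhr-21c-scan
EOF
}
```

Run `add_rac_hosts /etc/hosts` as root on both nodes. Running it again changes nothing.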

2.8 Add Groups and Users

groupadd -g 54321 oinstall

groupadd -g 54322 dba

groupadd -g 54323 oper

groupadd -g 54324 backupdba

groupadd -g 54325 dgdba

groupadd -g 54326 kmdba

groupadd -g 54327 asmdba

groupadd -g 54328 asmoper

groupadd -g 54329 asmadmin

groupadd -g 54330 racdba

useradd -u 54321 -g oinstall -G dba,asmdba,backupdba,dgdba,kmdba,racdba,oper oracle

useradd -u 54322 -g oinstall -G asmadmin,asmdba,asmoper,dba,racdba grid

echo lhr | passwd --stdin oracle

echo lhr | passwd --stdin grid
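After you create the users, you can compare their group memberships with the `useradd -G` lists above. This is a sketch. `has_groups` is a hypothetical helper that takes the output of `id` and reports any group name missing from it.

```bash
#!/usr/bin/env bash
# has_groups ID_OUTPUT GROUP...
# Succeeds if every GROUP appears as "(GROUP)" in the given `id` output;
# prints each missing group to stderr.
has_groups() {
  local id_out=$1 g missing=0
  shift
  for g in "$@"; do
    if [[ $id_out != *"($g)"* ]]; then
      echo "missing group: $g" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Both of these should return 0:

- `has_groups "$(id oracle)" oinstall dba oper backupdba dgdba kmdba asmdba racdba`
- `has_groups "$(id grid)" oinstall asmadmin asmdba asmoper dba racdba`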

2.9、创建安装目录

2.9.1、挂载/u01目录

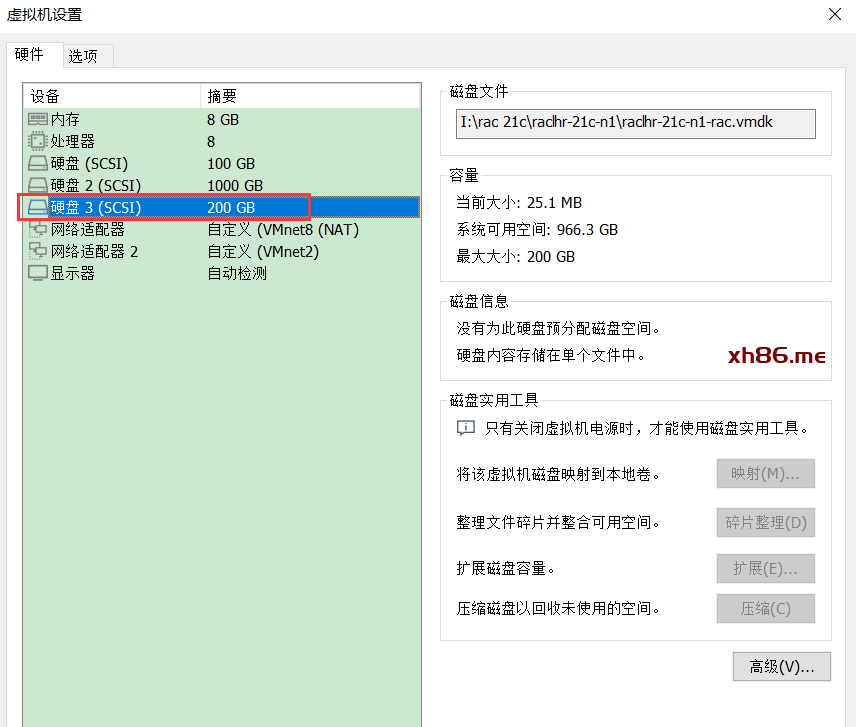

首先,在2个节点都各自添加一块200g大小的磁盘:

然后做卷组,200g磁盘大约分10个PE,每个PE大约20g:

[root@raclhr-21c-n1 ~]# fdisk -l | grep sd

Disk /dev/sdb: 1073.7 GB, 1073741824000 bytes, 2097152000 sectors

/dev/sdb1 2048 209717247 104857600 8e Linux LVM

/dev/sdb2 209717248 419432447 104857600 8e Linux LVM

/dev/sdb3 419432448 629147647 104857600 8e Linux LVM

/dev/sdb4 629147648 2097151999 734002176 5 Extended

/dev/sdb5 629149696 838864895 104857600 8e Linux LVM

/dev/sdb6 838866944 1048582143 104857600 8e Linux LVM

/dev/sdb7 1048584192 1258299391 104857600 8e Linux LVM

/dev/sdb8 1258301440 1468016639 104857600 8e Linux LVM

/dev/sdb9 1468018688 1677733887 104857600 8e Linux LVM

/dev/sdb10 1677735936 1887451135 104857600 8e Linux LVM

/dev/sdb11 1887453184 2097151999 104849408 8e Linux LVM

Disk /dev/sda: 107.4 GB, 107374182400 bytes, 209715200 sectors

/dev/sda1 * 2048 2099199 1048576 83 Linux

/dev/sda2 2099200 180248575 89074688 8e Linux LVM

Disk /dev/sdc: 214.7 GB, 214748364800 bytes, 419430400 sectors

[root@raclhr-21c-n1 ~]# fdisk /dev/sdc

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x6d06be8a.

Command (m for help): m

Command action

a toggle a bootable flag

b edit bsd disklabel

c toggle the dos compatibility flag

d delete a partition

g create a new empty GPT partition table

G create an IRIX (SGI) partition table

l list known partition types

m print this menu

n add a new partition

o create a new empty DOS partition table

p print the partition table

q quit without saving changes

s create a new empty Sun disklabel

t change a partition's system id

u change display/entry units

v verify the partition table

w write table to disk and exit

x extra functionality (experts only)

Command (m for help): l

0 Empty 24 NEC DOS 81 Minix / old Lin bf Solaris

1 FAT12 27 Hidden NTFS Win 82 Linux swap / So c1 DRDOS/sec (FAT-

2 XENIX root 39 Plan 9 83 Linux c4 DRDOS/sec (FAT-

3 XENIX usr 3c PartitionMagic 84 OS/2 hidden C: c6 DRDOS/sec (FAT-

4 FAT16 <32M 40 Venix 80286 85 Linux extended c7 Syrinx

5 Extended 41 PPC PReP Boot 86 NTFS volume set da Non-FS data

6 FAT16 42 SFS 87 NTFS volume set db CP/M / CTOS / .

7 HPFS/NTFS/exFAT 4d QNX4.x 88 Linux plaintext de Dell Utility

8 AIX 4e QNX4.x 2nd part 8e Linux LVM df BootIt

9 AIX bootable 4f QNX4.x 3rd part 93 Amoeba e1 DOS access

a OS/2 Boot Manag 50 OnTrack DM 94 Amoeba BBT e3 DOS R/O

b W95 FAT32 51 OnTrack DM6 Aux 9f BSD/OS e4 SpeedStor

c W95 FAT32 (LBA) 52 CP/M a0 IBM Thinkpad hi eb BeOS fs

e W95 FAT16 (LBA) 53 OnTrack DM6 Aux a5 FreeBSD ee GPT

f W95 Ext'd (LBA) 54 OnTrackDM6 a6 OpenBSD ef EFI (FAT-12/16/

10 OPUS 55 EZ-Drive a7 NeXTSTEP f0 Linux/PA-RISC b

11 Hidden FAT12 56 Golden Bow a8 Darwin UFS f1 SpeedStor

12 Compaq diagnost 5c Priam Edisk a9 NetBSD f4 SpeedStor

14 Hidden FAT16 <3 61 SpeedStor ab Darwin boot f2 DOS secondary

16 Hidden FAT16 63 GNU HURD or Sys af HFS / HFS+ fb VMware VMFS

17 Hidden HPFS/NTF 64 Novell Netware b7 BSDI fs fc VMwareVMKCORE

18 AST SmartSleep 65 Novell Netware b8 BSDI swap fd Linux raid auto

1b Hidden W95 FAT3 70 DiskSecure Mult bb Boot Wizard hid fe LANstep

1c Hidden W95 FAT3 75 PC/IX be Solaris boot ff BBT

1e Hidden W95 FAT1 80 Old Minix

Command (m for help): p

Disk /dev/sdc: 214.7 GB, 214748364800 bytes, 419430400 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x6d06be8a

Device Boot Start End Blocks Id System

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-419430399, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-419430399, default 419430399): +20G

Partition 1 of type Linux and of size 20 GiB is set

Command (m for help): n

Partition type:

p primary (1 primary, 0 extended, 3 free)

e extended

Select (default p):

Using default response p

Partition number (2-4, default 2):

First sector (41945088-419430399, default 41945088):

Using default value 41945088

Last sector, +sectors or +size{K,M,G} (41945088-419430399, default 419430399): +20G

Partition 2 of type Linux and of size 20 GiB is set

Command (m for help): n

Partition type:

p primary (2 primary, 0 extended, 2 free)

e extended

Select (default p):

Using default response p

Partition number (3,4, default 3):

First sector (83888128-419430399, default 83888128):

Using default value 83888128

Last sector, +sectors or +size{K,M,G} (83888128-419430399, default 419430399): +20G

Partition 3 of type Linux and of size 20 GiB is set

Command (m for help): n

Partition type:

p primary (3 primary, 0 extended, 1 free)

e extended

Select (default e):

Using default response e

Selected partition 4

First sector (125831168-419430399, default 125831168):

Using default value 125831168

Last sector, +sectors or +size{K,M,G} (125831168-419430399, default 419430399):

Using default value 419430399

Partition 4 of type Extended and of size 140 GiB is set

Command (m for help): n

All primary partitions are in use

Adding logical partition 5

First sector (125833216-419430399, default 125833216):

Using default value 125833216

Last sector, +sectors or +size{K,M,G} (125833216-419430399, default 419430399): +20G

Partition 5 of type Linux and of size 20 GiB is set

Command (m for help): n

All primary partitions are in use

Adding logical partition 6

First sector (167778304-419430399, default 167778304):

Using default value 167778304

Last sector, +sectors or +size{K,M,G} (167778304-419430399, default 419430399): +20G

Partition 6 of type Linux and of size 20 GiB is set

Command (m for help): n

All primary partitions are in use

Adding logical partition 7

First sector (209723392-419430399, default 209723392):

Using default value 209723392

Last sector, +sectors or +size{K,M,G} (209723392-419430399, default 419430399): +20G

Partition 7 of type Linux and of size 20 GiB is set

Command (m for help): n

All primary partitions are in use

Adding logical partition 8

First sector (251668480-419430399, default 251668480):

Using default value 251668480

Last sector, +sectors or +size{K,M,G} (251668480-419430399, default 419430399): +20G

Partition 8 of type Linux and of size 20 GiB is set

Command (m for help): n

All primary partitions are in use

Adding logical partition 9

First sector (293613568-419430399, default 293613568):

Using default value 293613568

Last sector, +sectors or +size{K,M,G} (293613568-419430399, default 419430399): +20G

Partition 9 of type Linux and of size 20 GiB is set

Command (m for help): n

All primary partitions are in use

Adding logical partition 10

First sector (335558656-419430399, default 335558656):

Using default value 335558656

Last sector, +sectors or +size{K,M,G} (335558656-419430399, default 419430399): +20G

Partition 10 of type Linux and of size 20 GiB is set

Command (m for help): n

All primary partitions are in use

Adding logical partition 11

First sector (377503744-419430399, default 377503744):

Using default value 377503744

Last sector, +sectors or +size{K,M,G} (377503744-419430399, default 419430399): +20G

Value out of range.

Last sector, +sectors or +size{K,M,G} (377503744-419430399, default 419430399):

Using default value 419430399

Partition 11 of type Linux and of size 20 GiB is set

Command (m for help): p

Disk /dev/sdc: 214.7 GB, 214748364800 bytes, 419430400 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x6d06be8a

Device Boot Start End Blocks Id System

/dev/sdc1 2048 41945087 20971520 83 Linux

/dev/sdc2 41945088 83888127 20971520 83 Linux

/dev/sdc3 83888128 125831167 20971520 83 Linux

/dev/sdc4 125831168 419430399 146799616 5 Extended

/dev/sdc5 125833216 167776255 20971520 83 Linux

/dev/sdc6 167778304 209721343 20971520 83 Linux

/dev/sdc7 209723392 251666431 20971520 83 Linux

/dev/sdc8 251668480 293611519 20971520 83 Linux

/dev/sdc9 293613568 335556607 20971520 83 Linux

/dev/sdc10 335558656 377501695 20971520 83 Linux

/dev/sdc11 377503744 419430399 20963328 83 Linux

Command (m for help): t

Partition number (1-11, default 11): 1

Hex code (type L to list all codes): 8e

Changed type of partition 'Linux' to 'Linux LVM'

Command (m for help): t

Partition number (1-11, default 11): 2

Hex code (type L to list all codes): 8e

Changed type of partition 'Linux' to 'Linux LVM'

Command (m for help): t

Partition number (1-11, default 11): 3

Hex code (type L to list all codes): 8e

Changed type of partition 'Linux' to 'Linux LVM'

Command (m for help): t

Partition number (1-11, default 11): 5

Hex code (type L to list all codes): 8e

Changed type of partition 'Linux' to 'Linux LVM'

Command (m for help): t

Partition number (1-11, default 11): 6

Hex code (type L to list all codes): 8e

Changed type of partition 'Linux' to 'Linux LVM'

Command (m for help): t

Partition number (1-11, default 11): 7

Hex code (type L to list all codes): 8e

Changed type of partition 'Linux' to 'Linux LVM'

Command (m for help): t

Partition number (1-11, default 11): 8

Hex code (type L to list all codes): 8e

Changed type of partition 'Linux' to 'Linux LVM'

Command (m for help): t

Partition number (1-11, default 11): 9

Hex code (type L to list all codes): 8e

Changed type of partition 'Linux' to 'Linux LVM'

Command (m for help): t

Partition number (1-11, default 11): 10

Hex code (type L to list all codes): 8e

Changed type of partition 'Linux' to 'Linux LVM'

Command (m for help): t

Partition number (1-11, default 11):

Hex code (type L to list all codes): 8e

Changed type of partition 'Empty' to 'Linux LVM'

Command (m for help): p

Disk /dev/sdc: 214.7 GB, 214748364800 bytes, 419430400 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x6d06be8a

Device Boot Start End Blocks Id System

/dev/sdc1 2048 41945087 20971520 8e Linux LVM

/dev/sdc2 41945088 83888127 20971520 8e Linux LVM

/dev/sdc3 83888128 125831167 20971520 8e Linux LVM

/dev/sdc4 125831168 419430399 146799616 5 Extended

/dev/sdc5 125833216 167776255 20971520 8e Linux LVM

/dev/sdc6 167778304 209721343 20971520 8e Linux LVM

/dev/sdc7 209723392 251666431 20971520 8e Linux LVM

/dev/sdc8 251668480 293611519 20971520 8e Linux LVM

/dev/sdc9 293613568 335556607 20971520 8e Linux LVM

/dev/sdc10 335558656 377501695 20971520 8e Linux LVM

/dev/sdc11 377503744 419430399 20963328 8e Linux LVM

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.
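The sector numbers in the listing follow from simple arithmetic. With 512-byte sectors, +20G (20 GiB) is 20 * 1024^3 / 512 = 41943040 sectors. A partition starting at sector 2048 therefore ends at 2048 + 41943040 - 1 = 41945087, as shown for /dev/sdc1.

The listing also shows a 2048-sector (1 MiB) gap before each logical partition, which holds its extended boot record. For example, /dev/sdc5 starts at 125831168 + 2048 = 125833216.

The hypothetical `part_end` helper below reproduces the end-sector calculation.

```bash
#!/usr/bin/env bash
# part_end START_SECTOR SIZE_GIB [SECTOR_BYTES]
# Prints the last sector of a partition that starts at START_SECTOR and is
# SIZE_GIB GiB long, i.e. what fdisk computes for "+<SIZE>G".
part_end() {
  local start=$1 gib=$2 bytes=${3:-512}
  echo $(( start + gib * 1024 * 1024 * 1024 / bytes - 1 ))
}

# part_end 2048 20      -> end of /dev/sdc1
# part_end 125833216 20 -> end of /dev/sdc5 (first logical partition)
```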

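After partitioning, create the physical volumes, the volume group and the logical volume, then create the file system and mount it: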

pvcreate /dev/sdc1 /dev/sdc2 /dev/sdc3 /dev/sdc5 /dev/sdc6 /dev/sdc7 /dev/sdc8 /dev/sdc9 /dev/sdc10 /dev/sdc11

vgcreate vg_oracle /dev/sdc1 /dev/sdc2 /dev/sdc3 /dev/sdc5 /dev/sdc6 /dev/sdc7 /dev/sdc8 /dev/sdc9 /dev/sdc10 /dev/sdc11

lvcreate -n lv_orasoft_u01 -L 60G vg_oracle

mkfs.ext4 /dev/vg_oracle/lv_orasoft_u01

mkdir /u01

mount /dev/vg_oracle/lv_orasoft_u01 /u01

echo "/dev/vg_oracle/lv_orasoft_u01 /u01 ext4 defaults 0 0" >> /etc/fstab

Verify:

[root@raclhr-21c-n1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 3.9G 0 3.9G 0% /dev

tmpfs 3.9G 0 3.9G 0% /dev/shm

tmpfs 3.9G 13M 3.9G 1% /run

tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/mapper/centos_lhrdocker-root 50G 5.0G 42G 11% /

/dev/sda1 976M 143M 767M 16% /boot

/dev/mapper/centos_lhrdocker-home 9.8G 41M 9.2G 1% /home

/dev/mapper/vg_docker-lv_docker 788G 73M 748G 1% /var/lib/docker

tmpfs 797M 12K 797M 1% /run/user/42

tmpfs 797M 0 797M 0% /run/user/0

/dev/mapper/vg_oracle-lv_orasoft_u01 59G 53M 56G 1% /u01

2.9.2 Create the Directories

mkdir -p /u01/app/21.3.0/grid

mkdir -p /u01/app/grid

mkdir -p /u01/app/oracle

mkdir -p /u01/app/oracle/product/21.3.0/dbhome_1

chown -R grid:oinstall /u01

chown -R oracle:oinstall /u01/app/oracle

chmod -R 775 /u01/

mkdir -p /u01/app/oraInventory

chown -R grid:oinstall /u01/app/oraInventory

chmod -R 775 /u01/app/oraInventory

2.10 Configure Environment Variables for the grid and oracle Users

oracle user:

cat >> /home/oracle/.bash_profile <<"EOF"

umask 022

export ORACLE_SID=rac21c1

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/21.3.0/dbhome_1

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS"

export TMP=/tmp

export TMPDIR=$TMP

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH

export EDITOR=vi

export TNS_ADMIN=$ORACLE_HOME/network/admin

export ORACLE_PATH=.:$ORACLE_BASE/dba_scripts/sql:$ORACLE_HOME/rdbms/admin

export SQLPATH=$ORACLE_HOME/sqlplus/admin

#export NLS_LANG="SIMPLIFIED CHINESE_CHINA.ZHS16GBK"   # or AL32UTF8; check the database with: SELECT userenv('LANGUAGE') db_NLS_LANG FROM DUAL;

export NLS_LANG="AMERICAN_CHINA.ZHS16GBK"

alias sqlplus='rlwrap sqlplus'

alias rman='rlwrap rman'

alias asmcmd='rlwrap asmcmd'

alias dgmgrl='rlwrap dgmgrl'

alias sas='sqlplus / as sysdba'

EOF

grid user:

cat >> /home/grid/.bash_profile <<"EOF"

umask 022

export ORACLE_SID=+ASM1

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/21.3.0/grid

export LD_LIBRARY_PATH=$ORACLE_HOME/lib

export NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS"

export PATH=$ORACLE_HOME/bin:$PATH

alias sqlplus='rlwrap sqlplus'

alias asmcmd='rlwrap asmcmd'

alias dgmgrl='rlwrap dgmgrl'

alias sas='sqlplus / as sysdba'

EOF

Note: on the other node, change the instance names accordingly:

Oracle: export ORACLE_SID=rac21c2

grid: export ORACLE_SID=+ASM2
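The profiles on the two nodes differ only in ORACLE_SID, so the per-node value can be derived from the hostname. This sketch assumes hostnames end in -n<number>, as in this plan. `node_number` and `set_sids` are hypothetical helpers; `set_sids` edits the profile files passed to it in place with `sed -i`.

```bash
#!/usr/bin/env bash
# node_number HOSTNAME: print the digits after the trailing "-n"
# (raclhr-21c-n2 -> 2); fails if the name does not end that way.
node_number() {
  local re='-n([0-9]+)$'
  if [[ $1 =~ $re ]]; then
    echo "${BASH_REMATCH[1]}"
  else
    return 1
  fi
}

# set_sids NODE_NUMBER ORACLE_PROFILE GRID_PROFILE
# Rewrites ORACLE_SID to rac21c<N> and +ASM<N> in the given files.
set_sids() {
  local n=$1 ora=$2 grd=$3
  sed -i "s/^export ORACLE_SID=rac21c[0-9]*$/export ORACLE_SID=rac21c$n/" "$ora"
  sed -i "s/^export ORACLE_SID=+ASM[0-9]*$/export ORACLE_SID=+ASM$n/" "$grd"
}
```

On node 2, as root, run `set_sids "$(node_number "$(hostname -s)")" /home/oracle/.bash_profile /home/grid/.bash_profile`.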

2.11 Configure Environment Variables for the root User

cat >> /etc/profile <<"EOF"

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/21.3.0/grid

export GRID_BASE=$ORACLE_BASE

export GRID_HOME=$ORACLE_HOME

export PATH=$PATH:$ORACLE_HOME/bin

EOF

2.12 Install Dependency Packages

Install the commonly used packages:

yum install -y openssh-clients openssh-server initscripts net-tools telnet which wget \

passwd e4fsprogs lrzsz sudo unzip lvm2 tree traceroute lsof file tar systemd \

bridge-utils mlocate mailx strace less mmv stress

yum install -y dos2unix rlwrap xdpyinfo xorg-x11-apps nmap numactl numactl-devel \

iproute rsyslog bash-completion tmux sysbench vim redhat-lsb smartmontools xinetd \

gcc make sysstat ksh binutils socat cmake automake autoconf bzr bison libtool deltarpm \

rsync libev pv subversion nload gnuplot jq oniguruma yum-fastestmirror net-snmp net-snmp-utils \

nfs-utils rpcbind postfix dovecot bind-utils bind bind-chroot dnsmasq haproxy keepalived bzr \

fio bzip2 ntp flex lftp

yum install -y ncurses-devel libgcrypt-devel libaio libaio-devel \

perl perl-Env perl-devel perl-Time-HiRes perl-DBD-MySQL perl-ExtUtils-MakeMaker perl-TermReadKey \

perl-Config-Tiny perl-Email-Date-Format perl-Log-Dispatch perl-Mail-Sender perl-Mail-Sendmail \

perl-MIME-Lite perl-Parallel-ForkManager perl-Digest-MD5 perl-ExtUtils-CBuilder perl-IO-Socket-SSL \

perl-JSON openssl-devel libverto-devel libsepol-devel libselinux-devel libkadm5 keyutils-libs-devel \

krb5-devel libcom_err-devel cyrus-sasl* perl-DBD-Pg perf slang perl-DBI perl-CPAN \

perl-ExtUtils-eBuilder cpan perl-tests

yum install -y compat-libstdc++-33 gcc-c++ glibc glibc-common glibc.i686 glibc-devel glibc-devel.i686 \

libgcc libgcc.i686 libstdc++ libstdc++-devel libaio.i686 libaio-devel.i686 \

libXext libXext.i686 libXtst libXtst.i686 libX11 libX11.i686 libXau libXau.i686 \

libxcb libxcb.i686 libXi libXi.i686 unixODBC unixODBC-devel zlib-devel zlib-devel.i686 \

compat-libcap1 libXp libXp-devel libXp.i686 elfutils-libelf elfutils-libelf-devel compat-db \

gnome-libs pdksh xscreensaver fontconfig-devel libXrender-devel

yum remove PackageKit -y

Check that the required packages are installed. Each line printed is a missing package:

rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' binutils \

compat-libcap1 \

compat-libstdc++-33 \

gcc \

gcc-c++ \

glibc \

glibc-devel \

ksh \

libgcc \

libstdc++ \

libstdc++-devel \

libaio \

libaio-devel \

libXext \

libXtst \

libX11 \

libXau \

libxcb \

libXi \

make \

elfutils-libelf-devel \

sysstat | grep "not installed"

2.13 Configure Kernel Parameters

2.13.1 Edit /etc/sysctl.conf

cat >> /etc/sysctl.conf <<"EOF"

vm.swappiness = 1

vm.dirty_background_ratio = 3

vm.dirty_ratio = 80

vm.dirty_expire_centisecs = 500

vm.dirty_writeback_centisecs = 100

kernel.shmmni = 4096

kernel.shmall = 1073741824

kernel.shmmax = 4398046511104

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max=1048576

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.panic_on_oops = 1

kernel.watchdog_thresh=30

EOF

Apply the settings:

/sbin/sysctl -p
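kernel.shmall is counted in pages, while kernel.shmmax is in bytes. The two values above are therefore consistent only if shmall multiplied by the page size is at least shmmax. With 4 KiB pages, 1073741824 * 4096 = 4398046511104 bytes (4 TiB), which is exactly the configured shmmax.

The sketch below performs that check. It uses a hypothetical `check_shm` helper and takes the page size from `getconf PAGE_SIZE`.

```bash
#!/usr/bin/env bash
# check_shm SHMALL_PAGES SHMMAX_BYTES [PAGE_SIZE]
# shmall (pages) * page size is the total shared memory allowed; it should
# not be smaller than shmmax, the largest single segment in bytes.
check_shm() {
  local shmall=$1 shmmax=$2 page=${3:-$(getconf PAGE_SIZE)}
  local total=$(( shmall * page ))
  echo "shmall = $shmall pages x $page bytes = $total bytes; shmmax = $shmmax bytes"
  (( total >= shmmax ))
}
```

After `sysctl -p`, run `check_shm "$(sysctl -n kernel.shmall)" "$(sysctl -n kernel.shmmax)"`.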

2.13.2 Edit /etc/security/limits.conf

cat >> /etc/security/limits.conf <<"EOF"

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240

grid hard stack 32768

grid soft nproc 2047

grid hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

oracle hard stack 32768

oracle soft nproc 2047

oracle hard nproc 16384

oracle hard memlock 8145728

oracle soft memlock 8145728

root soft nproc 2047

EOF
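Re-running this step (or 2.13.3 and 2.16) appends the same lines again. Below is a sketch of an idempotent alternative. The hypothetical `append_once` helper appends a line only when an identical line (exact whole-line match) is not already in the file. Entries that differ only in spacing are treated as different.

```bash
#!/usr/bin/env bash
# append_once FILE LINE
# Appends LINE to FILE only if an identical line is not already present.
append_once() {
  local file=$1 line=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Examples:
# append_once /etc/security/limits.conf "grid soft nofile 1024"
# append_once /etc/pam.d/login "session required pam_limits.so"
# append_once /etc/sysconfig/network "NOZEROCONF=yes"
```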

2.13.3 Edit /etc/pam.d/login

echo "session required pam_limits.so" >> /etc/pam.d/login

2.13.4 Edit /etc/profile

cat >> /etc/profile <<"EOF"

if [ "$USER" = "oracle" ] || [ "$USER" = "grid" ]; then

if [ "$SHELL" = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

EOF

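2.14 Disable the NTP and chrony Services

You can use the operating system's NTP service or Oracle's own Cluster Time Synchronization Service (CTSS). If NTP is not enabled, Oracle automatically starts its own ctssd process.

Starting with Oracle 11gR2 RAC, CTSS synchronizes time across the nodes. If the installer finds that NTP is inactive, CTSS is installed in active mode and synchronizes the time on all nodes. If NTP is configured, CTSS starts in observer mode, and Oracle Clusterware does not actively synchronize time in the cluster.

systemctl stop ntpd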

systemctl disable ntpd.service

mv /etc/ntp.conf /etc/ntp.conf.bak

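Chrony is open-source free software that keeps the system clock synchronized with time servers (NTP). It consists of two programs, chronyd and chronyc.

- chronyd is a background daemon that adjusts the kernel's system clock to stay in sync with the time servers. It measures the rate at which the computer gains or loses time and compensates for it.
- chronyc provides a user interface for monitoring performance and changing the configuration. It can run on the machine controlled by the chronyd instance or on a different, remote machine.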

systemctl disable chronyd

systemctl stop chronyd

mv /etc/chrony.conf /etc/chrony.conf_bak
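Following the reasoning above, CTSS should find no NTP configuration so that it starts in active mode. Below is a sketch of a pre-check. It assumes, as the commands above do, that the presence of /etc/ntp.conf and /etc/chrony.conf is what matters. Whether chrony.conf is consulted may depend on the Grid Infrastructure version. `ctss_precheck` is a hypothetical helper; its optional root argument exists only so you can try it on a scratch directory.

```bash
#!/usr/bin/env bash
# ctss_precheck [ROOT]
# Lists NTP/chrony configuration files that still exist under ROOT
# (default: the real root). Returns 1 if any remain.
ctss_precheck() {
  local root=${1:-} f rc=0
  for f in etc/ntp.conf etc/chrony.conf; do
    if [ -e "$root/$f" ]; then
      echo "still present: $root/$f"
      rc=1
    fi
  done
  return "$rc"
}
```

Run `ctss_precheck` on both nodes. `systemctl is-active ntpd chronyd` should not report either unit as active. After Grid Infrastructure is installed, `crsctl check ctss` reports which mode CTSS is running in.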

2.15 Disable avahi-daemon

systemctl stop avahi-daemon

systemctl disable avahi-daemon

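Avahi lets programs publish and discover services and hosts on a local network without manual network configuration. For example, when a user plugs a computer running Avahi into a LAN, Avahi broadcasts automatically and discovers available printers, shared files and other users to chat with. It is somewhat like receiving a stream of network advertisements from the LAN.

On Linux, the daemon process that actually runs is named avahi-daemon.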

2.16 Add NOZEROCONF=yes to /etc/sysconfig/network

echo 'NOZEROCONF=yes' >> /etc/sysconfig/network

2.17 Disable Transparent HugePages

Reference: https://www.xmmup.com/linux-biaozhundayehetoumingdaye.html

cat >> /etc/rc.local <<"EOF"

if test -f /sys/kernel/mm/transparent_hugepage/enabled; then

echo never > /sys/kernel/mm/transparent_hugepage/enabled

fi

if test -f /sys/kernel/mm/transparent_hugepage/defrag; then

echo never > /sys/kernel/mm/transparent_hugepage/defrag

fi

EOF

chmod +x /etc/rc.d/rc.local

sh /etc/rc.local

cat /sys/kernel/mm/transparent_hugepage/defrag

cat /sys/kernel/mm/transparent_hugepage/enabled
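The files under /sys/kernel/mm/transparent_hugepage list all modes and put the active one in square brackets, for example `always madvise [never]`. The sketch below extracts the bracketed value and checks both files. `thp_mode` and `thp_disabled` are hypothetical helpers, and the directory argument defaults to the CentOS 7 path used above.

```bash
#!/usr/bin/env bash
# thp_mode TEXT: print the bracketed (active) value, e.g. "never".
thp_mode() {
  local re='\[([a-z+]+)\]'
  if [[ $1 =~ $re ]]; then
    echo "${BASH_REMATCH[1]}"
  else
    return 1
  fi
}

# thp_disabled [DIR]: succeed when both "enabled" and "defrag" are [never].
thp_disabled() {
  local dir=${1:-/sys/kernel/mm/transparent_hugepage} f mode
  for f in enabled defrag; do
    [ -r "$dir/$f" ] || continue
    mode=$(thp_mode "$(cat "$dir/$f")") || mode=unknown
    if [ "$mode" != never ]; then
      echo "$dir/$f is [$mode], expected [never]" >&2
      return 1
    fi
  done
  return 0
}
```

Run `thp_disabled` after a reboot to confirm that the rc.local entries took effect.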

Configuring HugePages is recommended; see: https://www.xmmup.com/oracleshujukupeizhidaye.html

2.18 Stop Unused Services

systemctl list-unit-files | grep enable

systemctl stop autofs

systemctl stop nfslock

systemctl stop rpcidmapd

systemctl stop rpcgssd

systemctl stop ntpd

systemctl stop bluetooth.service

systemctl stop cups.path

systemctl stop cups.socket

systemctl stop postfix.service

systemctl stop rpcbind.service

systemctl stop rpcbind.socket

systemctl stop NetworkManager-dispatcher.service

systemctl stop dbus-org.freedesktop.NetworkManager.service

systemctl stop abrt-ccpp.service

systemctl stop cups.service

systemctl stop libvirtd

systemctl disable autofs

systemctl disable nfslock

systemctl disable rpcidmapd

systemctl disable rpcgssd

systemctl disable ntpd

systemctl disable bluetooth.service

systemctl disable cups.path

systemctl disable cups.socket

systemctl disable postfix.service

systemctl disable rpcbind.service

systemctl disable rpcbind.socket

systemctl disable NetworkManager-dispatcher.service

systemctl disable dbus-org.freedesktop.NetworkManager.service

systemctl disable abrt-ccpp.service

systemctl disable cups.service

systemctl disable libvirtd
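The stop and disable commands above can be driven from a single list. In this sketch, `stop_unused_services` is a hypothetical wrapper around the same `systemctl` calls; with DRY_RUN=1 it only prints them. Some unit names (for example the NFS-related ones) may not exist on every release, so errors from individual units are ignored rather than aborting the loop.

```bash
#!/usr/bin/env bash
# stop_unused_services: stop and disable every unit in the list.
# Set DRY_RUN=1 to print the systemctl commands instead of running them.
stop_unused_services() {
  local svc
  local services=(
    autofs nfslock rpcidmapd rpcgssd ntpd bluetooth.service
    cups.path cups.socket cups.service postfix.service
    rpcbind.service rpcbind.socket NetworkManager-dispatcher.service
    dbus-org.freedesktop.NetworkManager.service abrt-ccpp.service libvirtd
  )
  for svc in "${services[@]}"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "systemctl stop $svc"
      echo "systemctl disable $svc"
    else
      # Ignore units that are missing on this system
      systemctl stop "$svc" 2>/dev/null || true
      systemctl disable "$svc" 2>/dev/null || true
    fi
  done
}
```

Preview the commands with `DRY_RUN=1 stop_unused_services`, then run `stop_unused_services` as root.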

2.19 Speed Up Boot and Shutdown

On CentOS, boot or shutdown can hang for a long time with the message: A stop job is running for ……

Fix:

cat >> /etc/systemd/system.conf <<"EOF"

DefaultTimeoutStartSec=5s

DefaultTimeoutStopSec=5s

EOF

systemctl daemon-reload

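III. Configure Shared Storage

This is the key step and the one most prone to errors. Shared storage can be provided in several ways:

- third-party software (for example Openfiler)
- shared disks in VMware Workstation
- an iSCSI network storage service

Here we simulate shared storage with iSCSI plus udev, exporting the extra disk space on node 2 as shared storage.

3.1 Server Configuration

The server here is node 2.

3.1.1 Create LVM Volumes

Run on node 2: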

lvcreate -L 1g -n lv_ocr1 vg_oracle

lvcreate -L 1g -n lv_ocr2 vg_oracle

lvcreate -L 1g -n lv_ocr3 vg_oracle

lvcreate -L 10g -n lv_mgmt1 vg_oracle

lvcreate -L 10g -n lv_mgmt2 vg_oracle

lvcreate -L 10g -n lv_mgmt3 vg_oracle

lvcreate -L 15g -n lv_data1 vg_oracle

lvcreate -L 15g -n lv_data2 vg_oracle

lvcreate -L 15g -n lv_data3 vg_oracle

lvcreate -L 10g -n lv_fra1 vg_oracle

lvcreate -L 10g -n lv_fra2 vg_oracle

lvcreate -L 10g -n lv_fra3 vg_oracle

3.1.2 Install targetd and targetcli with yum

Run on node 2:

yum -y install targetd targetcli

systemctl start target

systemctl enable target

systemctl status target

systemctl list-unit-files|grep target.service

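Also confirm that the target service is running and enabled at boot.

3.1.3 Create Block Devices with targetcli

Start targetcli, cd to /backstores/block, and create the block storage objects: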

targetcli

ls

cd /backstores/block

create ocr1 /dev/vg_oracle/lv_ocr1

create ocr2 /dev/vg_oracle/lv_ocr2

create ocr3 /dev/vg_oracle/lv_ocr3

create mgmt1 /dev/vg_oracle/lv_mgmt1

create mgmt2 /dev/vg_oracle/lv_mgmt2

create mgmt3 /dev/vg_oracle/lv_mgmt3

create data1 /dev/vg_oracle/lv_data1

create data2 /dev/vg_oracle/lv_data2

create data3 /dev/vg_oracle/lv_data3

create fra1 /dev/vg_oracle/lv_fra1

create fra2 /dev/vg_oracle/lv_fra2

create fra3 /dev/vg_oracle/lv_fra3
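The lvcreate list in 3.1.1 and the targetcli list above both derive from the layout in 1.4 (three LUNs per disk group). The sketch below generates both lists from that layout so you can review them before running them. `gen_storage_cmds` is a hypothetical helper. It assumes targetcli accepts a path and a command on its command line (one-shot mode), with the same positional `create <name> <device>` arguments used interactively above. One-shot changes still need to be saved, as in 3.1.7.

```bash
#!/usr/bin/env bash
# gen_storage_cmds [VG]
# Prints lvcreate + targetcli commands for the OCR/MGMT/DATA/FRA layout
# (3 LUNs per group) and a trailing comment with the total size.
gen_storage_cmds() {
  local vg=${1:-vg_oracle} spec group size i total=0
  # group:size-per-LUN-in-GiB
  for spec in ocr:1 mgmt:10 data:15 fra:10; do
    group=${spec%%:*}
    size=${spec##*:}
    for i in 1 2 3; do
      echo "lvcreate -L ${size}g -n lv_${group}${i} $vg"
      echo "targetcli /backstores/block create ${group}${i} /dev/$vg/lv_${group}${i}"
      total=$(( total + size ))
    done
  done
  echo "# total: ${total}G"
}
```

To use it:

1. Run `gen_storage_cmds vg_oracle > storage.sh`.
2. Review the file.
3. Run `bash storage.sh` on node 2.

The trailing comment reports the total size, 108G, which must fit in the free space of vg_oracle.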

Session output:

[root@raclhr-21c-n2 ~]# targetcli

Warning: Could not load preferences file /root/.targetcli/prefs.bin.

targetcli shell version 2.1.53

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> ls

o- / ........................................................ [...]

o- backstores ............................................. [...]

| o- block ................................. [Storage Objects: 0]

| o- fileio ................................ [Storage Objects: 0]

| o- pscsi ................................. [Storage Objects: 0]

| o- ramdisk ............................... [Storage Objects: 0]

o- iscsi ............................................... [Targets: 0]

o- loopback ............................................ [Targets: 0]

/> cd /backstores/block

/backstores/block>

/backstores/block> create ocr1 /dev/vg_oracle/lv_ocr1

Created block storage object ocr1 using /dev/vg_oracle/lv_ocr1.

/backstores/block>

/backstores/block> create ocr2 /dev/vg_oracle/lv_ocr2

create ocr3 /dev/vg_oracle/lv_ocr3

Created block storage object ocr2 using /dev/vg_oracle/lv_ocr2.

/backstores/block> create ocr3 /dev/vg_oracle/lv_ocr3

create mgmt1 /dev/vg_oracle/lv_mgmt1

create mgmt2 /dev/vg_oracle/lv_mgmt2

Created block storage object ocr3 using /dev/vg_oracle/lv_ocr3.

/backstores/block>

/backstores/block> create mgmt1 /dev/vg_oracle/lv_mgmt1

Created block storage object mgmt1 using /dev/vg_oracle/lv_mgmt1.

/backstores/block> create mgmt2 /dev/vg_oracle/lv_mgmt2

create mgmt3 /dev/vg_oracle/lv_mgmt3

Created block storage object mgmt2 using /dev/vg_oracle/lv_mgmt2.

/backstores/block> create mgmt3 /dev/vg_oracle/lv_mgmt3

Created block storage object mgmt3 using /dev/vg_oracle/lv_mgmt3.

/backstores/block>

/backstores/block> create data1 /dev/vg_oracle/lv_data1

Created block storage object data1 using /dev/vg_oracle/lv_data1.

/backstores/block> create data2 /dev/vg_oracle/lv_data2

Created block storage object data2 using /dev/vg_oracle/lv_data2.

/backstores/block> create data3 /dev/vg_oracle/lv_data3

Created block storage object data3 using /dev/vg_oracle/lv_data3.

/backstores/block>

/backstores/block> create fra1 /dev/vg_oracle/lv_fra1

Created block storage object fra1 using /dev/vg_oracle/lv_fra1.

/backstores/block> create fra2 /dev/vg_oracle/lv_fra2

Created block storage object fra2 using /dev/vg_oracle/lv_fra2.

/backstores/block> create fra3 /dev/vg_oracle/lv_fra3

Created block storage object fra3 using /dev/vg_oracle/lv_fra3.

/backstores/block> ls

o- block ……………………………………………………………………………………….. [Storage Objects: 12]

o- data1 …………………………………………………….. [/dev/vg_oracle/lv_data1 (15.0GiB) write-thru deactivated]

| o- alua …………………………………………………………………………………………. [ALUA Groups: 1]

| o- default_tg_pt_gp ………………………………………………………………… [ALUA state: Active/optimized]

o- data2 …………………………………………………….. [/dev/vg_oracle/lv_data2 (15.0GiB) write-thru deactivated]

| o- alua …………………………………………………………………………………………. [ALUA Groups: 1]

| o- default_tg_pt_gp ………………………………………………………………… [ALUA state: Active/optimized]

o- data3 …………………………………………………….. [/dev/vg_oracle/lv_data3 (15.0GiB) write-thru deactivated]

| o- alua …………………………………………………………………………………………. [ALUA Groups: 1]

| o- default_tg_pt_gp ………………………………………………………………… [ALUA state: Active/optimized]

o- fra1 ………………………………………………………. [/dev/vg_oracle/lv_fra1 (10.0GiB) write-thru deactivated]

| o- alua …………………………………………………………………………………………. [ALUA Groups: 1]

| o- default_tg_pt_gp ………………………………………………………………… [ALUA state: Active/optimized]

o- fra2 ………………………………………………………. [/dev/vg_oracle/lv_fra2 (10.0GiB) write-thru deactivated]

| o- alua …………………………………………………………………………………………. [ALUA Groups: 1]

| o- default_tg_pt_gp ………………………………………………………………… [ALUA state: Active/optimized]

o- fra3 ………………………………………………………. [/dev/vg_oracle/lv_fra3 (10.0GiB) write-thru deactivated]

| o- alua …………………………………………………………………………………………. [ALUA Groups: 1]

| o- default_tg_pt_gp ………………………………………………………………… [ALUA state: Active/optimized]

o- mgmt1 …………………………………………………….. [/dev/vg_oracle/lv_mgmt1 (10.0GiB) write-thru deactivated]

| o- alua …………………………………………………………………………………………. [ALUA Groups: 1]

| o- default_tg_pt_gp ………………………………………………………………… [ALUA state: Active/optimized]

o- mgmt2 …………………………………………………….. [/dev/vg_oracle/lv_mgmt2 (10.0GiB) write-thru deactivated]

| o- alua …………………………………………………………………………………………. [ALUA Groups: 1]

| o- default_tg_pt_gp ………………………………………………………………… [ALUA state: Active/optimized]

o- mgmt3 …………………………………………………….. [/dev/vg_oracle/lv_mgmt3 (10.0GiB) write-thru deactivated]

| o- alua …………………………………………………………………………………………. [ALUA Groups: 1]

| o- default_tg_pt_gp ………………………………………………………………… [ALUA state: Active/optimized]

o- ocr1 ……………………………………………………….. [/dev/vg_oracle/lv_ocr1 (1.0GiB) write-thru deactivated]

| o- alua …………………………………………………………………………………………. [ALUA Groups: 1]

| o- default_tg_pt_gp ………………………………………………………………… [ALUA state: Active/optimized]

o- ocr2 ……………………………………………………….. [/dev/vg_oracle/lv_ocr2 (1.0GiB) write-thru deactivated]

| o- alua …………………………………………………………………………………………. [ALUA Groups: 1]

| o- default_tg_pt_gp ………………………………………………………………… [ALUA state: Active/optimized]

o- ocr3 ……………………………………………………….. [/dev/vg_oracle/lv_ocr3 (1.0GiB) write-thru deactivated]

o- alua …………………………………………………………………………………………. [ALUA Groups: 1]

o- default_tg_pt_gp ………………………………………………………………… [ALUA state: Active/optimized]

3.1.4. Create the IQN and LUNs with targetcli

Change to the /iscsi directory and create the IQN, then change to /iscsi/iqn.20…0be/tpg1/luns and create the LUNs. Reference commands:

cd /iscsi

create iqn.2021-08.xmmup.com:rac-21c-shared-disks

cd /iscsi/iqn.2021-08.xmmup.com:rac-21c-shared-disks/tpg1/luns

create /backstores/block/ocr1

create /backstores/block/ocr2

create /backstores/block/ocr3

create /backstores/block/mgmt1

create /backstores/block/mgmt2

create /backstores/block/mgmt3

create /backstores/block/data1

create /backstores/block/data2

create /backstores/block/data3

create /backstores/block/fra1

create /backstores/block/fra2

create /backstores/block/fra3
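The twelve backstores (created in section 3.1.3) and the twelve LUNs follow one naming pattern, so the commands can be generated instead of typed. This sketch only prints targetcli one-shot commands, in which targetcli takes a path and a command as arguments. The volume group, IQN and disk names are the ones used in this lab and are assumptions for any other setup. The creation order is what makes ocr1 become lun0 and fra3 become lun11.

```shell
#!/usr/bin/env bash
# Sketch: print (not run) the targetcli one-shot commands for this lab.
# VG, IQN and the disk names are copied from this section; adjust as needed.
VG=/dev/vg_oracle
IQN=iqn.2021-08.xmmup.com:rac-21c-shared-disks
# Creation order fixes the LUN numbers: ocr1 -> lun0 ... fra3 -> lun11.
NAMES=(ocr1 ocr2 ocr3 mgmt1 mgmt2 mgmt3 data1 data2 data3 fra1 fra2 fra3)

gen_targetcli_cmds() {
  local n
  # 1) one block backstore per LVM logical volume (section 3.1.3)
  for n in "${NAMES[@]}"; do
    echo "targetcli /backstores/block create $n $VG/lv_$n"
  done
  # 2) the target; targetcli also creates tpg1 and a default portal
  echo "targetcli /iscsi create $IQN"
  # 3) one LUN per backstore, in the same order
  for n in "${NAMES[@]}"; do
    echo "targetcli /iscsi/$IQN/tpg1/luns create /backstores/block/$n"
  done
}
```

Review the printed list first. To apply it, run `gen_targetcli_cmds | bash` as root, followed by `targetcli saveconfig`.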

Execution transcript:

/> cd /iscsi

/iscsi> create iqn.2021-08.xmmup.com:rac-21c-shared-disks

Created target iqn.2021-08.xmmup.com:rac-21c-shared-disks.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

/iscsi> ls

o- iscsi ……………………………………………………………………………………………….. [Targets: 1]

o- iqn.2021-08.xmmup.com:rac-21c-shared-disks ……………………………………………………………….. [TPGs: 1]

o- tpg1 ……………………………………………………………………………………. [no-gen-acls, no-auth]

o- acls ……………………………………………………………………………………………… [ACLs: 0]

o- luns ……………………………………………………………………………………………… [LUNs: 0]

o- portals ………………………………………………………………………………………… [Portals: 1]

o- 0.0.0.0:3260 …………………………………………………………………………………………. [OK]

/iscsi> cd /iscsi/iqn.2021-08.xmmup.com:rac-21c-shared-disks/tpg1/luns

/iscsi/iqn.20…sks/tpg1/luns> create /backstores/block/ocr1

Created LUN 0.

/iscsi/iqn.20…sks/tpg1/luns> create /backstores/block/ocr2

Created LUN 1.

/iscsi/iqn.20…sks/tpg1/luns> create /backstores/block/ocr3

Created LUN 2.

/iscsi/iqn.20…sks/tpg1/luns> create /backstores/block/mgmt1

Created LUN 3.

/iscsi/iqn.20…sks/tpg1/luns> create /backstores/block/mgmt2

Created LUN 4.

/iscsi/iqn.20…sks/tpg1/luns> create /backstores/block/mgmt3

Created LUN 5.

/iscsi/iqn.20…sks/tpg1/luns> create /backstores/block/data1

Created LUN 6.

/iscsi/iqn.20…sks/tpg1/luns> create /backstores/block/data2

Created LUN 7.

/iscsi/iqn.20…sks/tpg1/luns> create /backstores/block/data3

Created LUN 8.

/iscsi/iqn.20…sks/tpg1/luns> create /backstores/block/fra1

Created LUN 9.

/iscsi/iqn.20…sks/tpg1/luns> create /backstores/block/fra2

Created LUN 10.

/iscsi/iqn.20…sks/tpg1/luns> create /backstores/block/fra3

Created LUN 11.

/iscsi/iqn.20…sks/tpg1/luns> ls

o- luns ………………………………………………………………………………………………….. [LUNs: 12]

o- lun0 ……………………………………………………….. [block/ocr1 (/dev/vg_oracle/lv_ocr1) (default_tg_pt_gp)]

o- lun1 ……………………………………………………….. [block/ocr2 (/dev/vg_oracle/lv_ocr2) (default_tg_pt_gp)]

o- lun2 ……………………………………………………….. [block/ocr3 (/dev/vg_oracle/lv_ocr3) (default_tg_pt_gp)]

o- lun3 ……………………………………………………… [block/mgmt1 (/dev/vg_oracle/lv_mgmt1) (default_tg_pt_gp)]

o- lun4 ……………………………………………………… [block/mgmt2 (/dev/vg_oracle/lv_mgmt2) (default_tg_pt_gp)]

o- lun5 ……………………………………………………… [block/mgmt3 (/dev/vg_oracle/lv_mgmt3) (default_tg_pt_gp)]

o- lun6 ……………………………………………………… [block/data1 (/dev/vg_oracle/lv_data1) (default_tg_pt_gp)]

o- lun7 ……………………………………………………… [block/data2 (/dev/vg_oracle/lv_data2) (default_tg_pt_gp)]

o- lun8 ……………………………………………………… [block/data3 (/dev/vg_oracle/lv_data3) (default_tg_pt_gp)]

o- lun9 ……………………………………………………….. [block/fra1 (/dev/vg_oracle/lv_fra1) (default_tg_pt_gp)]

o- lun10 ………………………………………………………. [block/fra2 (/dev/vg_oracle/lv_fra2) (default_tg_pt_gp)]

o- lun11 ………………………………………………………. [block/fra3 (/dev/vg_oracle/lv_fra3) (default_tg_pt_gp)]

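3.1.5. Create the ACLs with targetcli

Specify which initiators are allowed to connect to this IQN:

Change to the acls directory and create ACLs for the two clients. Reference commands: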

cd /iscsi/iqn.2021-08.xmmup.com:rac-21c-shared-disks/tpg1/acls

create iqn.2021-08.xmmup.com:rac-21c-shared-disks:client62

create iqn.2021-08.xmmup.com:rac-21c-shared-disks:client63
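The target compares each ACL name with the connecting client's InitiatorName, so the two must match exactly. The client side is written to /etc/iscsi/initiatorname.iscsi in section 3.2.1. This small sketch derives both strings from one suffix so they cannot drift apart. It assumes the client62/client63 naming used here, and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: derive both sides of the ACL from one client suffix so the server
# ACL and the client InitiatorName cannot drift apart. Helper names are
# illustrative; the IQN and suffixes are the ones used in this section.
IQN=iqn.2021-08.xmmup.com:rac-21c-shared-disks

initiator_name() {            # full initiator IQN for one client suffix
  echo "$IQN:$1"
}

gen_acl_cmds() {              # server side: one targetcli ACL per client
  local c
  for c in "$@"; do
    echo "targetcli /iscsi/$IQN/tpg1/acls create $(initiator_name "$c")"
  done
}

gen_initiatorname_line() {    # client side: content of /etc/iscsi/initiatorname.iscsi
  echo "InitiatorName=$(initiator_name "$1")"
}
```

For example, `gen_initiatorname_line client63` prints the line node 2 needs.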

Execution:

/iscsi/iqn.20…sks/tpg1/luns> cd /iscsi/iqn.2021-08.xmmup.com:rac-21c-shared-disks/tpg1/acls

/iscsi/iqn.20…sks/tpg1/acls> ls

o- acls …………………………………………………………………………………………………… [ACLs: 0]

/iscsi/iqn.20…sks/tpg1/acls> create iqn.2021-08.xmmup.com:rac-21c-shared-disks:client62

Created Node ACL for iqn.2021-08.xmmup.com:rac-21c-shared-disks:client62

Created mapped LUN 0.

Created mapped LUN 1.

Created mapped LUN 2.

Created mapped LUN 3.

Created mapped LUN 4.

Created mapped LUN 5.

Created mapped LUN 6.

Created mapped LUN 7.

Created mapped LUN 8.

Created mapped LUN 9.

Created mapped LUN 10.

Created mapped LUN 11.

/iscsi/iqn.20…sks/tpg1/acls> create iqn.2021-08.xmmup.com:rac-21c-shared-disks:client63

Created Node ACL for iqn.2021-08.xmmup.com:rac-21c-shared-disks:client63

Created mapped LUN 0.

Created mapped LUN 1.

Created mapped LUN 2.

Created mapped LUN 3.

Created mapped LUN 4.

Created mapped LUN 5.

Created mapped LUN 6.

Created mapped LUN 7.

Created mapped LUN 8.

Created mapped LUN 9.

Created mapped LUN 10.

Created mapped LUN 11.

/iscsi/iqn.20…sks/tpg1/acls> ls

o- acls …………………………………………………………………………………………………… [ACLs: 2]

o- iqn.2021-08.xmmup.com:rac-21c-shared-disks:client62 ………………………………………………… [Mapped LUNs: 12]

| o- mapped_lun0 ……………………………………………………………………………… [lun0 block/ocr1 (rw)]

| o- mapped_lun1 ……………………………………………………………………………… [lun1 block/ocr2 (rw)]

| o- mapped_lun2 ……………………………………………………………………………… [lun2 block/ocr3 (rw)]

| o- mapped_lun3 …………………………………………………………………………….. [lun3 block/mgmt1 (rw)]

| o- mapped_lun4 …………………………………………………………………………….. [lun4 block/mgmt2 (rw)]

| o- mapped_lun5 …………………………………………………………………………….. [lun5 block/mgmt3 (rw)]

| o- mapped_lun6 …………………………………………………………………………….. [lun6 block/data1 (rw)]

| o- mapped_lun7 …………………………………………………………………………….. [lun7 block/data2 (rw)]

| o- mapped_lun8 …………………………………………………………………………….. [lun8 block/data3 (rw)]

| o- mapped_lun9 ……………………………………………………………………………… [lun9 block/fra1 (rw)]

| o- mapped_lun10 ……………………………………………………………………………. [lun10 block/fra2 (rw)]

| o- mapped_lun11 ……………………………………………………………………………. [lun11 block/fra3 (rw)]

o- iqn.2021-08.xmmup.com:rac-21c-shared-disks:client63 ………………………………………………… [Mapped LUNs: 12]

o- mapped_lun0 ……………………………………………………………………………… [lun0 block/ocr1 (rw)]

o- mapped_lun1 ……………………………………………………………………………… [lun1 block/ocr2 (rw)]

o- mapped_lun2 ……………………………………………………………………………… [lun2 block/ocr3 (rw)]

o- mapped_lun3 …………………………………………………………………………….. [lun3 block/mgmt1 (rw)]

o- mapped_lun4 …………………………………………………………………………….. [lun4 block/mgmt2 (rw)]

o- mapped_lun5 …………………………………………………………………………….. [lun5 block/mgmt3 (rw)]

o- mapped_lun6 …………………………………………………………………………….. [lun6 block/data1 (rw)]

o- mapped_lun7 …………………………………………………………………………….. [lun7 block/data2 (rw)]

o- mapped_lun8 …………………………………………………………………………….. [lun8 block/data3 (rw)]

o- mapped_lun9 ……………………………………………………………………………… [lun9 block/fra1 (rw)]

o- mapped_lun10 ……………………………………………………………………………. [lun10 block/fra2 (rw)]

o- mapped_lun11 ……………………………………………………………………………. [lun11 block/fra3 (rw)]

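3.1.6. Configure the target's listening IP and port

Change to the portals directory, delete the default 0.0.0.0:3260 portal and create one on 192.168.59.63: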

cd /iscsi/iqn.2021-08.xmmup.com:rac-21c-shared-disks/tpg1/portals

delete 0.0.0.0 3260

create 192.168.59.63

Execution transcript:

/iscsi/iqn.20…sks/tpg1/acls> cd /iscsi/iqn.2021-08.xmmup.com:rac-21c-shared-disks/tpg1/portals

/iscsi/iqn.20…/tpg1/portals> ls

o- portals ……………………………………………………………………………………………… [Portals: 1]

o- 0.0.0.0:3260 ………………………………………………………………………………………………. [OK]

/iscsi/iqn.20…/tpg1/portals> delete 0.0.0.0 3260

Deleted network portal 0.0.0.0:3260

/iscsi/iqn.20…/tpg1/portals> create 192.168.59.63

Using default IP port 3260

Created network portal 192.168.59.63:3260.

/iscsi/iqn.20…/tpg1/portals> ls

o- portals ……………………………………………………………………………………………… [Portals: 2]

o- 192.168.59.63:3260 …………………………………………………………………………………………. [OK]

/iscsi/iqn.20…/tpg1/portals>

3.1.7. Save the configuration

cd /

saveconfig

Note: the iSCSI target configuration is saved to /etc/target/saveconfig.json, which you can inspect.

/iscsi/iqn.20…/tpg1/portals> cd /

/> saveconfig

Configuration saved to /etc/target/saveconfig.json

/>

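3.2. Client configuration

The clients, that is node 1 and node 2, both need to be configured.

3.2.1. Install and start the service

yum -y install iscsi-initiator-utils

# Note: node 2 is different; there the name must end in "client63"

echo "InitiatorName=iqn.2021-08.xmmup.com:rac-21c-shared-disks:client62" > /etc/iscsi/initiatorname.iscsi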

systemctl enable iscsid

systemctl start iscsid

systemctl status iscsid

# Edit /usr/lib/systemd/system/iscsi.service and add the following lines under "[Service]":

TimeoutStartSec=5sec

TimeoutStopSec=5sec

systemctl daemon-reload
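Files under /usr/lib/systemd/system belong to the package and can be replaced when it is updated. The usual systemd alternative is a drop-in file under /etc/systemd/system/<unit>.d/, which is also what `systemctl edit iscsi` creates. This sketch writes the same two timeout settings as a drop-in. The file name timeout.conf and the ROOT prefix parameter are illustrative choices, not part of the original procedure.

```shell
#!/usr/bin/env bash
# Sketch: put the shortened timeouts in a systemd drop-in instead of editing
# /usr/lib/systemd/system/iscsi.service, which a package update may overwrite.
# $1 is a root prefix so the function can be tried on a scratch directory;
# pass "" on a real node, then run: systemctl daemon-reload
write_iscsi_dropin() {
  local root="$1"
  local dir="$root/etc/systemd/system/iscsi.service.d"
  mkdir -p "$dir"
  cat > "$dir/timeout.conf" <<'EOF'
[Service]
TimeoutStartSec=5sec
TimeoutStopSec=5sec
EOF
  echo "$dir/timeout.conf"    # report where the drop-in was written
}
```

On a node, `write_iscsi_dropin ""` followed by `systemctl daemon-reload` would apply it. `systemctl cat iscsi` then shows the drop-in merged with the packaged unit.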

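3.2.2. Discover the available storage with iscsiadm and log in

# Discover the available storage targets:

iscsiadm -m discovery -t st -p 192.168.59.63

# Log in:

iscsiadm -m node -T iqn.2021-08.xmmup.com:rac-21c-shared-disks -p 192.168.59.63 --login

# If you are reconfiguring, delete the following and reboot the OS for the change to take effect: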

rm -rf /var/lib/iscsi/nodes/*

rm -rf /var/lib/iscsi/send_targets/*
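Each line printed by `iscsiadm -m discovery -t st` has the form `portal:port,tpgt target-iqn`, as in the transcript that follows. This sketch turns such lines into the matching login command, so the IQN never has to be retyped. It assumes IPv4 portals, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: turn discovery output lines such as
#   192.168.59.63:3260,1 iqn.2021-08.xmmup.com:rac-21c-shared-disks
# into iscsiadm login commands. Assumes IPv4 portals (IPv6 portals contain
# extra colons and brackets and would need different parsing).
login_cmds_from_discovery() {
  local portal_tpgt iqn portal
  while read -r portal_tpgt iqn; do
    [ -n "$iqn" ] || continue          # skip blank or malformed lines
    portal="${portal_tpgt%%,*}"        # drop the ",1" target portal group tag
    portal="${portal%%:*}"             # drop ":3260", keeping only the IP
    echo "iscsiadm -m node -T $iqn -p $portal --login"
  done
}
```

Usage: `iscsiadm -m discovery -t st -p 192.168.59.63 | login_cmds_from_discovery` prints the command to review. After logging in, `iscsiadm -m session` lists the active sessions.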

Execution transcript:

[root@raclhr-21c-n1 ~]# iscsiadm -m discovery -t st -p 192.168.59.63

192.168.59.63:3260,1 iqn.2021-08.xmmup.com:rac-21c-shared-disks

[root@raclhr-21c-n1 ~]# iscsiadm -m node -T iqn.2021-08.xmmup.com:rac-21c-shared-disks -p 192.168.59.63 --login

Logging in to [iface: default, target: iqn.2021-08.xmmup.com:rac-21c-shared-disks, portal: 192.168.59.63,3260] (multiple)

Login to [iface: default, target: iqn.2021-08.xmmup.com:rac-21c-shared-disks, portal: 192.168.59.63,3260] successful.

At this point, fdisk -l | grep dev shows all the new devices:

[root@raclhr-21c-n1 ~]# fdisk -l | grep dev

….

Disk /dev/sdd: 1073 MB, 1073741824 bytes, 2097152 sectors

Disk /dev/sde: 1073 MB, 1073741824 bytes, 2097152 sectors

Disk /dev/sdf: 1073 MB, 1073741824 bytes, 2097152 sectors

Disk /dev/sdg: 10.7 GB, 10737418240 bytes, 20971520 sectors

Disk /dev/sdh: 10.7 GB, 10737418240 bytes, 20971520 sectors

Disk /dev/sdi: 10.7 GB, 10737418240 bytes, 20971520 sectors

Disk /dev/sdj: 16.1 GB, 16106127360 bytes, 31457280 sectors

Disk /dev/sdk: 16.1 GB, 16106127360 bytes, 31457280 sectors

Disk /dev/sdl: 16.1 GB, 16106127360 bytes, 31457280 sectors

Disk /dev/sdm: 10.7 GB, 10737418240 bytes, 20971520 sectors

Disk /dev/sdn: 10.7 GB, 10737418240 bytes, 20971520 sectors

Disk /dev/sdo: 10.7 GB, 10737418240 bytes, 20971520 sectors
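fdisk reports decimal units (1 GB = 10^9 bytes), while targetcli and LVM report binary GiB (2^30 bytes). That is why the 15.0GiB data LUNs appear here as 16.1 GB. Dividing the byte counts by 2^30 recovers the sizes planned on the storage server. The parser assumes the `Disk /dev/sdX: ..., N bytes, ...` format shown above, and the function names are illustrative.

```shell
#!/usr/bin/env bash
# Convert byte counts printed by fdisk into GiB (2^30 bytes), the unit
# targetcli and LVM use. Integer division is enough here because every LUN
# in this lab is a whole number of GiB.
bytes_to_gib() {
  echo $(( $1 / (1 << 30) ))
}

# Print "<device> <GiB>" for each "Disk /dev/sdX: ..., N bytes, ..." line,
# assuming the fdisk output format shown above (field 5 is the byte count).
fdisk_sizes_gib() {
  local dev bytes
  awk '/^Disk \/dev\/sd/ { sub(":", "", $2); print $2, $5 }' |
  while read -r dev bytes; do
    echo "$dev $(bytes_to_gib "$bytes")"
  done
}
```

For example, `fdisk -l | fdisk_sizes_gib` lists each disk with its size in GiB. You can then match each disk against the targetcli listing by size (1, 10 or 15 GiB).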

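3.2.3. Create and configure the udev rules file

Run the following script. The letters d to o correspond to the shared disks /dev/sdd to /dev/sdo shown above; adjust them to your own device names.

# Shell script that generates the udev rules file for the ASM disks

for i in d e f g h i j k l m n o; do

echo "KERNEL==\"sd*\",ENV{DEVTYPE}==\"disk\",SUBSYSTEM==\"block\",PROGRAM==\"/usr/lib/udev/scsi_id -g -u -d \$devnode\",RESULT==\"`/usr/lib/udev/scsi_id -g -u /dev/sd$i`\", RUN+=\"/bin/sh -c 'mknod /dev/asm-disk$i b \$major \$minor; chown grid:asmadmin /dev/asm-disk$i; chmod 0660 /dev/asm-disk$i'\"" >> /etc/udev/rules.d/99-oracle-asmdevices.rules

done

# Reload the udev rules, then re-trigger the block devices:

/sbin/udevadm control --reload

/sbin/udevadm trigger --type=devices --action=change

# Check the disks

ll /dev/asm*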
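The loop above mixes the rule text with the scsi_id lookup, so the result can only be checked on a node with the real disks. This sketch separates the two parts. `asm_rule` only formats one rule from a device letter and a WWID, and `gen_asm_rules` performs the scsi_id calls. It keeps the original mknod/chown/chmod approach and the grid:asmadmin ownership, both specific to this lab. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build the 99-oracle-asmdevices.rules lines used in this section.
# asm_rule only formats text, so it can be checked without real disks.
SCSI_ID=/usr/lib/udev/scsi_id

asm_rule() {   # $1 = device letter (d..o), $2 = WWID reported by scsi_id
  local wwid="$2" dev="/dev/asm-disk$1"
  # \$devnode, \$major and \$minor stay literal: udev expands them, not the shell
  printf '%s\n' "KERNEL==\"sd*\",ENV{DEVTYPE}==\"disk\",SUBSYSTEM==\"block\",PROGRAM==\"$SCSI_ID -g -u -d \$devnode\",RESULT==\"$wwid\", RUN+=\"/bin/sh -c 'mknod $dev b \$major \$minor; chown grid:asmadmin $dev; chmod 0660 $dev'\""
}

gen_asm_rules() {  # device letters as arguments; needs root and the real disks
  local l
  for l in "$@"; do
    asm_rule "$l" "$("$SCSI_ID" -g -u "/dev/sd$l")"
  done
}
```

Usage: `gen_asm_rules d e f g h i j k l m n o > /etc/udev/rules.d/99-oracle-asmdevices.rules`. Writing with `>` instead of `>>` keeps a rerun from duplicating every rule.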

Execution transcript:

[root@raclhr-21c-n1 ~]# for i in d e f g h i j k l m n o;do

> echo "KERNEL==\"sd*\",ENV{DEVTYPE}==\"disk\",SUBSYSTEM==\"block\",PROGRAM==\"/usr/lib/udev/scsi_id -g -u -d \$devnode\",RESULT==\"`/usr/lib/udev/scsi_id -g -u /dev/sd$i`\", RUN+=\"/bin/sh -c 'mknod /dev/asm-disk$i b \$major \$minor; chown grid:asmadmin /dev/asm-disk$i; chmod 0660 /dev/asm-disk$i'\"" >> /etc/udev/rules.d/99-oracle-asmdevices.rules

> done

[root@raclhr-21c-n1 ~]# cat /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="360014054269a99f369e49f3b96c36a1f", RUN+="/bin/sh -c 'mknod /dev/asm-diskd b $major $minor; chown grid:asmadmin /dev/asm-diskd; chmod 0660 /dev/asm-diskd'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="360014050e1a3ca0338b4ec98c3fd3549", RUN+="/bin/sh -c 'mknod /dev/asm-diske b $major $minor; chown grid:asmadmin /dev/asm-diske; chmod 0660 /dev/asm-diske'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="360014059475c72da0e44253b4377a355", RUN+="/bin/sh -c 'mknod /dev/asm-diskf b $major $minor; chown grid:asmadmin /dev/asm-diskf; chmod 0660 /dev/asm-diskf'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36001405cf669852f98240dd9596e1808", RUN+="/bin/sh -c 'mknod /dev/asm-diskg b $major $minor; chown grid:asmadmin /dev/asm-diskg; chmod 0660 /dev/asm-diskg'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36001405120d8ce03eb94720ac9ffa91a", RUN+="/bin/sh -c 'mknod /dev/asm-diskh b $major $minor; chown grid:asmadmin /dev/asm-diskh; chmod 0660 /dev/asm-diskh'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36001405095565b2b64b4085bf3b13184", RUN+="/bin/sh -c 'mknod /dev/asm-diski b $major $minor; chown grid:asmadmin /dev/asm-diski; chmod 0660 /dev/asm-diski'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36001405647c901386824b27aceedf72f", RUN+="/bin/sh -c 'mknod /dev/asm-diskj b $major $minor; chown grid:asmadmin /dev/asm-diskj; chmod 0660 /dev/asm-diskj'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36001405030340160f0b4393becae3f60", RUN+="/bin/sh -c 'mknod /dev/asm-diskk b $major $minor; chown grid:asmadmin /dev/asm-diskk; chmod 0660 /dev/asm-diskk'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36001405ef600c31b0034c41ac16391ea", RUN+="/bin/sh -c 'mknod /dev/asm-diskl b $major $minor; chown grid:asmadmin /dev/asm-diskl; chmod 0660 /dev/asm-diskl'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36001405f671bb9a383e4808ad158b573", RUN+="/bin/sh -c 'mknod /dev/asm-diskm b $major $minor; chown grid:asmadmin /dev/asm-diskm; chmod 0660 /dev/asm-diskm'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="360014053294b9b4056841baa79a34d67", RUN+="/bin/sh -c 'mknod /dev/asm-diskn b $major $minor; chown grid:asmadmin /dev/asm-diskn; chmod 0660 /dev/asm-diskn'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36001405b219421f2408475f8b8183eb1", RUN+="/bin/sh -c 'mknod /dev/asm-disko b $major $minor; chown grid:asmadmin /dev/asm-disko; chmod 0660 /dev/asm-disko'"

[root@raclhr-21c-n1 ~]# ll /dev/asm*

ls: cannot access /dev/asm*: No such file or directory

[root@raclhr-21c-n1 ~]# /sbin/udevadm trigger --type=devices --action=change

[root@raclhr-21c-n1 ~]# /sbin/udevadm control --reload

[root@raclhr-21c-n1 ~]# ll /dev/asm*

ls: cannot access /dev/asm*: No such file or directory

[root@raclhr-21c-n1 ~]# ll /dev/asm*

brw-rw---- 1 grid asmadmin 8, 48 Aug 19 15:19 /dev/asm-diskd

brw-rw---- 1 grid asmadmin 8, 64 Aug 19 15:19 /dev/asm-diske

brw-rw---- 1 grid asmadmin 8, 80 Aug 19 15:19 /dev/asm-diskf

brw-rw---- 1 grid asmadmin 8, 96 Aug 19 15:19 /dev/asm-diskg

brw-rw---- 1 grid asmadmin 8, 112 Aug 19 15:19 /dev/asm-diskh

brw-rw---- 1 grid asmadmin 8, 128 Aug 19 15:19 /dev/asm-diski

brw-rw---- 1 grid asmadmin 8, 144 Aug 19 15:19 /dev/asm-diskj

brw-rw---- 1 grid asmadmin 8, 160 Aug 19 15:19 /dev/asm-diskk

brw-rw---- 1 grid asmadmin 8, 176 Aug 19 15:19 /dev/asm-diskl

brw-rw---- 1 grid asmadmin 8, 192 Aug 19 15:19 /dev/asm-diskm

brw-rw---- 1 grid asmadmin 8, 208 Aug 19 15:19 /dev/asm-diskn

brw-rw---- 1 grid asmadmin 8, 224 Aug 19 15:19 /dev/asm-disko

[root@raclhr-21c-n1 ~]# lsscsi

[0:0:0:0] disk VMware, VMware Virtual S 1.0 /dev/sda

[0:0:1:0] disk VMware, VMware Virtual S 1.0 /dev/sdb

[0:0:2:0] disk VMware, VMware Virtual S 1.0 /dev/sdc

[3:0:0:0] disk LIO-ORG ocr1 4.0 /dev/asm-diskd

[3:0:0:1] disk LIO-ORG ocr2 4.0 /dev/asm-diske

[3:0:0:2] disk LIO-ORG ocr3 4.0 /dev/asm-diskf

[3:0:0:3] disk LIO-ORG mgmt1 4.0 /dev/asm-diskg

[3:0:0:4] disk LIO-ORG mgmt2 4.0 /dev/asm-diskh

[3:0:0:5] disk LIO-ORG mgmt3 4.0 /dev/asm-diski

[3:0:0:6] disk LIO-ORG data1 4.0 /dev/asm-diskj

[3:0:0:7] disk LIO-ORG data2 4.0 /dev/asm-diskk

[3:0:0:8] disk LIO-ORG data3 4.0 /dev/asm-diskl

[3:0:0:9] disk LIO-ORG fra1 4.0 /dev/asm-diskm

[3:0:0:10] disk LIO-ORG fra2 4.0 /dev/asm-diskn

[3:0:0:11] disk LIO-ORG fra3 4.0 /dev/asm-disko

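4. Prepare the database software

4.1. Upload the installation software

Use SecureFX or Xshell's FTP tool to upload the db-home and grid-home installation archives to the /soft directory.

Note: verify the MD5 checksums of the installation packages: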

[root@raclhr-21c-n1 ~]# cd /soft

[root@raclhr-21c-n1 soft]# ll

total 5401812

-rw-r--r-- 1 root root 3109225519 Aug 16 09:58 LINUX.X64_213000_db_home.zip

-rw-r--r-- 1 root root 2422217613 Aug 16 09:57 LINUX.X64_213000_grid_home.zip

[root@raclhr-21c-n1 soft]# md5sum LINUX.X64_213000_grid_home.zip

b3fbdb7621ad82cbd4f40943effdd1be LINUX.X64_213000_grid_home.zip

[root@raclhr-21c-n1 soft]# md5sum LINUX.X64_213000_db_home.zip

8ac915a800800ddf16a382506d3953db LINUX.X64_213000_db_home.zip
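Comparing checksums by eye is error-prone. GNU md5sum can do the comparison itself in check mode (`-c`): it reads `<digest>  <file>` lines and exits non-zero on a mismatch. This is a minimal sketch with a hypothetical helper name. The expected digest should come from the download source, not from the file being checked.

```shell
#!/usr/bin/env bash
# Sketch: compare a file against an expected MD5 using md5sum's check mode,
# which exits non-zero on a mismatch and prints nothing with --status.
verify_md5() {   # $1 = file, $2 = expected md5 hex digest
  # md5sum -c expects "<digest>  <file>" (two spaces); "-" means read stdin
  printf '%s  %s\n' "$2" "$1" | md5sum -c --status -
}
```

For example, `verify_md5 /soft/LINUX.X64_213000_grid_home.zip b3fbdb7621ad82cbd4f40943effdd1be && echo OK` checks the grid archive against the digest recorded above.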

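4.2. Unzip the software

As the grid user:

unzip LINUX.X64_213000_grid_home.zip -d /u01/app/21.3.0/grid

As the oracle user:

unzip LINUX.X64_213000_db_home.zip -d /u01/app/oracle/product/21.3.0/dbhome_1

Unzipping on node 1 is sufficient; the installers copy the software to node 2.

👉 Notes:

1. Do not unzip the two archives at the same time; doing so may cause unexpected errors.

2. Check the unzip output for problems by searching it for the keyword "error".

Note: starting with 12c R2 (and therefore also in 18c and 21c), the directory the archive is extracted into is itself the grid home. Extract the file directly into the GRID_HOME planned earlier. Before 12c R2, the installer copied the software into the home during installation.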
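Following the two notes above, this sketch extracts one archive at a time, writes the output to a log, and then searches the log for "error". It first skips unzip's per-file lines (inflating:, creating:, linking:, extracting:), because an Oracle home may contain file names that include the word "error". The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: run each extraction on its own and keep its output in a log.
unzip_logged() {   # $1 = zip file, $2 = target home, $3 = log file
  unzip "$1" -d "$2" > "$3" 2>&1
}

# Succeeds (exit 0) if the log mentions "error" in any case, ignoring the
# per-file progress lines whose file names might contain that word.
log_has_errors() { # $1 = log file
  grep -Ev '^[[:space:]]*(inflating|creating|linking|extracting):' "$1" | grep -qi 'error'
}
```

For example, as grid: `unzip_logged /soft/LINUX.X64_213000_grid_home.zip /u01/app/21.3.0/grid /tmp/unzip_grid.log`, then `log_has_errors /tmp/unzip_grid.log && grep -in error /tmp/unzip_grid.log`. Run the oracle-user extraction only after this one finishes. The log paths are examples.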

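5. Pre-installation checks

5.1. Install the cvuqdisk package

Before installing RAC you will usually run the Cluster Verification Utility (CVU). It performs system checks to confirm whether the current configuration meets the requirements.

First check whether the cvuqdisk package is installed:

rpm -qa cvuqdisk

If it is not installed, install it on both nodes with the following commands: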

export CVUQDISK_GRP=oinstall

cd /u01/app/21.3.0/grid/cv/rpm

rpm -ivh cvuqdisk-1.0.10-1.rpm

Copy the package to node 2 and install it there:

scp cvuqdisk-1.0.10-1.rpm root@raclhr-21c-n2:/soft

# Install the cvuqdisk package on node 2

export CVUQDISK_GRP=oinstall

rpm -ivh /soft/cvuqdisk-1.0.10-1.rpm
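`rpm -ivh` fails when the package is already installed, so it helps to check first if this step is rerun. This sketch is an idempotent version with a hypothetical helper name, ensure_cvuqdisk. It keeps CVUQDISK_GRP=oinstall as above, setting the variable only for the rpm call.

```shell
#!/usr/bin/env bash
# Sketch: install cvuqdisk only when it is missing. CVUQDISK_GRP must name
# the Oracle inventory group (oinstall here) in rpm's environment.
ensure_cvuqdisk() {   # $1 = path to cvuqdisk-*.rpm
  if rpm -q cvuqdisk > /dev/null 2>&1; then
    echo "cvuqdisk already installed"
    return 0
  fi
  CVUQDISK_GRP=oinstall rpm -ivh "$1"
}
```

For example, `ensure_cvuqdisk /u01/app/21.3.0/grid/cv/rpm/cvuqdisk-1.0.10-1.rpm` on node 1 and `ensure_cvuqdisk /soft/cvuqdisk-1.0.10-1.rpm` on node 2.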

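5.2. Configure SSH user equivalence

sshUserSetup.sh ships with the extracted GI software; the commands below use its location under oui/prov/resources/scripts in the grid home. Run the two commands on node 1 only, as root:

/u01/app/21.3.0/grid/oui/prov/resources/scripts/sshUserSetup.sh -user grid -hosts "raclhr-21c-n1 raclhr-21c-n2" -advanced -exverify -confirm

/u01/app/21.3.0/grid/oui/prov/resources/scripts/sshUserSetup.sh -user oracle -hosts "raclhr-21c-n1 raclhr-21c-n2" -advanced -exverify -confirm

Answer yes, enter the passwords when prompted, and press Enter at the remaining prompts.

As both the oracle and grid users, test connectivity between the two nodes from each node: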

ssh raclhr-21c-n1 date

ssh raclhr-21c-n2 date

ssh raclhr-21c-n1-priv date

ssh raclhr-21c-n2-priv date

SSH equivalence is configured correctly if the second run no longer prompts for a password and the commands succeed.
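The same test can be run non-interactively. With `-o BatchMode=yes`, ssh fails instead of prompting for a password, so a failure means equivalence is not yet in place for that host. A host key that is not yet in known_hosts also counts as a failure, which is the same condition the manual second run is looking for. This sketch uses the host names from this lab and a hypothetical function name. Run it as grid and as oracle on each node.

```shell
#!/usr/bin/env bash
# Sketch: check passwordless SSH to every public and private host name.
# BatchMode=yes makes ssh fail rather than prompt, so any prompt = FAIL.
HOSTS=(raclhr-21c-n1 raclhr-21c-n2 raclhr-21c-n1-priv raclhr-21c-n2-priv)

check_ssh_equiv() {
  local h rc=0
  for h in "${HOSTS[@]}"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$h" date > /dev/null 2>&1; then
      echo "OK   $h"
    else
      echo "FAIL $h"
      rc=1          # remember the failure but keep checking the other hosts
    fi
  done
  return "$rc"
}
```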

5.3. Cluster hardware check: pre-installation verification

Use Cluster Verification Utility (cvu)

Before installing Oracle Clusterware, use CVU to ensure that your cluster is prepared for an installation:

Oracle provides CVU to perform system checks in preparation for an installation, patch updates, or other system changes. In addition, CVU can generate fixup scripts that can change many kernel parameters to at least the minimum settings required for a successful installation.

Using CVU can help system administrators, storage administrators, and DBAs ensure that everyone has completed the system configuration and preinstallation steps.

./runcluvfy.sh -help

./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -fixup -verbose

Install the operating system package cvuqdisk on both Oracle RAC nodes. Without cvuqdisk, Cluster Verification Utility cannot discover shared disks, and you will receive the error message "Package cvuqdisk not installed" when the Cluster Verification Utility is run (either manually or at the end of the Oracle grid infrastructure installation). Use the cvuqdisk RPM for your hardware architecture (for example, x86_64 or i386). The cvuqdisk RPM can be found on the Oracle grid infrastructure installation media in the rpm directory. In this installation, the grid infrastructure software was extracted to /u01/app/21.3.0/grid on raclhr-21c-n1, so the RPM is in /u01/app/21.3.0/grid/cv/rpm.

Before installing Grid Infrastructure, it is recommended to use CVU to check the pre-installation environment for CRS. Run as the grid user:

su - grid

export CVUQDISK_GRP=oinstall

export CV_NODE_ALL=raclhr-21c-n1,raclhr-21c-n2

/u01/app/21.3.0/grid/runcluvfy.sh stage -pre crsinst -allnodes -fixup -verbose -method root

After Grid Infrastructure is installed, the following check can also be run:

$ORACLE_HOME/bin/cluvfy stage -pre crsinst -n all -verbose -fixup

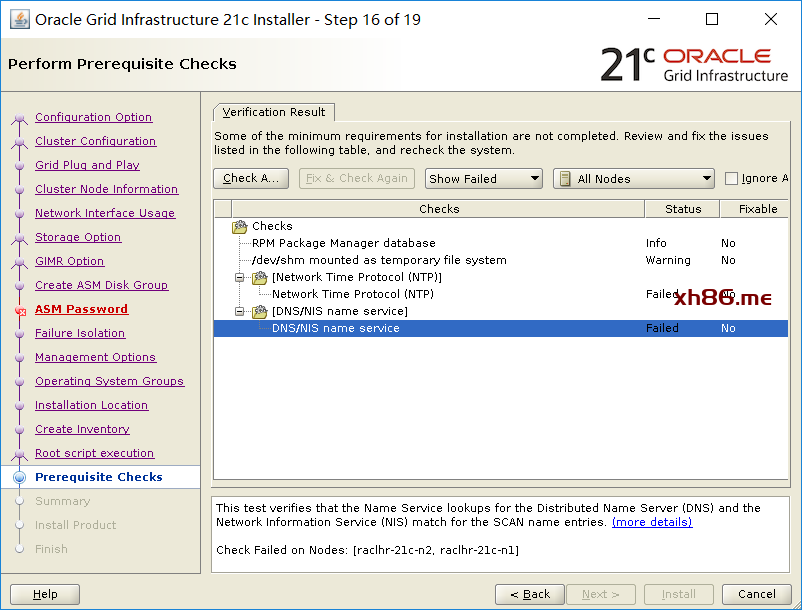

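Checks that do not pass are shown as FAILED. Some failures can be fixed with the generated fixup script, some must be fixed by hand depending on the situation, and some can be ignored.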
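With -verbose the cluvfy report is long. This sketch works on a saved copy of the report and lists only the failed checks, so each one can be triaged. It assumes check lines end in `...FAILED` (three ASCII dots, as cluvfy prints them), and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list the names of failed checks from a saved cluvfy report.
# Assumes lines of the form "Verifying <check name> ...FAILED".
failed_checks() {   # $1 = saved cluvfy output
  grep -E '\.\.\.[[:space:]]*FAILED' "$1" |
    sed -E 's/[[:space:]]*\.\.\.[[:space:]]*FAILED.*$//'   # keep only the check name
}
```

For example, append `| tee /tmp/cluvfy_pre.log` to the runcluvfy.sh command above, then run `failed_checks /tmp/cluvfy_pre.log`. The log path is an example.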

- Error 1:

/dev/shm mounted as temporary file system ...FAILED

raclhr-21c-n2: PRVE-0421 : No entry exists in /etc/fstab for mounting /dev/shm

raclhr-21c-n1: PRVE-0421 : No entry exists in /etc/fstab for mounting /dev/shm

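Reference: http://blog.itpub.net/26736162/viewspace-2214381

On CentOS 7 and RHEL 7, /etc/fstab contains no /dev/shm entry because systemd mounts /dev/shm itself. You can either add the entry manually or ignore this check.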
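If you choose to add the entry, the usual tmpfs line is `tmpfs /dev/shm tmpfs defaults 0 0`. This sketch appends it only when no uncommented /dev/shm entry exists. The function name is hypothetical, and the path is a parameter so the edit can be tried on a copy of fstab first.

```shell
#!/usr/bin/env bash
# Sketch: add a /dev/shm entry to fstab only if none exists (clears PRVE-0421).
# "defaults" keeps the default tmpfs size, which is half of RAM.
ensure_shm_fstab() {   # $1 = fstab path (normally /etc/fstab)
  local fstab="${1:-/etc/fstab}"
  # awk exits 0 if an uncommented line has /dev/shm as its mount point
  if awk '$1 !~ /^#/ && $2 == "/dev/shm" { found = 1 } END { exit !found }' "$fstab"; then
    echo "entry already present"
  else
    printf 'tmpfs\t/dev/shm\ttmpfs\tdefaults\t0 0\n' >> "$fstab"
    echo "entry added"
  fi
}
```

After editing the real /etc/fstab, `mount -o remount /dev/shm` applies the entry's options without a reboot.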

- Error 2:

Systemd login manager IPC parameter ...FAILED

raclhr-21c-n2: PRVE-10233 : Systemd login manager parameter 'RemoveIPC' entry

does not exist or is commented out in the configuration file

"/etc/systemd/logind.conf" on node "raclhr-21c-n2".

[Expected="no"]

raclhr-21c-n1: PRVE-10233 : Systemd login manager parameter 'RemoveIPC' entry

does not exist or is commented out in the configuration file

"/etc/systemd/logind.conf" on node "raclhr-21c-n1".

[Expected="no"]

Reference: http://blog.itpub.net/29371470/viewspace-2125673/

Fix:

echo "RemoveIPC=no" >> /etc/systemd/logind.conf

systemctl daemon-reload

systemctl restart systemd-logind
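With RemoveIPC=yes, systemd-logind removes a user's System V and POSIX IPC objects when that user fully logs out. That is why cluvfy expects the value no. The echo above appends a new line every time it runs. This sketch is an idempotent version with a hypothetical function name. It rewrites an existing, possibly commented, RemoveIPC line and appends one only if none exists.

```shell
#!/usr/bin/env bash
# Sketch: set RemoveIPC=no without accumulating duplicate lines on reruns.
set_remove_ipc_no() {   # $1 = logind.conf path (normally /etc/systemd/logind.conf)
  local conf="${1:-/etc/systemd/logind.conf}"
  if grep -Eq '^[#[:space:]]*RemoveIPC=' "$conf"; then
    # replace the existing (possibly commented-out) setting in place
    sed -i -E 's/^[#[:space:]]*RemoveIPC=.*/RemoveIPC=no/' "$conf"
  else
    echo 'RemoveIPC=no' >> "$conf"
  fi
}
```

Follow it with the same `systemctl daemon-reload` and `systemctl restart systemd-logind` as above.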

- Error 3:

Network Time Protocol (NTP) ...FAILED

raclhr-21c-n2: PRVG-1017 : NTP configuration file "/etc/ntp.conf" is present on

nodes "raclhr-21c-n2,raclhr-21c-n1" on which NTP daemon or

service was not running

raclhr-21c-n1: PRVG-1017 : NTP configuration file "/etc/ntp.conf" is present on

nodes "raclhr-21c-n2,raclhr-21c-n1" on which NTP daemon or

service was not running

We use ctssd to synchronize time across the cluster, so NTP must be disabled. The check fails because /etc/ntp.conf still exists on both nodes even though ntpd is not running; moving that file aside clears the error.
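Oracle's Grid Infrastructure installation guide describes deactivating NTP as three steps: stop ntpd, disable it, and remove /etc/ntp.conf. CTSS then runs in active mode. This sketch covers the file step. The function name and the .original suffix are arbitrary choices, and the path is a parameter so the function can be tried on a scratch file.

```shell
#!/usr/bin/env bash
# Sketch: move the NTP configuration aside so CTSS does not detect NTP.
park_ntp_conf() {   # $1 = ntp.conf path (normally /etc/ntp.conf)
  local conf="${1:-/etc/ntp.conf}"
  if [ -e "$conf" ]; then
    mv "$conf" "$conf.original"   # keep a copy rather than deleting it
    echo "moved $conf to $conf.original"
  else
    echo "$conf not present"
  fi
}
```

On each node, run `systemctl stop ntpd` and `systemctl disable ntpd`, then `park_ntp_conf`. Once the cluster is up, `crsctl check ctss` reports whether CTSS is in active or observer mode.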

六、图形界面安装集群和db

安装之前重启一次OS,并检查网络和共享盘是否正确。

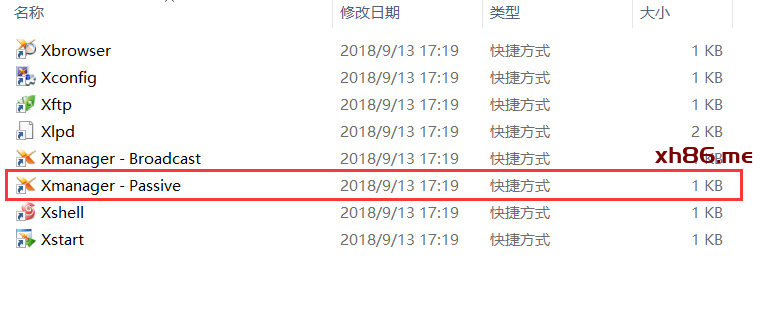

首先,打开Xmanager – Passive,如下:

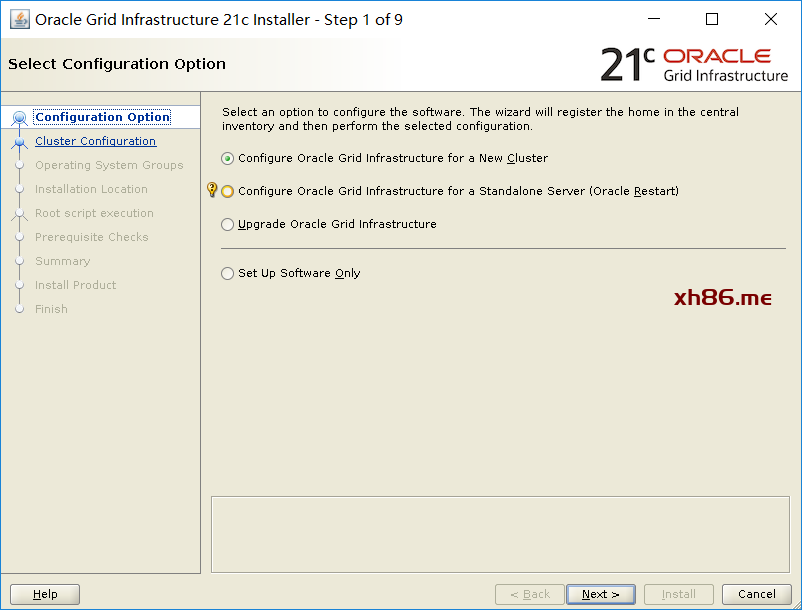

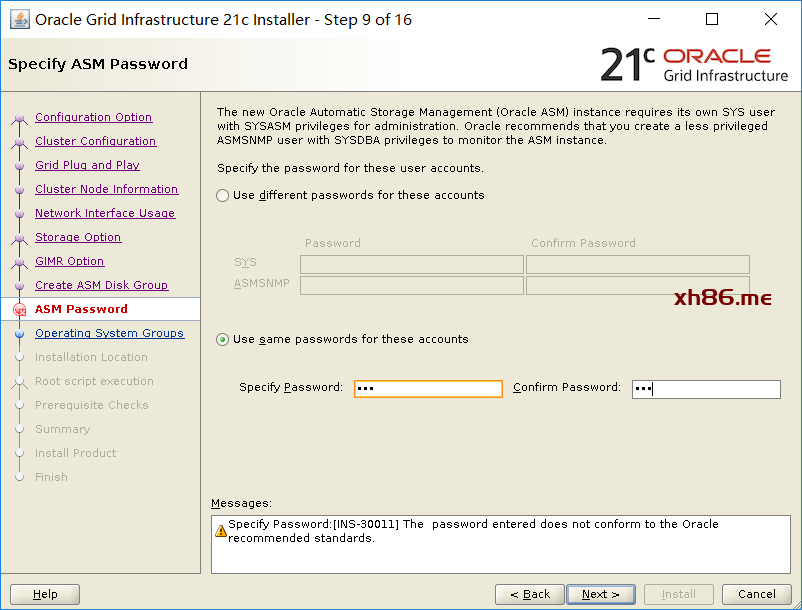

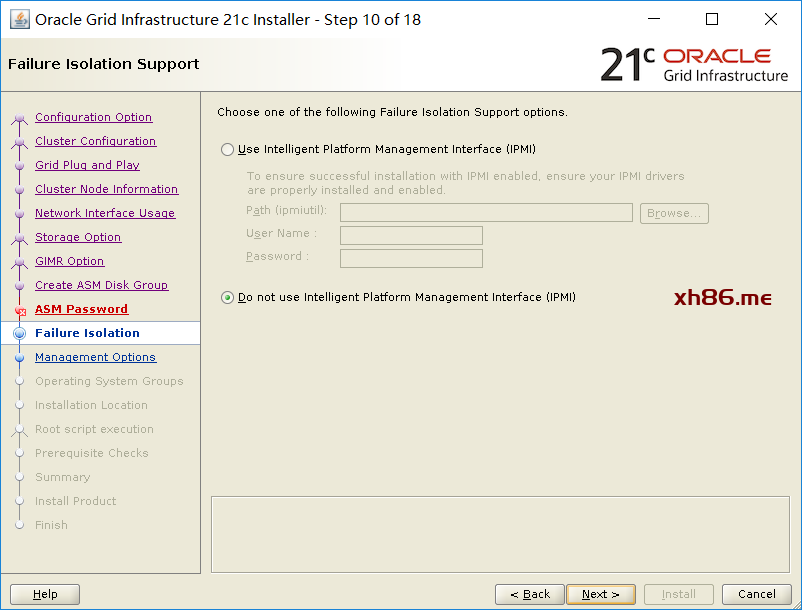

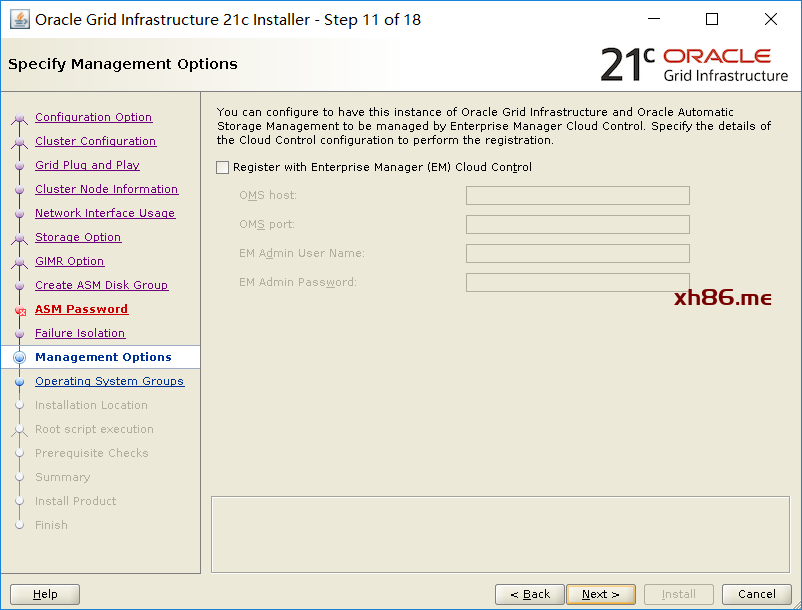

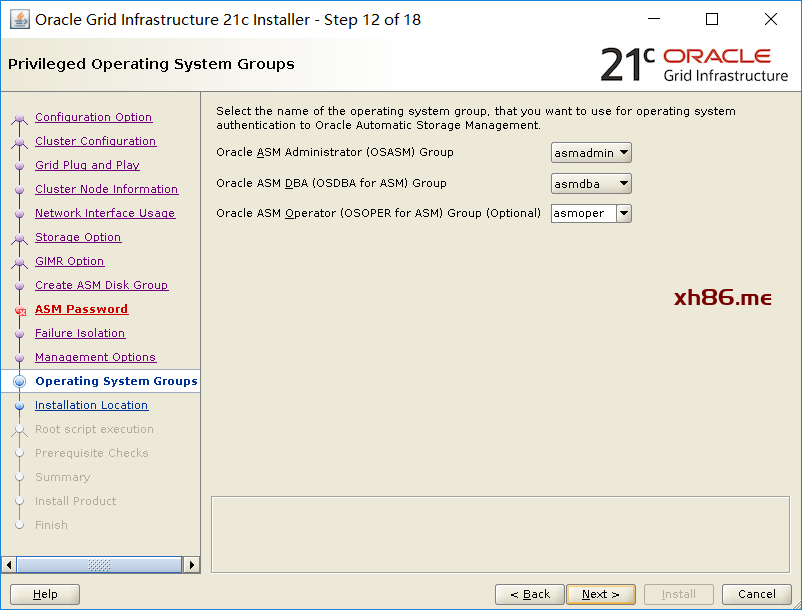

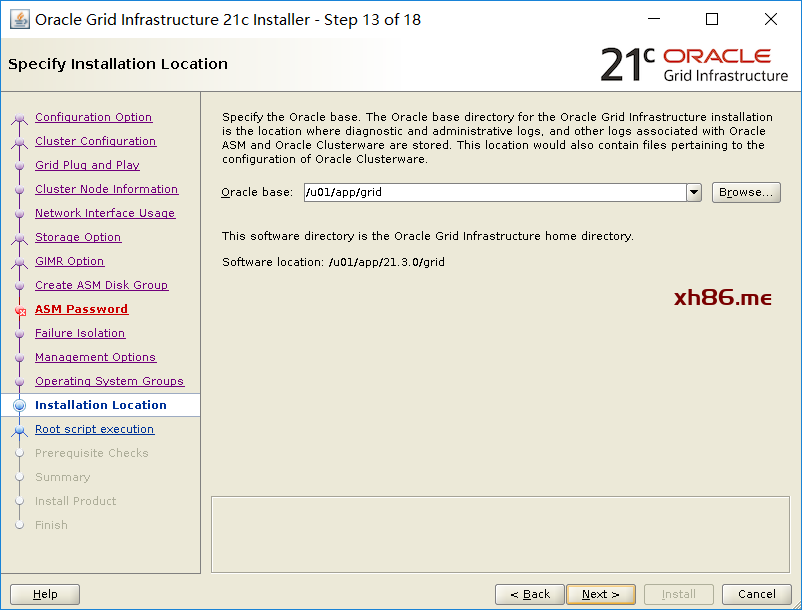

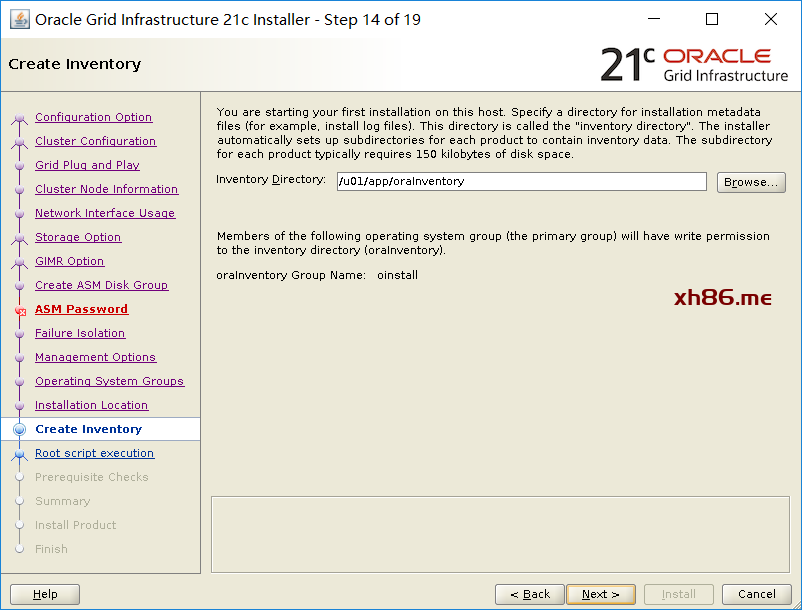

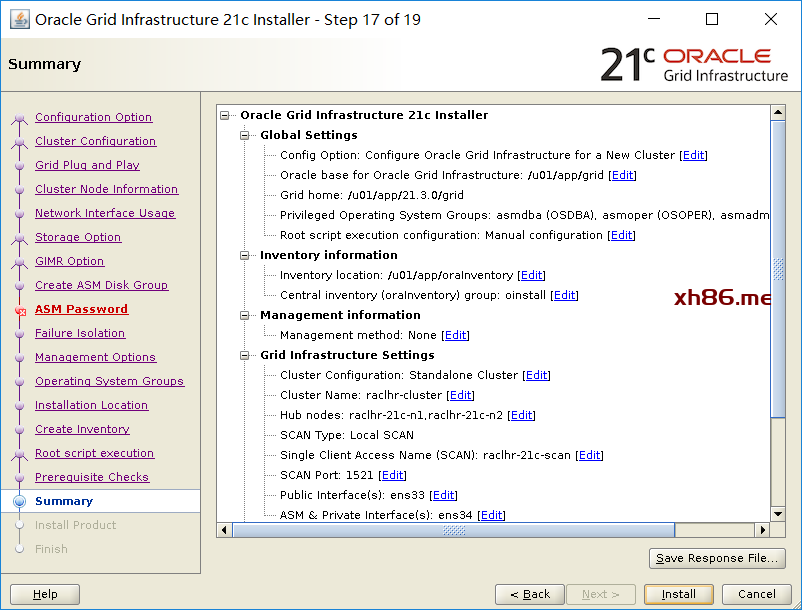

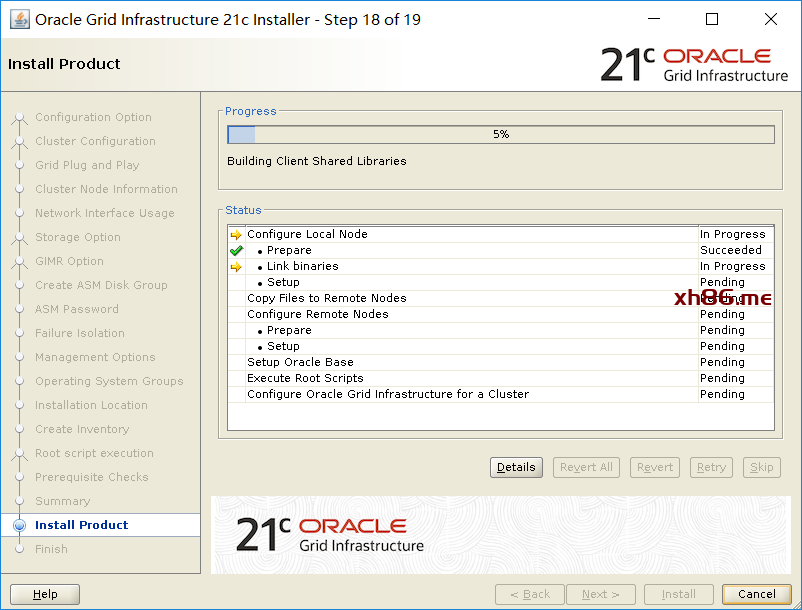

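6.1. Install grid

Note: log in as the grid user and run the script below. This differs from grid installation in earlier releases: since 12c R2 the installer is gridSetup.sh in the grid home rather than runInstaller.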

[grid@raclhr-21c-n1 ~]$ export DISPLAY=192.168.59.1:0.0

[grid@raclhr-21c-n1 ~]$ /u01/app/21.3.0/grid/gridSetup.sh

Launching Oracle Grid Infrastructure Setup Wizard…

Click Add to add node 2, then click Next.

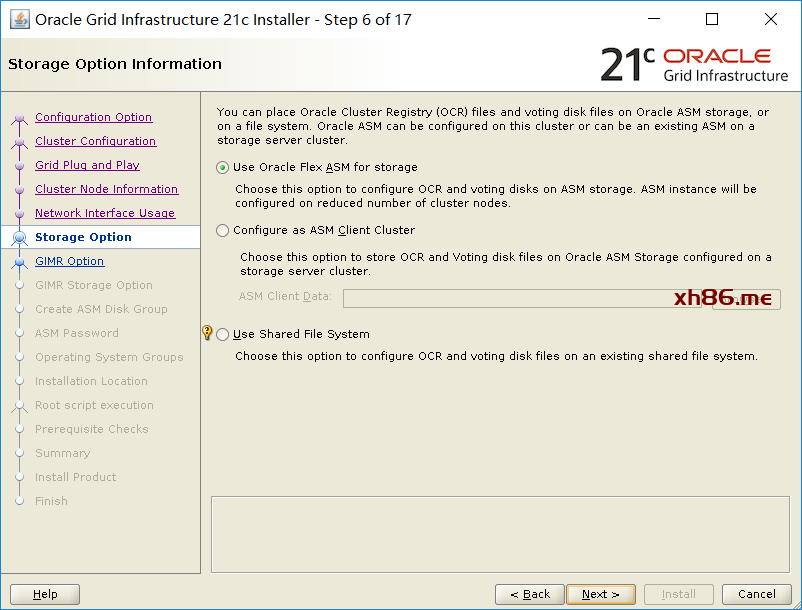

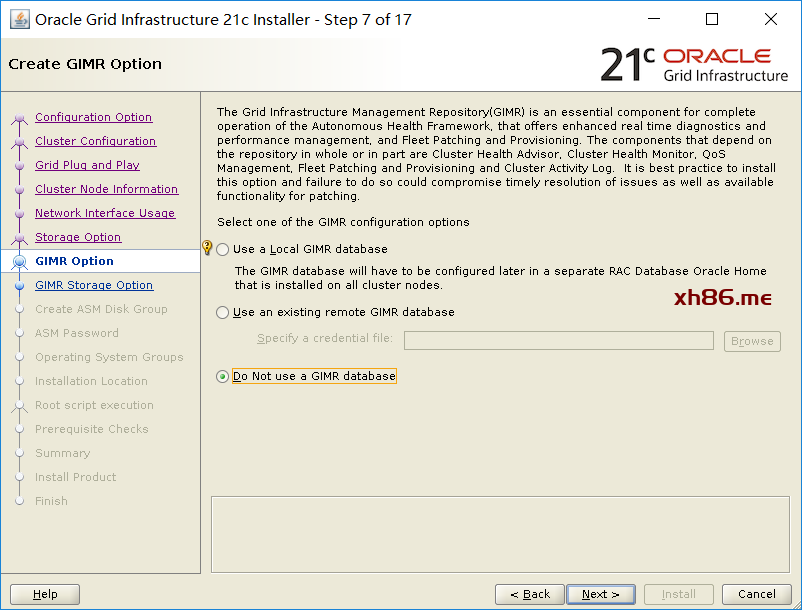

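By default the option not to install the GIMR (Grid Infrastructure Management Repository) is selected, and we also skip the GIMR for now: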

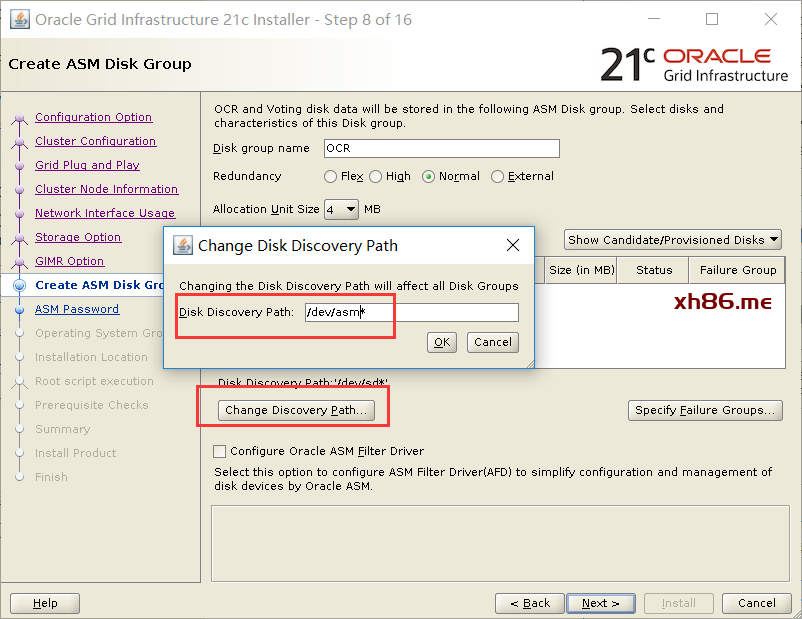

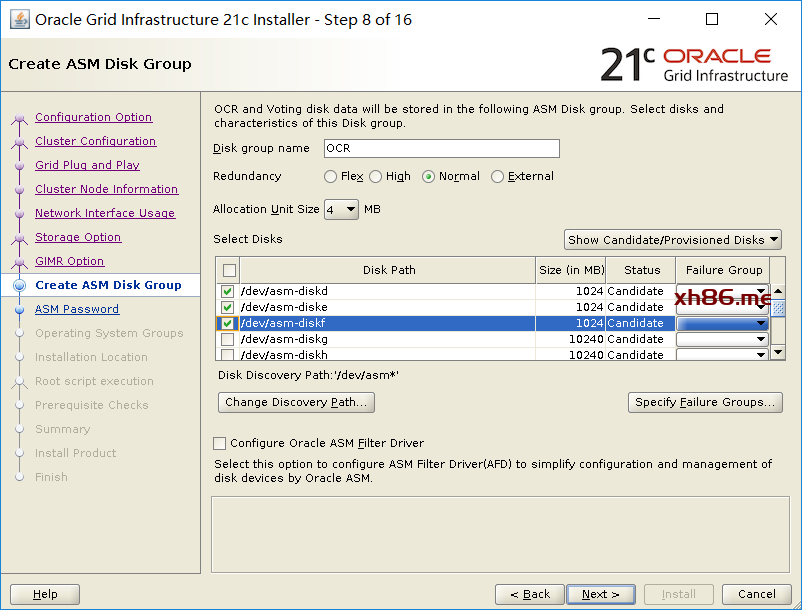

Change the disk discovery path to "/dev/asm*" so that the ASM disks are found.

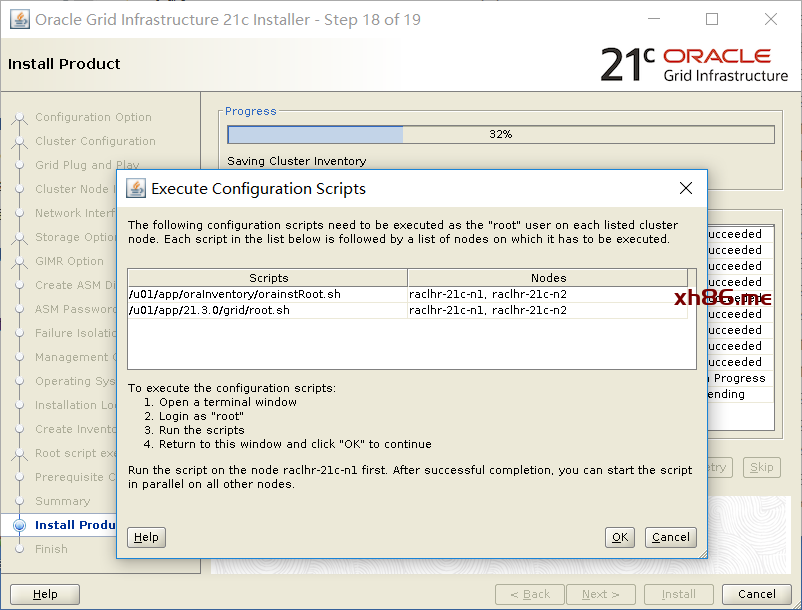

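Ignore all errors and continue the installation.

Run the following on node 1 and then on node 2, letting root.sh finish on node 1 before starting it on node 2: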

/u01/app/oraInventory/orainstRoot.sh

/u01/app/21.3.0/grid/root.sh

On node 1:

[root@raclhr-21c-n1 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@raclhr-21c-n1 ~]# /u01/app/21.3.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/21.3.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin …

Copying oraenv to /usr/local/bin …

Copying coraenv to /usr/local/bin …

Creating /etc/oratab file…

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/21.3.0/grid/crs/install/crsconfig_params

2021-08-20 08:38:46: Got permissions of file /u01/app/grid/crsdata/raclhr-21c-n1/crsconfig: 0775

2021-08-20 08:38:46: Got permissions of file /u01/app/grid/crsdata: 0775

2021-08-20 08:38:46: Got permissions of file /u01/app/grid/crsdata/raclhr-21c-n1: 0775

The log of current session can be found at:

/u01/app/grid/crsdata/raclhr-21c-n1/crsconfig/rootcrs_raclhr-21c-n1_2021-08-20_08-38-46AM.log

2021/08/20 08:38:56 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2021/08/20 08:38:56 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2021/08/20 08:38:56 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2021/08/20 08:38:58 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2021/08/20 08:39:00 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.

Redirecting to /bin/systemctl restart rsyslog.service

2021/08/20 08:39:00 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.

2021/08/20 08:39:00 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.

2021/08/20 08:39:13 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.

2021/08/20 08:39:17 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2021/08/20 08:39:35 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2021/08/20 08:39:35 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2021/08/20 08:39:40 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2021/08/20 08:39:41 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'

2021/08/20 08:39:58 CLSRSC-4002: Successfully installed Oracle Autonomous Health Framework (AHF).

2021/08/20 08:40:14 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2021/08/20 08:40:14 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

2021/08/20 08:40:19 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2021/08/20 08:40:24 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

2021/08/20 08:41:52 CLSRSC-482: Running command: '/u01/app/21.3.0/grid/bin/ocrconfig -upgrade grid oinstall'

CRS-4256: Updating the profile

Successful addition of voting disk e4f4713a678d4f04bf0cce6c0c14692d.

Successful addition of voting disk 7f85f487c7e34f42bff66f6c7cfc6032.

Successful addition of voting disk 408b301b47c84f97bfc109558258b289.

Successfully replaced voting disk group with +OCR.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE e4f4713a678d4f04bf0cce6c0c14692d (/dev/asm-diskd) [OCR]

2. ONLINE 7f85f487c7e34f42bff66f6c7cfc6032 (/dev/asm-diske) [OCR]

3. ONLINE 408b301b47c84f97bfc109558258b289 (/dev/asm-diskf) [OCR]

Located 3 voting disk(s).

2021/08/20 08:43:30 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

2021/08/20 08:44:43 CLSRSC-343: Successfully started Oracle Clusterware stack

2021/08/20 08:44:43 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

2021/08/20 08:47:14 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2021/08/20 08:47:36 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

The final line, "Configure Oracle Grid Infrastructure for a Cluster ... succeeded", indicates success. Once the script has finished, check the cluster resources:

[root@raclhr-21c-n1 ~]# crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE raclhr-21c-n1 STABLE

ora.chad

ONLINE ONLINE raclhr-21c-n1 STABLE

ora.net1.network

ONLINE ONLINE raclhr-21c-n1 STABLE

ora.ons

ONLINE ONLINE raclhr-21c-n1 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE raclhr-21c-n1 STABLE

2 OFFLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.OCR.dg(ora.asmgroup)

1 ONLINE ONLINE raclhr-21c-n1 STABLE

2 OFFLINE OFFLINE STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE raclhr-21c-n1 STABLE

2 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE raclhr-21c-n1 STABLE

2 OFFLINE OFFLINE STABLE

ora.cdp1.cdp

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.cdp2.cdp

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.cdp3.cdp

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.cvu

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.qosmserver

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.raclhr-21c-n1.vip

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.scan1.vip

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.scan2.vip

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.scan3.vip

1 ONLINE ONLINE raclhr-21c-n1 STABLE

--------------------------------------------------------------------------------

On node 2:

[root@raclhr-21c-n2 ~]# /u01/app/21.3.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/21.3.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/21.3.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/raclhr-21c-n2/crsconfig/rootcrs_raclhr-21c-n2_2021-08-20_08-51-42AM.log

2021/08/20 08:51:48 CLSRSC-594: Executing installation step 1 of 19: ‘SetupTFA’.

2021/08/20 08:51:48 CLSRSC-594: Executing installation step 2 of 19: ‘ValidateEnv’.

2021/08/20 08:51:48 CLSRSC-594: Executing installation step 3 of 19: ‘CheckFirstNode’.

2021/08/20 08:51:50 CLSRSC-594: Executing installation step 4 of 19: ‘GenSiteGUIDs’.

2021/08/20 08:51:50 CLSRSC-594: Executing installation step 5 of 19: ‘SetupOSD’.

2021/08/20 08:51:50 CLSRSC-594: Executing installation step 6 of 19: ‘CheckCRSConfig’.

2021/08/20 08:51:51 CLSRSC-594: Executing installation step 7 of 19: ‘SetupLocalGPNP’.

2021/08/20 08:51:52 CLSRSC-594: Executing installation step 8 of 19: ‘CreateRootCert’.

2021/08/20 08:51:52 CLSRSC-594: Executing installation step 9 of 19: ‘ConfigOLR’.

2021/08/20 08:51:52 CLSRSC-594: Executing installation step 10 of 19: ‘ConfigCHMOS’.

2021/08/20 08:52:23 CLSRSC-594: Executing installation step 11 of 19: ‘CreateOHASD’.

2021/08/20 08:52:24 CLSRSC-594: Executing installation step 12 of 19: ‘ConfigOHASD’.

2021/08/20 08:52:25 CLSRSC-330: Adding Clusterware entries to file ‘oracle-ohasd.service’

2021/08/20 08:52:55 CLSRSC-594: Executing installation step 13 of 19: ‘InstallAFD’.

2021/08/20 08:52:55 CLSRSC-594: Executing installation step 14 of 19: ‘InstallACFS’.

2021/08/20 08:52:57 CLSRSC-594: Executing installation step 15 of 19: ‘InstallKA’.

2021/08/20 08:52:58 CLSRSC-594: Executing installation step 16 of 19: ‘InitConfig’.

2021/08/20 08:53:04 CLSRSC-4002: Successfully installed Oracle Autonomous Health Framework (AHF).

2021/08/20 08:53:08 CLSRSC-594: Executing installation step 17 of 19: ‘StartCluster’.

2021/08/20 08:54:09 CLSRSC-343: Successfully started Oracle Clusterware stack

2021/08/20 08:54:09 CLSRSC-594: Executing installation step 18 of 19: ‘ConfigNode’.

2021/08/20 08:54:28 CLSRSC-594: Executing installation step 19 of 19: ‘PostConfig’.

2021/08/20 08:54:38 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster … succeeded
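If the console output scrolls away, you can look up the same success message afterwards. The function below is a minimal sketch, not an Oracle tool. `root_sh_succeeded` is a hypothetical name. It assumes the rootcrs log (the path printed after "The log of current session can be found at:") records the same CLSRSC-325 line that appears on the console. If it does not, point the function at a saved copy of the console output instead.

```shell
# Hypothetical helper (not an Oracle tool): report whether a rootcrs log
# contains the final "CLSRSC-325 ... succeeded" message.
# Usage:
#   root_sh_succeeded /u01/app/grid/crsdata/raclhr-21c-n2/crsconfig/rootcrs_raclhr-21c-n2_2021-08-20_08-51-42AM.log
root_sh_succeeded() {
    local log="$1"
    if [ ! -r "$log" ]; then
        echo "cannot read $log" >&2
        return 2
    fi
    # ".*" accepts both "..." and a typographic ellipsis before "succeeded".
    if grep -q 'CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster .* succeeded' "$log"; then
        echo "root.sh completed successfully"
        return 0
    fi
    echo "CLSRSC-325 success message not found in $log" >&2
    return 1
}
```

The exit status distinguishes an unreadable log (2) from a log that lacks the success line (1). This makes the function usable in an `if` inside a larger script.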

The final line, "Configure Oracle Grid Infrastructure for a Cluster ... succeeded", shows that root.sh completed successfully. Check the cluster resources:

[root@raclhr-21c-n1 ~]# crsctl stat res -t

——————————————————————————–

Name Target State Server State details

——————————————————————————–

Local Resources

——————————————————————————–

ora.LISTENER.lsnr

ONLINE ONLINE raclhr-21c-n1 STABLE

ONLINE ONLINE raclhr-21c-n2 STABLE

ora.chad

ONLINE ONLINE raclhr-21c-n1 STABLE

ONLINE ONLINE raclhr-21c-n2 STABLE

ora.net1.network

ONLINE ONLINE raclhr-21c-n1 STABLE

ONLINE ONLINE raclhr-21c-n2 STABLE

ora.ons

ONLINE ONLINE raclhr-21c-n1 STABLE

ONLINE ONLINE raclhr-21c-n2 STABLE

——————————————————————————–

Cluster Resources

——————————————————————————–

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE raclhr-21c-n1 STABLE

2 ONLINE ONLINE raclhr-21c-n2 STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE raclhr-21c-n2 STABLE

ora.OCR.dg(ora.asmgroup)

1 ONLINE ONLINE raclhr-21c-n1 STABLE

2 ONLINE ONLINE raclhr-21c-n2 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE raclhr-21c-n1 STABLE

2 ONLINE ONLINE raclhr-21c-n2 Started,STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE raclhr-21c-n1 STABLE

2 ONLINE ONLINE raclhr-21c-n2 STABLE

ora.cdp1.cdp

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.cdp2.cdp

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.cdp3.cdp

1 ONLINE ONLINE raclhr-21c-n2 STABLE

ora.cvu

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.qosmserver

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.raclhr-21c-n1.vip

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.raclhr-21c-n2.vip

1 ONLINE ONLINE raclhr-21c-n2 STABLE

ora.scan1.vip

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.scan2.vip

1 ONLINE ONLINE raclhr-21c-n1 STABLE

ora.scan3.vip

1 ONLINE ONLINE raclhr-21c-n2 STABLE

——————————————————————————–
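Reading the `crsctl stat res -t` listing by eye is error-prone. The function below is a rough sketch. `crs_not_online` is a hypothetical helper that reads the listing on standard input and prints every resource line whose Target or State is not ONLINE. It assumes the column layout shown above: an optional instance number, then Target, State, Server and State details. Some resources can legitimately have Target OFFLINE, so treat the output as a list to review rather than a verdict. For example, before node 2 joined, the instance-2 rows of the ASM resources were expected to be OFFLINE.

```shell
# Hypothetical helper: read "crsctl stat res -t" output on stdin and print each
# resource line whose Target or State is not ONLINE.
# Usage (as root or grid):  crsctl stat res -t | crs_not_online
# Exit status: 0 if every status line is ONLINE/ONLINE, 1 otherwise.
crs_not_online() {
    awk '
        BEGIN { bad = 0 }
        # Resource name lines, e.g. ora.asm(ora.asmgroup), carry no status columns.
        /^ora\./ { res = $1; next }
        {
            # Status lines: an optional instance number, then Target and State.
            i = ($1 ~ /^[0-9]+$/) ? 2 : 1
            if ($i == "ONLINE" || $i == "OFFLINE") {
                if ($i != "ONLINE" || $(i + 1) != "ONLINE") {
                    $1 = $1              # rebuild $0 with single spaces
                    print res ": " $0
                    bad = 1
                }
            }
        }
        END { exit bad }
    '
}
```

After both root.sh runs, the listing above should produce no output and exit status 0.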

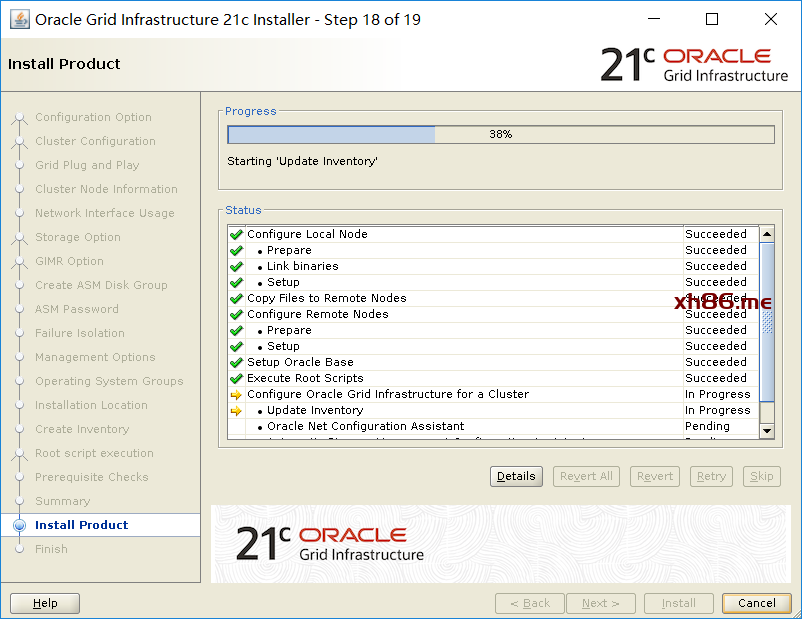

Return to the installer window, click OK, and let it continue:

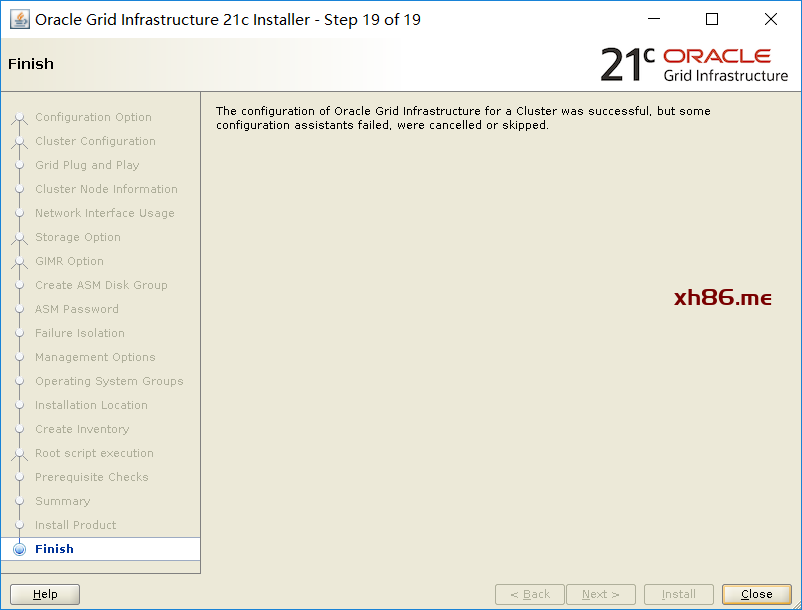

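The final cluster verification step reports errors. They are DNS and NTP check failures (NTP and chrony were disabled in 2.14), which can be ignored here. Continue with the installation: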

6.2、Install the DB software

[root@raclhr-21c-n1 ~]# su - oracle

Last login: Thu Aug 19 15:26:38 CST 2021 on pts/0

[oracle@raclhr-21c-n1 ~]$ cd $ORACLE_HOME

[oracle@raclhr-21c-n1 dbhome_1]$ export DISPLAY=192.168.59.1:0.0

[oracle@raclhr-21c-n1 dbhome_1]$ ./runInstaller
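runInstaller, asmca and dbca are all X11 programs. They fail to start when DISPLAY is unset or when the X server at 192.168.59.1 is not accepting connections. A small pre-flight check, sketched below, can run before any of them. `require_display` is a hypothetical helper. The X server test only runs if `xdpyinfo` is installed, which is assumed to come from the xorg-x11-utils package on this OS.

```shell
# Hypothetical pre-flight check before starting runInstaller, asmca or dbca.
# Usage: require_display && ./runInstaller
require_display() {
    if [ -z "${DISPLAY:-}" ]; then
        echo "DISPLAY is not set, e.g. export DISPLAY=192.168.59.1:0.0" >&2
        return 1
    fi
    # If xdpyinfo is installed, use it to confirm the X server accepts connections.
    if command -v xdpyinfo >/dev/null 2>&1 && ! xdpyinfo >/dev/null 2>&1; then
        echo "cannot connect to the X server at $DISPLAY" >&2
        return 1
    fi
    echo "DISPLAY=$DISPLAY"
    return 0
}
```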

Run the following as root on node 1 and then on node 2:

[root@raclhr-21c-n1 ~]# /u01/app/oracle/product/21.3.0/dbhome_1/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/21.3.0/dbhome_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of “dbhome” have not changed. No need to overwrite.

The contents of “oraenv” have not changed. No need to overwrite.

The contents of “coraenv” have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Once root.sh has finished on both nodes, click OK:
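The same root.sh has to run on both nodes, so you can drive the two runs from one terminal. This is only a sketch. `run_root_sh_on_nodes` and the `DRY_RUN` switch are hypothetical. The function assumes root can ssh to each listed node, which the SSH equivalence set up in 5.2 for the installation users may not cover. `ssh -t` allocates a terminal so you can still answer the "local bin directory" prompt interactively.

```shell
# Hypothetical helper: run the DB home root.sh on each node in turn.
# Assumes root can ssh to every node given on the command line.
# Usage: run_root_sh_on_nodes raclhr-21c-n1 raclhr-21c-n2
DB_ROOT_SH=/u01/app/oracle/product/21.3.0/dbhome_1/root.sh

run_root_sh_on_nodes() {
    local node
    for node in "$@"; do
        echo "== $node: $DB_ROOT_SH"
        # DRY_RUN=1 only prints what would be run.
        if [ "${DRY_RUN:-0}" = 1 ]; then
            continue
        fi
        # -t allocates a terminal so the interactive prompt can be answered.
        if ! ssh -t "root@$node" "$DB_ROOT_SH"; then
            echo "root.sh failed on $node" >&2
            return 1
        fi
    done
}
```

Running it once with `DRY_RUN=1` shows the node order and script path before anything executes.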

6.3、Create the disk groups

As the grid user, run asmca to create the two disk groups, DATA and FRA:

[root@raclhr-21c-n1 ~]# su - grid

Last login: Fri Aug 20 09:59:53 CST 2021

[grid@raclhr-21c-n1 ~]$ export DISPLAY=192.168.59.1:0.0

[grid@raclhr-21c-n1 ~]$ asmca

Verify the disks and their disk groups with kfod:

[grid@raclhr-21c-n1 ~]$ $ORACLE_HOME/bin/kfod disks=all st=true ds=true

——————————————————————————–

Disk Size Header Path Disk Group User Group

================================================================================

1: 1024 MB MEMBER /dev/asm-diskd OCR grid asmadmin

2: 1024 MB MEMBER /dev/asm-diske OCR grid asmadmin

3: 1024 MB MEMBER /dev/asm-diskf OCR grid asmadmin

4: 10240 MB CANDIDATE /dev/asm-diskg # grid asmadmin

5: 10240 MB CANDIDATE /dev/asm-diskh # grid asmadmin

6: 10240 MB CANDIDATE /dev/asm-diski # grid asmadmin

7: 15360 MB MEMBER /dev/asm-diskj DATA grid asmadmin

8: 15360 MB MEMBER /dev/asm-diskk DATA grid asmadmin

9: 15360 MB MEMBER /dev/asm-diskl DATA grid asmadmin

10: 10240 MB MEMBER /dev/asm-diskm FRA grid asmadmin

11: 10240 MB MEMBER /dev/asm-diskn FRA grid asmadmin

12: 10240 MB MEMBER /dev/asm-disko FRA grid asmadmin

——————————————————————————–

ORACLE_SID ORACLE_HOME

================================================================================
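You can also condense the kfod listing into a per-disk-group summary, which makes it easy to confirm that DATA and FRA each received three disks. The awk-based function below is a sketch. `kfod_summary` is a hypothetical name. It assumes the row layout shown above: number, size, `MB`, header status, path, then disk group or `#`. Disks that belong to no disk group are grouped under their header status.

```shell
# Hypothetical helper: summarize "kfod disks=all st=true ds=true" output as
# disk count and total size per disk group. Disks without a group ("#") are
# grouped under their header status, e.g. (CANDIDATE).
# Usage: $ORACLE_HOME/bin/kfod disks=all st=true ds=true | kfod_summary
kfod_summary() {
    awk '
        # Disk rows look like: 1: 1024 MB MEMBER /dev/asm-diskd OCR grid asmadmin
        $1 ~ /^[0-9]+:$/ && $3 == "MB" {
            dg = ($6 == "#") ? "(" $4 ")" : $6
            count[dg]++
            size[dg] += $2
        }
        END {
            for (dg in count)
                printf "%s %d disks %d MB\n", dg, count[dg], size[dg]
        }
    ' | LC_ALL=C sort
}
```

For the listing above this prints `(CANDIDATE) 3 disks 30720 MB`, `DATA 3 disks 46080 MB`, `FRA 3 disks 30720 MB` and `OCR 3 disks 3072 MB`.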

6.4、Create the database

[oracle@raclhr-21c-n1 ~]$ export DISPLAY=192.168.59.1:0.0

[oracle@raclhr-21c-n1 ~]$ dbca

7、Silent installation of the cluster and DB

Before installing, reboot the OS once and check that the network and the shared disks are configured correctly.
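After the reboot, the shared disks should reappear under the same udev names with the same ownership. The sketch below checks this for a list of device paths. `check_asm_devices` is a hypothetical helper. The expected owner and group (`grid` and `asmadmin`) are taken from the kfod listing in 6.3, and `stat -L -c` assumes GNU coreutils. It follows symlinks in case the udev rules create links rather than device nodes. The function covers only the disks, not the network.

```shell
# Hypothetical post-reboot check: every ASM device must exist as a block device
# owned by the expected user and group. stat -L follows udev symlinks.
# Usage (on each node): check_asm_devices grid asmadmin /dev/asm-disk{d..o}
check_asm_devices() {
    local owner="$1" group="$2"
    shift 2
    local dev og rc=0
    for dev in "$@"; do
        if [ ! -b "$dev" ]; then
            echo "NOT-BLOCK $dev"
            rc=1
            continue
        fi
        og=$(stat -L -c '%U:%G' "$dev")
        if [ "$og" = "$owner:$group" ]; then
            echo "OK $dev"
        else
            echo "BAD-OWNER $dev ($og)"
            rc=1
        fi
    done
    return "$rc"
}
```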

7.1、Silent installation of Grid

/u01/app/21.3.0/grid/gridSetup.sh -silent -force -noconfig -ignorePrereq \

oracle.install.responseFileVersion=/oracle/install/rspfmt_crsinstall_response_schema_v21.0.0 \

INVENTORY_LOCATION=/u01/app/oraInventory \

oracle.install.option=CRS_CONFIG \

ORACLE_BASE=/u01/app/grid \

oracle.install.asm.OSDBA=asmdba \

oracle.install.asm.OSOPER=asmoper \

oracle.install.asm.OSASM=asmadmin \

oracle.install.crs.config.scanType=LOCAL_SCAN \

oracle.install.crs.config.gpnp.scanName=raclhr-21c-scan \

oracle.install.crs.config.gpnp.scanPort=1521 \

oracle.install.crs.config.ClusterConfiguration=STANDALONE \

oracle.install.crs.config.configureAsExtendedCluster=false \

oracle.install.crs.config.clusterName=raclhr-cluster \

oracle.install.crs.config.gpnp.configureGNS=false \

oracle.install.crs.config.autoConfigureClusterNodeVIP=false \

oracle.install.crs.config.clusterNodes=raclhr-21c-n1:raclhr-21c-n1-vip,raclhr-21c-n2:raclhr-21c-n2-vip \

oracle.install.crs.config.networkInterfaceList=ens34:192.168.2.0:5,ens33:192.168.59.0:1 \

oracle.install.asm.configureGIMRDataDG=false \

oracle.install.crs.config.useIPMI=false \

oracle.install.asm.storageOption=ASM \

oracle.install.asm.SYSASMPassword=lhr \

oracle.install.asm.diskGroup.name=OCR \

oracle.install.asm.diskGroup.redundancy=NORMAL \

oracle.install.asm.diskGroup.AUSize=4 \

oracle.install.asm.diskGroup.disks=/dev/asm-diskd,/dev/asm-diske,/dev/asm-diskf \

oracle.install.asm.diskGroup.diskDiscoveryString=/dev/asm-* \

oracle.install.asm.monitorPassword=lhr \

oracle.install.asm.configureAFD=false \

oracle.install.crs.configureRHPS=false \

oracle.install.crs.config.ignoreDownNodes=false \

oracle.install.config.managementOption=NONE \

oracle.install.crs.rootconfig.executeRootScript=false
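The least self-explanatory parameter above is `oracle.install.crs.config.networkInterfaceList`. Each comma-separated entry has the form InterfaceName:SubnetAddress:InterfaceType. The response file template shipped with Grid Infrastructure documents the type codes as 1 = PUBLIC, 2 = PRIVATE, 3 = DO NOT USE, 4 = ASM and 5 = ASM & PRIVATE. The hypothetical helper below decodes such a string. It shows that here ens33 (192.168.59.0) is the public network, while ens34 (192.168.2.0) carries both the private interconnect and ASM traffic.

```shell
# Hypothetical helper: decode oracle.install.crs.config.networkInterfaceList.
# Type codes follow the Grid Infrastructure response file template.
# Usage: decode_iface_list 'ens34:192.168.2.0:5,ens33:192.168.59.0:1'
decode_iface_list() {
    local entry name subnet type label
    local IFS=','                 # split the list on commas
    for entry in $1; do
        IFS=':' read -r name subnet type <<< "$entry"
        case "$type" in
            1) label="PUBLIC" ;;
            2) label="PRIVATE" ;;
            3) label="DO NOT USE" ;;
            4) label="ASM" ;;
            5) label="ASM & PRIVATE" ;;
            *) label="UNKNOWN($type)" ;;
        esac
        echo "$name $subnet $label"
    done
}
```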

The run looks like this:

[grid@raclhr-21c-n1 ~]$ /u01/app/21.3.0/grid/gridSetup.sh -silent -force -noconfig -ignorePrereq \

> oracle.install.responseFileVersion=/oracle/install/rspfmt_crsinstall_response_schema_v21.0.0 \

> INVENTORY_LOCATION=/u01/app/oraInventory \

> oracle.install.option=CRS_CONFIG \

> ORACLE_BASE=/u01/app/grid \

> oracle.install.asm.OSDBA=asmdba \

> oracle.install.asm.OSOPER=asmoper \

> oracle.install.asm.OSASM=asmadmin \

> oracle.install.crs.config.scanType=LOCAL_SCAN \

> oracle.install.crs.config.gpnp.scanName=raclhr-21c-scan \

> oracle.install.crs.config.gpnp.scanPort=1521 \

> oracle.install.crs.config.ClusterConfiguration=STANDALONE \

> oracle.install.crs.config.configureAsExtendedCluster=false \

> oracle.install.crs.config.clusterName=raclhr-cluster \

> oracle.install.crs.config.gpnp.configureGNS=false \

> oracle.install.crs.config.autoConfigureClusterNodeVIP=false \